Does Testosterone Affect Human Cognition and Decision-Making?

1450 words

According to a new article published in The Guardian, testosterone does affect human cognition and decision-making. The article, titled “Now we men can blame our hormones: testosterone is trouble,” by Phil Daoust, is yet more media sensationalism against testosterone. Daoust’s article is full of assumptions and conclusions that do not follow from the study he cites on testosterone, cognitive reflection, and decision-making.

The cited article, “Single dose testosterone administration impairs cognitive reflection in men,” states that endogenous testosterone (testosterone produced in the body) is correlated with physical aggression. However, I’ve shown that this is not true. Overall, the authors conclude that exogenous testosterone is related to an increase in irrational thinking and decision-making. There is nothing wrong with concluding that from the data. However, the interpretation Daoust gives this study and the conclusions he draws from it are wrong, mostly due to the same old tales and misconceptions about testosterone.

This is the largest study of the effect of exogenous testosterone on decision-making and cognition. The authors show that among men administered a testosterone gel of the kind used in TRT (testosterone replacement therapy), rubbed into the upper body, “incorrect intuitive answers were more common, and correct answers were less common in the T group, for each of the three CRT questions analyzed separately” (Nave et al, 2017: 8). However, what The Guardian article does not state is that this relationship could be mediated by factors other than testosterone, such as motivation and arithmetic skills.

Nevertheless, those who rubbed themselves with the testosterone gel answered 20 percent fewer questions correctly. This was attributed to the fact that they were more likely to be anxious and not think about the answer. One of the authors also states that testosterone either inhibits the action of mentally checking your work or increases the intuitive feeling that you’re definitely right (since those who rubbed themselves with T gel gave more intuitive answers, implying that the testosterone made them go with the first thought in their head). I have no problems with the paper, other than the fact that gel has an inconsistent absorption rate and high rates of aromatization. The study has a good design and I hope it gets explored more. I do have a problem with Daoust’s interpretation of it, however.

A host of studies have already shown a correlation between elevated testosterone levels and aggression – and now they’re being linked to dumb overconfidence.

The ‘host of studies’ that ‘have already shown a correlation between elevated testosterone levels and aggression’ don’t say what you think they do. This is another case of media sensationalism about testosterone: talking about a hormone they don’t really know anything about.

That won’t help with the marketing – though it may explain Donald Trump and his half-cocked willy-waggling. Perhaps it’s not the president’s brain that’s running things, but the Leydig cells in his testicles.

Nice shot. This isn’t how it works, though. You can’t generalize a study done on college-aged males to a 71-year-old man.

Women aren’t entirely off the hook – their bodies also produce testosterone, though in smaller quantities, and the Caltech study notes that “it remains to be tested whether the effect is generalisable to females” – but for now at least they now have another way to fight the scourge of mansplaining: “You’re talking out of your nuts.”

Another paragraph showing no understanding, even bringing up the term ‘mansplaining’—whatever that means. This article is, clearly, demonizing high T men, and is a great example of the media bias on testosterone studies that I have brought up in the past.

Better still, with the evils of testosterone firmly established, the world may learn to appreciate older men. Around the age of 30, no longer “young, dumb and full of cum”, we typically find our testosterone levels declining, so that with every day that passes we become less aggressive, more rational and generally nicer.

“The evils of testosterone firmly established”: nice job at hiding your bias. Yes, the cited article (Nave et al 2017) does bring up how testosterone is linked to aggression. But, for the millionth time, the correlation between testosterone and aggressive behavior is only .08 (Archer, Graham-Kevan, and Davies, 2005).

Even then, most of the reduction of this ‘evil hormone’ is due to lifestyle changes. It just so happens that around ages 25 to 30, when most men notice a decrease in testosterone levels, men begin to change their lifestyle habits. Marriage decreases testosterone levels (Gray et al, 2002; Burnham et al, 2003; Gray, 2011; Pollet, Cobey, and van der Meij, 2013; Farrelly et al, 2015; Holmboe et al, 2017), as do having children (Gray et al, 2002; Gray et al, 2006; Gettler et al, 2011) and obesity (Palmer et al, 2012; Mazur et al, 2013; Fui, Dupuis, and Grossman, 2014; Jayaraman, Lent-Schochet, and Pike, 2014; Saxbe et al, 2017), and high-carb diets decrease testosterone as well (Silva, 2014). Smoking, on the other hand, is not clearly related to testosterone (Zhao et al, 2016).

So the so-called age-related decline in testosterone is not really age-related at all; it has to do with environmental and social factors, which then decrease testosterone (Shi et al, 2013). Why should a man be ‘happy’ that his testosterone levels are decreasing, largely due to his lifestyle? Low testosterone is related to cardiovascular risk (Maggio and Basaria, 2009), reduced insulin sensitivity (Pitteloud et al, 2005; Grossman et al, 2008), metabolic syndrome (Salam, Kshetrimayum, and Keisam, 2012; Tsujimura et al, 2013), heart attack (Daka et al, 2015), elevated risk of dementia in older men (Carcaillon et al, 2014), muscle loss (Yuki et al, 2013), and stroke and transient ischemic attack (Yeap et al, 2009).

So Phil Daoust’s claims (he is the author of The Guardian article on testosterone) that low testosterone is associated with less aggressive behavior, more rationality, and being nicer in general are wrong. Low testosterone is associated with numerous maladies, and Daoust is trying to make low testosterone out to be ‘a good thing’ while demonizing men with higher levels of testosterone, using cherry-picked studies rather than large meta-analyses like the ones I have cited, which show that testosterone has an extremely low correlation with aggressive behavior.

As I have covered in the past, testosterone levels in the West are declining, along with sperm count and quality. These declines are largely due to social and environmental factors such as obesity, low activity, and an overall change in lifestyle. One (albeit anecdotal) reason I could conjure up has to do with dominance. Testosterone is the dominance hormone, so if testosterone levels are declining, then men must not be showing dominance as much. I would place part of the blame on feminism and on articles like the one reviewed here. So, contra the author’s assertion, lower levels of testosterone into old age are not good, since they signify a change in lifestyle, much of which is within the control of the man in question (I, of course, would not advise anyone against having children or getting married).

Nave et al (2017) lead the way for further research into this phenomenon. If higher doses of exogenous testosterone do indeed inhibit cognitive reflection, then, as the authors note, “The possibility that this widely prescribed treatment has unknown deleterious influences on specific aspects of decision-making should be investigated further and taken into account by users, physicians, and policy makers” (Nave et al, 2017: 11). This is perhaps one of the most important sentences in the whole article. The study is about the application of testosterone gel and its effect on decision-making: the authors are talking about the implications of administering the gel to men and how it affects decision-making and cognitive reflection. This study is NOT generalizable to 1) endogenous testosterone or 2) non-college students. If Daoust understood the paper and the science, he wouldn’t make those assumptions about the Leydig cells in Trump’s testicles “running things”.

Because of the fear surrounding testosterone, good studies like Nave et al (2017) get used to push an agenda by people who don’t understand the hormone. People on the Right and Left both have horrible misconceptions about the hormone, and some cannot interpret studies correctly and draw the correct conclusions from them. Testosterone, endogenous or exogenous, does not cause aggression (Batrinos, 2012). This is an established fact. The testosterone decrease between the ages of 25 and 30 is avoidable if you don’t switch to habits that decrease testosterone. All in all, the testosterone scare is ridiculous. People are scared of the hormone because they don’t understand it.

Daoust didn’t understand the article he cited and drew false conclusions from his misinterpretations. I would be interested to see how men would fare on a cognitive reflection test after, say, their favorite team scored during a game, rather than after being given supraphysiological doses of testosterone gel. Drawing conclusions like Daoust did, however, is wrong and will mislead many more people under the guise of science.

Responses to The Alternative Hypothesis and Robert Lindsay on Testosterone

2300 words

I enjoy reading what other bloggers write about testosterone and its supposed link to crime, aggression, and prostate cancer; I used to believe some of the things they do, since I didn’t have a good understanding of the hormone or its production in the body. However, once you understand how it’s produced in the body, what others say about it will seem like bullshit, because it is. I’ve recently read a few articles on testosterone from the HBD blogosphere and, of course, they have a lot of misconceptions in them, some even using studies I have used myself on this blog to prove my point that testosterone does not cause crime! Now, I know that most people don’t read the studies that are linked, so they take what the article says at face value because, why not, there’s a cite, so what he’s saying must be true, right? Wrong. I will begin by reviewing an article by someone at The Alternative Hypothesis and then review one article from Robert Lindsay on testosterone.

The Alternative Hypothesis

Faulk has great stuff here, but the person who wrote this article, “Testosterone, Race, and Crime,” 1) doesn’t know what he’s talking about and 2) clearly didn’t read the papers he cited. Read the article and you’ll see him make bold claims using studies I have used for my own arguments that testosterone doesn’t cause crime! Let’s take a look.

One factor which explains part of why Blacks have higher than average crime rates is testosterone. Testosterone is known to cause aggression, and Blacks are known to at once have more of it and, for genetic reasons, to be more sensitive to its effects.

- No it doesn’t.

- “Testosterone is known to cause aggression”, but that’s the thing: it is only ‘known’ to cause aggression; it really doesn’t.

- Evidence is mixed on blacks being “… for genetic reasons … more sensitive to its effects” (Update on Androgen Receptor gene—Race/History/Evolution Notes).

Testosterone activity has been linked many times to aggression and crime. Meta-analyses show that testosterone is correlated with aggression among humans and non human animals (Book, Starzyk, and Quinsey, 2001).

Why doesn’t he say what the correlation is? It’s .14 in this study, while Archer, Graham-Kevan, and Davies (2005) reanalyzed the studies used in the previous analysis and found the correlation to be .08. This is a dishonest statement.
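To put the correlations of .14 and .08 in perspective: squaring a correlation coefficient (r²) gives the share of variance two variables have in common. The minimal Python sketch below only does that arithmetic on the two figures quoted in the paragraph above. It is a plain-arithmetic illustration using the standard variance-explained reading of r², not a reanalysis of either meta-analysis, and the names in it are illustrative.

```python
# Share of variance two variables have in common is the square of their correlation.
# The r values are the ones reported in the text above.
CORRELATIONS = {
    "Book, Starzyk, and Quinsey (2001)": 0.14,
    "Archer, Graham-Kevan, and Davies (2005)": 0.08,
}


def variance_explained(r: float) -> float:
    """Return r squared, the proportion of variance shared by two correlated variables."""
    return r * r


for study, r in CORRELATIONS.items():
    # r = 0.14 gives about 2 percent; r = 0.08 gives well under 1 percent
    print(f"{study}: r = {r:.2f}, r^2 = {variance_explained(r):.2%}")
```

Run as is, this prints r² = 1.96% for the 2001 figure and r² = 0.64% for the 2005 reanalysis.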

Women who suffer from a disease known as congenital adrenal hyperplasia are exposed to abnormally high amounts of testosterone and are abnormally aggressive.

Abnormal levels of androgens in the womb for girls with CAH are associated with aggression, while boys with and without CAH are similar in aggression and activity level (Pasterski et al, 2008); yet black women, for instance, don’t have higher levels of testosterone than white women (Mazur, 2016). CAH is just girls showing masculinized behavior; testosterone doesn’t cause the aggression (see Archer, Graham-Kevan, and Davies, 2005).

Artificially increasing the amount of testosterone in a person’s blood has been shown to lead to increases in their level of aggression (Burnham 2007; Kouri et al. 1995).

Actually, no. Supraphysiological levels of testosterone administered to men (200 and 600 mg weekly) did not increase aggression or anger (Batrinos, 2012).

Finally, people in prison have higher than average rates of testosterone (Dabbs et al., 2005).

Dabbs et al don’t untangle correlation from causation. Environmental factors can explain higher testosterone levels in inmates (Mazur, 2016), and even then, some studies show that socially dominant men and aggressive men have the same levels of testosterone (Ehrenkranz, Bliss, and Sheard, 1974).

Thus, testosterone seems to cause both aggression and crime.

No, it doesn’t.

Why Testosterone Does Not Cause Crime

Testosterone and Aggressive Behavior

Furthermore, the studies I could find on testosterone in Africans show that they have lower levels than Western men (Campbell, O’Rourke, and Lipson, 2003; Lucas, Campbell, and Ellison, 2004; Campbell, Gray, and Ellison, 2006), so, along with the studies and articles cited on testosterone, aggression, and crime, that’s another huge blow to the testosterone/crime/aggression hypothesis.

Richard et al. (2014) meta-analyzed data from 14 separate studies and found that Blacks have higher levels of free floating testosterone in their blood than Whites do.

They showed that blacks had 2.5 to 4.9 percent higher testosterone than whites, which could not explain the higher prostate cancer incidence (which meta-analyses call into question; Sridhar et al 2010; Zagars et al 1998). A difference that modest would not be enough to cause differences in aggression either.

Exacerbating this problem even further is the fact that Blacks are more likely than Whites to have low repeat versions of the androgen receptor gene. The androgen reception (AR) gene codes for a receptor by the same name which reacts to androgenic hormones such as testosterone. This receptor is a key part of the mechanism by which testosterone has its effects throughout the body and brain.

The rest of the article talks about CAG repeats and aggressive/criminal behavior, but it seems that whites have fewer CAG repeats than blacks.

Robert Lindsay

This one is much more basic and tiring to rebut, but I’ll do it anyway. Lindsay has a whole slew of articles on testosterone on his blog that show he doesn’t understand the hormone, but I’ll just talk about this one for now: “Black Males and Testosterone: Evolution and Perspectives.”

It was also confirmed by a recent British study (prostate cancer rates are somewhat lower in Black British men because a higher proportion of them have one White parent)

Jones and Chinegwundoh (2014) write: “Caution should be taken prior to the interpretation of these results due to a paucity of research in this area, limited accurate ethnicity data, and lack of age-specific standardisation for comparison. Cultural attitudes towards prostate cancer and health care in general may have a significant impact on these figures, combined with other clinico-pathological associations.”

This finding suggests that the factor(s) responsible for the difference in rates occurs, or first occurs, early in life. Black males are exposed to higher testosterone levels from the very start.

In a study of women in early pregnancy, Ross found that testosterone levels were 50% higher in Black women than in White women (MacIntosh 1997).

I used to believe this, but it’s much more nuanced than that. Black women don’t have higher levels of testosterone than white women (Mazur, 2016). Even then, Lindsay fails to point out that this study was on pregnant women.

According to Ross, his findings are “very consistent with the role of androgens in prostate carcinogenesis and in explaining the racial/ethnic variations in risk” (MacIntosh 1997).

Testosterone has been hypothesized to play a role in the etiology of prostate cancer, because testosterone and its metabolite, dihydrotestosterone, are the principal trophic hormones that regulate growth and function of epithelial prostate tissue.

Testosterone doesn’t cause prostate cancer (Stattin et al, 2003; Michaud, Billups, and Partin, 2015). Diet explains any risk that may be there (Hayes et al, 1999; Gupta et al, 2009; Kheirandish and Chinegwundoh, 2011; Williams et al, 2012; Gathirua-Mwangi and Zhang, 2014). However, in a small population-based study on blacks and whites from South Carolina, Sanderson et al (2017) “did not find marked differences in lifestyle factors associated with prostate cancer by race.”

Regular exercise, however, can decrease PCa incidence in black men (Moore et al, 2010). Many of these differences can be ameliorated, albeit not by a large amount, through environmental interventions such as diet and exercise.

Many studies have shown that young Black men have higher testosterone than young White men (Ellis & Nyborg 1992; Ross et al. 1992; Tsai et al. 2006).

Ellis and Nyborg (1992) found a 3 percent difference. Ross et al (1992) have the same problem as Ross et al (1986), which used about 50 university students as its sample; they’re not representative of the population. Ross et al (1992) also write:

Samples were also collected between 1000 h and 1500 h to avoid confounding by any diurnal variation in testosterone concentrations.

Testosterone levels should be measured close to 8 am. This study has the same timing problem as Ross et al (1986), so I don’t take it seriously due to that confound. Samples were collected “between” 10 am and 3 pm, which means whenever it was convenient for each student. There were no controls on activities, nor any attempt to assay at 8 am. People of any racial group could have gone at any point in that 5-hour window and skewed the results. Assaying “between” those times completely defeats the purpose of the study.

This advantage [the so-called testosterone advantage] then shrinks and eventually disappears at some point during the 30s (Gapstur et al., 2002).

Gapstur et al (2002) help my argument, not yours.

This makes it very difficult if not impossible to explain differing behavioral variables, including higher rates of crime and aggression, in Black males over the age of 33 on the basis of elevated testosterone levels.

See above where I talk about crime/testosterone/aggression.

Critics say that more recent studies done since the early 2000’s have shown no differences between Black and White testosterone levels. Perhaps they are referring to recent studies that show lower testosterone levels in adult Blacks than in adult Whites. This was the conclusion of one recent study (Alvergne et al. 2009) which found lower T levels in Senegalese men than in Western men. But these Senegalese men were 38.3 years old on average.

Alvergne, Fauri, and Raymond (2009) show that the differences are due to environmental factors:

This study investigated the relationship between mens’ salivary T and the trade-off between mating and parenting efforts in a polygynous population of agriculturists from rural Senegal. The men’s reproductive trade-offs were evaluated by recording (1) their pair-bonding/fatherhood status and (2) their behavioral profile in the allocation of parental care and their marital status (i.e. monogamously married; polygynously married).

They also controlled for age, so his statement “But these Senegalese men were 38.3 years old on average” is useless.

These critics may also be referring to various studies by Sabine Rohrmann which show no significance difference in T levels between Black and White Americans. Age is poorly controlled for in her studies.

That is one study out of many that I reference. Rohrmann et al (2007) controlled for age. I like how he simply asserts that “age is poorly controlled for in her studies,” when she did control for age.

That study found that more than 25% of the samples for adults between 30 and 39 years were positive for HSV-2. It is likely that those positive samples had been set aside, thus depleting the serum bank of male donors who were not only more polygamous but also more likely to have high T levels. This sample bias was probably worse for African American participants than for Euro-American participants.

Why would they use diseased samples? Do you even think?

Young Black males have higher levels of active testosterone than European and Asian males. Asian levels are about the same as Whites, but a study in Japan with young Japanese men suggested that the Japanese had lower activity of 5-alpha reductase than did U.S. Whites and Blacks (Ross et al 1992). This enzyme metabolizes testosterone into dihydrotestosterone, or DHT, which is at least eight to 10 times more potent than testosterone. So effectively, Asians have the lower testosterone levels than Blacks and Whites. In addition, androgen receptor sensitivity is highest in Black men, intermediate in Whites and lowest in Asians.

Wu et al (1995) show that Asians have the highest testosterone levels. Evidence is mixed here as well. See above on AR sensitivity.

Ethnicmuse also showed in his meta-analysis that, contrary to popular belief, Asians have higher levels of testosterone than Africans, who in turn have higher levels than Caucasians.

The Androgen Receptor and “masculinization”

Let us look at one study (Ross et al 1986) to see what the findings of a typical study looking for testosterone differences between races shows us. This study gives the results of assays of circulating steroid hormone levels in white and black college students in Los Angeles, CA. Mean testosterone levels in Blacks were 19% higher than in Whites, and free testosterone levels were 21% higher. Both these differences were statistically significant.

Assays were taken between 10 am and 3 pm, the sample of college men was unrepresentative, and there was no control for waist circumference. Horrible study.

A 15% difference in circulating testosterone levels could readily explain a twofold difference in prostate cancer risk.

No, it wouldn’t (if it were true).

Higher testosterone levels are linked to violent behavior.

Causation not untangled.

Studies suggest that high testosterone lowers IQ (Ostatnikova et al 2007). Other findings suggest that increased androgen receptor sensitivity and higher sperm counts (markers for increased testosterone) are negatively correlated with intelligence when measured by speed of neuronal transmission and hence general intelligence (g) in a trade-off fashion (Manning 2007).

Who cares about correlations? Causes matter more. High testosterone doesn’t lower IQ.

Conclusion

Racial differences in testosterone either don’t exist or are extremely small in magnitude (as I’ve covered countless times). The article from TAH misrepresents studies and leaves out important figures on the testosterone differences between the two races to push a certain agenda, but if you read the studies, you see something completely different. It’s the same with Lindsay: he misunderstood a few studies to push his agenda about testosterone, crime, and prostate cancer. They’re both wrong.

Why Testosterone Does Not Cause Crime

Testosterone and Aggressive Behavior

Race, Testosterone, and Prostate Cancer

Population variation in endocrine function—Race/History/Evolution Notes

Racial differences in testosterone are tiring to talk about now, but there are still a few more articles I need to rebut. People read and write about things they don’t understand, which, along with misinterpreting studies, is the cause of these misconceptions about the hormone. Learn about the hormone and you won’t fear it. It doesn’t cause crime, prostate cancer, or aggression; the people who write these articles have one idea in their heads and just go with it. They don’t understand the intricacies of the endocrine system and how sensitive it is to environmental influence. I will cover more articles that others have written on testosterone and aggression to point out what they got wrong.

HBD and Sports: Baseball and Reaction Time

2050 words

If you’ve ever played baseball, then you have first-hand experience of what it takes to play the game; one of the major abilities you need is a quick reaction time. Baseball players are in the upper echelons in regard to pitch recognition and the ability to process information (Clark et al, 2012).

Some people, however, believe that there is an ‘IQ cutoff’ in regard to baseball: since general intelligence is supposedly correlated with reaction time (RT), those with faster RTs must have higher intelligence and vice versa. However, this trait, in a baseball context, is trainable to an extent. To those who would claim that IQ is a meaningful metric in baseball, I pose two questions: would higher-IQ teams, on average, beat lower-IQ teams, and would higher-IQ people have better batting averages (BAs) than lower-IQ people? I doubt it because, as I will cover, these variables are trainable, and therefore talking about reaction time in the MLB in regard to intelligence is useless.

Meden et al (2012) tested athlete and non-athlete college students on visual reaction time (VRT). They tested the athletes’ VRT once, while they tested the non-athletes’ VRT twice a week for 3 weeks, for a total of 6 tests. Men ended up having better VRTs than women, and athletes had better VRTs than non-athletes. Therefore, this study shows that VRT is a trainable variable. If VRT can be improved with training, then hitting and fielding can be trained as well.

Reaction time training targets the communication between the brain, spinal cord, and musculoskeletal system, so it includes both physical and cognitive training.

David Epstein, author of The Sports Gene, says that he has a faster reaction time than Albert Pujols:

One of the big surprises for me was that pro athletes, particularly in baseball, don’t have faster reflexes on average than normal people do. I tested faster than Albert Pujols on a visual reaction test. He only finished in the 66th percentile compared to a bunch of college students.

It’s not a superior RT that baseball players have in comparison to the normal population, says Epstein, but “learned perceptual skills that the MLB players don’t know they learned.” Major League Baseball players do have average reaction times (Epstein, 2013: 1), but far superior visual acuity. Baseball players average a visual acuity of 20/13, with some players having 20/11, while the best visual acuity humanly possible is 20/8 (Clark et al, 2012; Laby et al, 1996). Laby, Kirschen, and Abbatine show that 81 percent of the 1,500 Major and Minor League Mets and Dodgers players had visual acuities of 20/15 or better, with 2 percent of players having a visual acuity of 20/9.2.

So it’s not faster RT that baseball players have, but better visual acuity, on average, in comparison to the general population. Visual reaction time is a highly trainable variable, and since MLB players have countless hours of practice, they will, of course, be superior on that variable.

Clark et al (2012) showed that high-performance vision training can be performed at the beginning of the season and maintained throughout the season to improve batting parameters. They also state that visual training programs can help hitters, since the eyes account for 80 percent of the information taken into the brain. Reichow, Garchow, and Baird (2011) conclude that a “superior ability to recognize pitches presented via tachistoscope may correlate with a higher skill level in batting.” Clark et al (2012) posit that their training program will help batters to better recognize the spot of the ball and the pitcher’s finger position in order to better identify different pitches. Clark et al (2012) conclude:

The University of Cincinnati baseball team, coaches and vision performance team have concluded that our vision training program had positive benefits in the offensive game including batting and may be providing improved play on defense as well. Vision training is becoming part of out pre-season and in season conditioning program as well as for warmups.

Classe et al (1997) showed that VRT was related to batting, but not to fielding or pitching skill. Further, no statistically significant relationship was observed between VRT and age, race, or fielding. Therefore, we can say that VRT does not differ significantly by race and does not contribute to any racial differences in baseball.

Baseball and basketball athletes had faster RTs than non-athletes (Nakamoto and Mori, 2008). The Go/NoGo response that is typical of athletes is most certainly trainable. Kida et al (2005) showed that intensive practice improved Go/NoGo reaction time, but not simple reaction time. Kida et al (2005: 263-264) conclude that simple reaction time is not an accurate indicator of experience, performance, or success in sports; that Go/NoGo reaction time can be improved by practice and is not innate (while simple reaction time was not altered); and that Go/NoGo reaction time can be “theoretically shortened toward a certain value determined by the simple reaction time proper to each individual.”

Compared with a control group, baseball players had a significantly shorter readiness potential (Park, Fairweather, and Donaldson, 2015). Hand-eye coordination, however, had no effect on earned run average (ERA) or batting average in a sample of 410 Major and Minor League members of the LA Dodgers (Laby et al, 1997).

So now we know that VRT can be trained, that VRT shows no significant racial differences, and that Go/NoGo RT can be improved by practice. The question I will now tackle is: can RT tell us anything about success in baseball, and is RT related to intelligence/IQ?

Khodadi et al (2014) conclude that “The relationship between reaction time and IQ is too complicated and revealing a significant correlation depends on various variables (e.g. methodology, data analysis, instrument etc.).” Since the relationship between the two variables is so complicated, depending mostly on the methodology and the instrument used, RT is not a good correlate of IQ. Furthermore, RT can be trained (Dye, Green, and Bavelier, 2012).

In the book A Question of Intelligence, journalist Dan Seligman writes:

In response, Jensen made two points: (1) The skills I was describing involve a lot more than just reaction time, they also depended heavily on physical coordination and endless practice. (2) It was, however, undoubtedly true that there was some IQ requirement (Jensen guessed it might be around 85) below which you could never recruit for major league baseball. (About one-sixth of Americans fall below 85).

I don’t know where Jensen grabbed the ‘IQ requirement’ for baseball, which he claims to be around 85 (which is at the black average in America). This quote, however, proves my point that there is way more than RT involved in hitting a baseball, especially a Major League fastball:

Hitting a baseball traveling at 100 mph is often considered one of the most difficult tasks in all of sports. After all, if you hit the ball only 30% of the time, baseball teams will pay you millions of dollars to play for them. Pitches traveling at 100 mph take just 400 ms to travel from the pitcher to the hitter. Since the typical reaction time is 200 ms, and it takes 100 ms to swing the bat, this leaves just 100 ms of observation time on which the hitter can base his swing.

This lends more credence to the claim that hitting a baseball takes more than quick reflexes. Considerable training can be done to learn the cues that particular pitchers give away, for instance, identifying which pitch a pitcher is throwing from his arm motion coming out of the stretch. As the Epstein quote above shows, this is most definitely a trainable variable.

Babe Ruth, for instance, had better hand-eye coordination than 98.8 percent of the population. That wasn’t why he was one of the greatest hitters of all time, though; he was great because he mastered all of the other variables involved in hitting, which are learnable, not innate.

Witt and Proffitt (2005) showed that apparent ball size is correlated with batting average: the better batters fared at the plate, the bigger they perceived the ball to be, and so the easier it seemed to hit. Hitting has much less to do with reaction time and much more to do with prediction, as well as the pitcher’s pitching style, his repertoire, and numerous other factors.

It takes a 90-95 mph fastball about 400 milliseconds to reach home plate. The hitter’s brain takes 100 milliseconds to process the image his eyes take in and 25 milliseconds to send a signal to his body to swing, and the swing itself takes 150 milliseconds. This leaves the hitter with 125 milliseconds to hit the incoming fastball. Clearly, there is more to hitting than reaction time, especially when all of these variables are in play. Players have .17 seconds to decide whether or not to swing at a pitch and where to place their bat (Clark et al, 2012).
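The two timing budgets, the one in the quoted passage about a 100 mph pitch and the one in the paragraph above, can be laid out as a short Python sketch. The component times are the approximations given in the text, not measurements, and the helper name `remaining_window` is purely illustrative.

```python
# Timing budgets for hitting a fastball, using the approximate figures given in the text.
# All values are in milliseconds.

def remaining_window(flight_ms, costs_ms):
    """Return the time left once every listed cost is subtracted from the pitch's flight time."""
    return flight_ms - sum(costs_ms.values())

# Budget from the quoted passage about a 100 mph pitch.
quoted = {"reaction": 200, "swing": 100}
# Budget from the paragraph on a 90-95 mph fastball.
paragraph = {"visual_processing": 100, "signal_to_body": 25, "swing": 150}

for label, costs in [("quoted passage", quoted), ("paragraph", paragraph)]:
    left = remaining_window(400, costs)
    print(f"{label}: {left} ms left of 400 ms ({left / 400:.0%} of the flight)")
```

Either way, only about a quarter to a third of the ball’s flight is left for the hitter to read the pitch, which is why recognition and prediction matter more than raw RT.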

A so-called ‘IQ cutoff’ for baseball does exist, but only because IQs lower than 85 (especially once you get down into the lower 70s) indicate developmental disorders. Further, the 85-115 IQ range encompasses 68 percent of the population. However, RT is not even one of the most important factors in hitting; numerous other (trainable) variables influence fastball hitting, and all of the best players in the world employ these strategies. And if quick RTs were correlated with baseball proficiency, namely in hitting, then why are Asians (who are often claimed to have quick RTs) only 1.2 percent of MLB players? Maybe because RT doesn’t really have anything to do with hitting proficiency, and other variables have more to do with it.
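Both the 68 percent figure and Seligman’s “about one-sixth” below 85 follow from the normal curve that IQ scores are scaled to. A minimal sketch, assuming a mean of 100 and a standard deviation of 15 (the conventional scaling, not something stated in the article), using Python’s standard-library `statistics.NormalDist`:

```python
from statistics import NormalDist

# Assumes IQ is normally distributed with mean 100 and SD 15 (the usual scaling).
iq = NormalDist(mu=100, sigma=15)

share_85_to_115 = iq.cdf(115) - iq.cdf(85)  # within one SD of the mean
share_below_85 = iq.cdf(85)                  # more than one SD below the mean

print(f"85-115: {share_85_to_115:.1%}")
print(f"below 85: {share_below_85:.1%} (about 1 in {1 / share_below_85:.1f})")
```

This prints roughly 68.3 percent for the 85-115 band and 15.9 percent (about 1 in 6.3) below 85.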

People may assume that, since intelligence and RT are (supposedly) linked and baseball players (supposedly) have quick RTs, baseball players must be intelligent, and therefore there must be an IQ cutoff. However, I’ve shown 2 things: 1) RT isn’t too important to hitting at an elite level and 2) more important skills for hitting fastballs can be acquired, most notably, in my opinion, pitch verification and reading the arm location of the pitcher. Go/NoGo RT can also be trained, and this is, arguably, one of the most important training systems for elite hitting. Clearly, elite hitting is predicated on way more than just a quick RT, and most of the variables involved in elite hitting are most definitely trainable, as reviewed in this article.

Clearly, people make unfounded claims about things they have no experience in. It’s easy to make claims about something when you’re just looking at numbers and attempting to draw conclusions from data. But it’s a whole other ballgame (pun intended) when you’re up at the plate yourself or coaching someone on how to hit or play the infield. These baseless claims would be avoided a lot more often if the people who make them had any actual athletic experience. If they did, they would know of the constant repetition that goes into hitting and fielding: the monotonous drills you have to do every day until your muscle memory is trained to throw a ball from shortstop to first base flawlessly, without even thinking about it.

Practice, especially Major League practice, is pivotal to elite hitting; only with elite practice can a player learn to spot the ball and the pitcher’s finger position, quickly identify the pitch type, and decide whether or not to swing. In conclusion, a whole slew of cognitive/psychological abilities are involved at the upper echelons of baseball; however, a good majority of the traits needed to succeed in baseball are trainable, and RT has little to do with elite hitting.

(When I get time, I’m going to do an analysis similar to the one in the article on my possible retraction of my HBD and baseball article. Blacks dominate in all categories that matter; this holds for non-Hispanic whites and blacks as well as Hispanic blacks and whites. Nevertheless, I may look at the years 1997-2017 to see whether anything has changed since the analysis done in the late 80s. Any commentary on that matter is more than welcome.)

Racial Differences in Testosterone…again

1200 words

Testosterone is a fascinating hormone, and probably the hormone best known to the lay public. What isn’t well-known to the lay public is how the hormone is produced and why it gets elevated. I’ve covered racial differences in testosterone with regard to crime, penis size, and Rushton’s overall misuse of r/K selection theory. In this article, I will talk about what raises and lowers testosterone, and discuss racial differences in testosterone again, since it’s such a fun topic to cover.

JP Rushton writes, in his 1995 article titled Race and Crime: An International Dilemma:

One study, published in the 1993 issue of Criminology by Alan Booth and D. Wayne Osgood, showed clear evidence of a testosterone-crime link based on an analysis of 4,462 U.S. military personnel. Other studies have linked testosterone to an aggressive and impulsive personality, to a lack of empathy, and to sexual behavior.

Booth and Osgood (1993: 93) do state that “This pattern of results supports the conclusions that (1) testosterone is one of a larger constellation of factors contributing to a general latent propensity toward deviance and (2) the influence of testosterone on adult deviance is closely tied to social factors.” However, as I have extensively documented, the correlation between testosterone and aggression is extremely low (Archer, 1991; Book et al, 2001; Archer, Graham-Kevan and Davies, 2005), and therefore testosterone cannot be the cause of crime.

Another reason why testosterone is not the cause of aggression/deviant behavior is the time of day at which most crimes are committed: testosterone levels are highest in the morning, yet most crimes occur later in the day, when levels have fallen. Therefore, testosterone cannot possibly be the cause of crime. I’ve also shown that, contrary to popular belief, blacks don’t have higher levels of testosterone than whites, that testosterone does not cause prostate cancer, and that even if blacks did have these supposedly higher levels of the hormone, it would NOT explain higher rates of crime.

Wu et al (1995) show that, after adjusting for BMI and age, Asian Americans had the highest testosterone levels, African Americans were intermediate, and European Americans were lowest. However, I’ve shown that in larger samples, if there is any difference at all (and a lot of studies show no difference), it is a small advantage favoring blacks. We are then faced with the conclusion that this would explain neither disease prevalence nor higher rates of crime or aggression.

Testosterone, contrary to Rushton’s (1999) assertion, is not a ‘master switch’. Rushton, of course, cites Ross et al (1986), which I’ve tirelessly rebutted: assay times were all over the place (between 10 am and 3 pm), even though testosterone levels are highest at 8 am. The most important physiological variable in Rushton’s model is testosterone, and without his highly selected studies, his narrative falls apart. Testosterone doesn’t cause crime, aggression, or prostate cancer.

The most important takeaway is this: Rushton’s r/K theory hinges on 1) blacks having higher levels of testosterone than whites and 2) these higher levels of testosterone driving higher levels of aggression, which lead to crime, as well as higher rates of prostate cancer. Moreover, Sridhar et al (2010) meta-analyzed 17 articles on racial differences in prostate cancer survival rates. They state in their conclusion that “there are no differences between African American and Whites in survival from prostate cancer.” Zagars et al (1998) show that there were no significant racial differences in serum testosterone. Furthermore, when matched for major prognostic factors, “the outcome for clinically local–regional prostate cancer does not depend on race (6,7,14–19). Moreover there appear to be no racial differences in the response of advanced prostate cancer to androgen ablation (29,47). Our study provides further evidence that racial differences in disease outcome are absent for clinically localized prostate cancer” (Zagars et al, 1998: 521). So these two studies provide further evidence that Rushton et al were wrong about prostate cancer mortality as well.

Rushton (1997: 185) writes:

In any case, socialization cannot account for the early onset of the traits, the speed of dental and other maturational variables, the size of the brain, the number of gametes produced, the physiological differences in testosterone, nor the evidence on cross-cultural consistency.

There are no racial differences in testosterone, and if there were, social factors would explain them. As I’ve noted in the past, testosterone levels are high in young black males with low educational attainment (Mazur, 2016). The higher levels of testosterone in blacks compared to whites (which, if you look at figure 1, are not high at all) are accounted for by honor culture, a social variable. Furthermore, the environment has a more notable effect on testosterone than genetics does at 5 months of age (Carmaschi et al, 2010). Environmental factors greatly influence testosterone (Booth et al, 2006), so Rushton’s claim that “socialization cannot account for the early onset” of “physiological differences in testosterone” is clearly wrong, since environmental influences can be seen in infants as well as adults. Testosterone is strongly mediated by the environment; this is not up for debate.

Testosterone is one of many important hormones in the body, and the races do not differ in it. Therefore, all of Rushton’s ‘r/K predictions’, which literally hinge on testosterone (Lynn, 1990), fall apart without this ‘master switch’ (Rushton, 1999) driving all of these behaviors. Any theory of crime that treats testosterone as a main driver of crime needs to be rethought; numerous studies attest to the fact that testosterone does not cause crime. Racial differences in testosterone appear only in small studies; those studies get touted around, while the better, larger analyses don’t get talked about because they go against a certain narrative.

Finally, a decrease in testosterone in older men is not inevitable. So-called “age-related declines” in the hormone are largely explained by smoking, obesity, chronic disease, marital status, and depression (Shi et al, 2013), and even becoming a father is associated with lower testosterone (Gray, Yang, and Pope, 2006). On top of that, marriage also reduces testosterone: men who went from unmarried to married showed a sharp decline in testosterone over a ten-year period (Holmboe et al, 2017). This corroborates numerous other studies showing that marriage lowers testosterone levels in men (Mazur and Michalek, 1998; Nansunga et al, 2014), though some of this decrease may be lessened by frequent sexual intercourse (Gettler et al, 2013). So if you live a healthy lifestyle, the testosterone decrease that plagues most men won’t happen to you. The decreases are due to lifestyle changes, not explicitly tied to age.

People are afraid of high testosterone levels at a young age and equally terrified of declining levels at an old age. However, I’ve exhaustively shown that testosterone is not the boogeyman, nor the ‘master switch’ (Rushton, 1999) it’s made out to be. There are no ‘genes for’ testosterone; DNA influences its production only indirectly. Thus, if you keep an active lifestyle, don’t become obese, and don’t become depressed, you can bypass the so-called testosterone decrease. The fear mongering on both sides of the ‘testosterone curve’ is seriously blown out of proportion. Testosterone doesn’t cause crime, aggression, or prostate cancer (and even then, large meta-analyses show no difference in PCa mortality between blacks and whites).

The fear of testosterone comes from ignorance of what the hormone does in the body and how it is produced. If people understood the hormone, they would not fear it.

Earlier Evidence for Erectus’ Use of Fire

1600 words

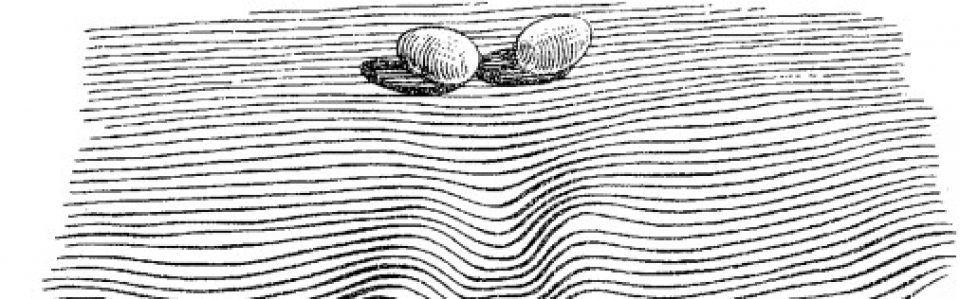

I hold the position that the creation and management of man-made fire was a pivotal driver of the increase in our brain size over the past 2 my. However, evidence for fire use in early hominins is scant; a few promising locations have popped up over the years, the most promising being Wonderwerk Cave in South Africa (Berna et al, 2012). More recently, evidence of fire use by Erectus 1.5 mya was discovered at Koobi Fora, Kenya (Hlubik et al, 2017).

Hlubik et al (2017) identified two potential fire loci at Koobi Fora, Kenya with evidence of fire use 1.5 mya, and Erectus was the hominin in that area at that time. Hlubik et al (2017) conclude the following:

(1) Spatial analysis reveals statistically significant clusters of ecofacts and artifacts, indicating that the archaeological material is in situ and is probably the result of various hominin activities during one or a few occupation phases over a short period of time. (2) We have found evidence of fire associated with Early Stone Age archaeological material in the form of heated basalt (potlids flakes), heated chert, heated bone, and heated rubified sediment. To our knowledge this is, to date, the earliest securely documented evidence of fire in the archaeological context. (3) Spatial analysis shows the presence of two potential fire loci. Both loci contain a few heated items and are characterized by surrounding artifact distributions with strong similarities to the toss and drop zones and ring distribution patterns described for ethnographic and prehistoric hearths (Binford 1983; Henry 2012; Stappert 1998).

This is one of the best sites yet for early hominin fire control. It would also help explain how the biologic/physiologic/anatomic changes occurred in Erectus. Erectus could then afford a larger brain and spend more time on other activities, since he wasn’t constrained to foraging and eating for 8+ hours per day. So, clearly, the advent of fire use in our lineage was one of the most important periods in our evolutionary history, since it let us extract more energy from what we ate.

Carmody et al (2016) identified ‘cooking genes’ that were under selection between 275,000 and 765,000 ya. So, in my opinion, we must have been cooking before 765,000 ya, which would then have brought about the genetic changes due to a large shift in diet toward cooked meat/tubers. Man’s adaptation to cooked food is one of the most important things to occur in our genus, because it allowed us to spend less time eating and more time doing. This change to a higher-quality diet began in early Erectus (Aiello, 1997) and shrank his teeth and gut. If Erectus did not control fire, the reduction in tooth size needs another explanation, but the only way it can be explained is through the use of cooked food.

Around 1.6 mya there is evidence of the first human-like footprints/gait (Steudel-Numbers, 2006; Bennett et al, 2009). This seems to mark the advent of hunting parties and cooperation among hominins to chase prey. The two newly identified fire loci at Koobi Fora lend further credence to the endurance running hypothesis (Carrier, 1984; Bramble and Lieberman, 2004; Mattson, 2012), since without higher-quality nutrition, Erectus would not have had the anatomic changes he did, nor would he have had the ability to hunt, being restricted to foraging, eating, and digesting. Only with higher-quality energy could the human body have evolved. The further socialization from hunting and cooking/eating meat was also pivotal to our brain size and evolution: it allowed our brains to grow, since we had high-quality energy to power our growing set of neurons.

The growth pattern of Nariokotome boy (formerly called Turkana boy), one of the best-preserved Erectus fossils discovered to date, is within the range of modern humans and does not imply a growth pattern different from that of modern humans (Clegg and Aiello, 1999). Nariokotome boy is estimated to be about 1.6 million years old, which implies that Erectus had a high-quality diet similar to ours in order to have a similar morphology. It is also interesting to note that Lake Turkana is near Koobi Fora in Kenya. So it seems that basic human morphology emerged around 1.5-1.6 mya and was driven, in part, by the use and acquisition of fire to cook food.

This is in line with the brain size increase that Fonseca-Azevedo and Herculano-Houzel (2012) observed in their study. Metabolic limitations of herbivorous diets impose constraints on how big a brain can get. Herculano-Houzel and Kaas (2011) state that Erectus had about 62 billion neurons, so given the number of neurons he had to power, he’d have had to eat a raw, herbivorous diet for over 8 hours a day. Modern humans, with our 86 billion neurons (Herculano-Houzel, 2009; 2012), would need to feed for over 9 hours on a raw diet to power our neurons. But, obviously, that’s not practical. So Erectus must have had another way to extract more and higher-quality energy out of his food.

Think about this for a minute. If we ate a raw, plant-based diet, we would have to feed for most of our waking hours. Can you imagine spending what amounts to more than one work day just foraging and eating? The rest of your waking time would be spent mostly digesting what you’ve eaten. This is why cooking was pivotal to our evolution and why Erectus must have had the ability to cook: his estimated neuron count, based on his cranial capacity, shows that he could not have subsisted on a raw, plant-based diet, so the only explanation for how he afforded his larger brain is that he could cook.

Wrangham (2017) goes through the pros and cons of the cooking hypothesis, which hinges on Erectus’ control of fire. Dates for Neanderthal hearths have been appearing later; however, they are nowhere near when Erectus is proposed to have used and controlled fire. As noted above, 1.6 mya is when the human body plan began to emerge (Gowlett, 2016), as can be seen in Nariokotome boy. So if Nariokotome boy had a growth pattern similar to that of modern humans, then he must have been eating a high-quality diet of cooked food.

Wrangham (2017) poses two questions if Erectus did not control fire:

First, how could H. erectus use increased energy, reduce its chewing efficiency, and sleep safely on the ground without fire? Second, how could a cooked diet have been introduced to a raw-foodist, mid-Pleistocene Homo without having major effects on its evolutionary biology? Satisfactory answers to these questions will do much to resolve the tension between archaeological and biological evidence.

I don’t see how these things can be explained without accepting that Erectus did control fire. And now Hlubik et al (2017) lend more credence to the cooking hypothesis. The biological and anatomical evidence is there, and now the archaeological evidence is beginning to line up with what we know about the evolution of our genus, most importantly our brain size, pelvic size, and modern-day gait.

Think about what I said above about time spent foraging and eating. Gorillas, for instance, have large bodies partly due to sexual selection and the large number of kcal they consume; some gorillas have been observed feeding for upwards of 10 hours per day. So, pretty much, you can have brains or you can have brawn, but you can’t have both. Cooking made our large brains possible because it let us extract higher-quality nutrients from our food, releasing the metabolic constraint seen in gorillas. It wouldn’t be possible to power such large brains without higher-quality nutrition.

One of the most important things to note is that Erectus had smaller teeth. That could only occur due to a shift in diet: masticating softer foods leads to a subsequent decrease in tooth and jaw size. Zink and Lieberman (2016) show that although fire use/cooking was important for mastication, slicing meat and pounding tubers improved the ability to break down food by 5 percent and decreased masticatory force requirements by 41 percent. This, too, led to the decrease in jaw/tooth size in Erectus, and the advent of cooking with softer, higher-quality food led to a further decrease on top of what Zink and Lieberman (2016) describe. Zink and Lieberman (2016: 3) themselves state that “the reductions in jaw muscle and dental size that evolved by H. erectus did not require cooking and would have been made possible by the combined effects of eating meat and mechanically processing both meat and USOs.” I disagree and believe that the two hypotheses are complementary: a reduction would have occurred with the introduction of mashed food, and then again when Erectus controlled fire and began cooking meat and tubers.

In conclusion, the brain size increases noted in Erectus’ evolutionary history need to be explained, and one of the best explanations is that he controlled fire and cooked his food. There are numerous lines of evidence that he did, mostly biological in nature, but now archaeological sites are beginning to show just how long ago Erectus began using fire (Berna et al, 2012; Hlubik et al, 2017). Many pivotal events in our history, from smaller teeth and jaws to the biologic and physiologic adaptations that followed the shift to a new diet, can be explained by our shift to softer foods with the advent of cooking. Erectus is a very important hominin to study, because many of our modern-day behaviors began with him, and by better understanding what he did, what he created, and how he lived, we can better understand ourselves.

Racial Differences in Jock Behavior: Implications for STI Prevalence and Deviance

1350 words

The Merriam-Webster dictionary defines jock as “a school or college athlete” and “a person devoted to a single pursuit or interest.” This term, as I previously wrote, holds a lot of predictive power in terms of life success. What kind of racial differences can be found here? As with a lot of life outcomes/predictors, there are racial differences, and they are robust.

Male jocks get more sex, after controlling for age, race, SES, and family cohesion. Being involved in sports is known to decrease sexual promiscuity; however, this effect did not hold for black American jocks, for whom the jock label was associated with higher levels of sexual promiscuity (Miller et al, 2005). Black American jocks reported significantly higher levels of sexual activity than non-black jocks, but the authors did not find that white jocks took fewer risks than their non-jock counterparts.

Black Americans do have a higher rate of STDs compared to the average population (Laumann et al, 1999; Cavanaugh et al, 2010; CDC, 2015). Black females who are enrolled in, or have graduated from, college had a higher STI (sexually transmitted infection) rate (12.4 percent self-reported; 13.4 percent assayed) than white women with less than a high school diploma (6.4 percent self-reported; 2.3 percent assayed) (Annang et al, 2010). I would assume that these black women would be more attracted to black male jocks and thus more likely to acquire STIs, since black males who self-identify as jocks are more sexually promiscuous. It seems that since black male jocks, both in high school and college, are more likely to be sexually promiscuous, this has an effect even on college-educated black females, even though higher educational status otherwise makes one less likely to acquire STIs.

Whites use the ‘jock identity’ in a sports context, whereas blacks use it in terms of the body. Black jocks are more promiscuous and have more sex than white jocks, and I’d bet that black jocks have more STDs than white jocks since they are more likely to have sex. Jock identity, but not athletic activity or school athlete status, was a better predictor of juvenile delinquency in a sample of 600 Western New York students, and this held across gender and race (Miller et al, 2007a). Surprisingly, though, the ‘jock effect’ on crime was not what you would expect: “The hypothesis that effects would be stronger for black adolescents than for their white counterparts, derived from the work of Stark et al. 1987 and Hughes and Coakley (1991), was not supported. In fact, the only clear race difference that did emerge showed a stronger effect of jock identity on major deviance for whites than for blacks” (Miller et al, 2007a).

Miller et al (2007b) found that the term jock means something different to black and white athletes. For whites, the term was associated with athletic ability and competition, whereas for blacks it was associated with physical qualities. Whites, though, were more likely than blacks to self-identify with the jock label (37 percent and 22 percent, respectively). They also found that binge drinking predicted violence amongst family members, but only in non-jocks. The jock identity, for whites but not blacks, was also associated with more non-family violence, and whites were more likely than blacks to carry the aggression from sports into non-sport contexts.

For black American boys, the jock label was a predictor of promiscuity but not of dating. For white American jocks, the label was more closely tied to dating. Miller et al (2005) write:

We suggest that White male jocks may be more likely to be involved in a range of extracurricular status-building activities that translate into greater popularity overall, as indicated by more frequent dating; whereas African American male jocks may be “jocks” in a more narrow sense that does not translate as directly into overall dating popularity. Furthermore, it may be that White teens interpret being a “jock” in a sport context, whereas African American teens see it more in terms of relation to body (being strong, fit, or able to handle oneself physically). If so, then for Whites, being a jock would involve a degree of commitment to the “jock” risk-taking ethos, but also a degree of commitment to the conventionally approved norms with sanctioned sports involvement; whereas for African Americans, the latter commitment need not be adjunct to a jock identity.

It’s interesting to speculate on why whites would be more prone to risk-taking behavior than blacks. I would guess that it has something to do with their perception of themselves as athletes, leading to more aggressive behavior. That said, certain personalities would be more likely to be athletic and thus to refer to themselves as jocks, and the same would hold true for somatotype.

So the term jock seems to mean different things for whites and blacks, and for whites, leads to more aggressive behavior in a non-sport context.

Black and females who self-identified as jocks reported lower grades, whereas white females who self-identified as jocks reported higher grades than white females who did not (Miller et al, 2006). Compared to non-jocks, jocks also reported more misconduct, such as skipping school, cutting class, being sent to the principal’s office, and having their parents come to the school over a disciplinary matter. Boys were more likely than girls to engage in behavior that required disciplinary intervention; boys were also more likely to skip school, have someone called from home, and be sent to the principal’s office. Blacks, of course, reported lower grades than whites, but there was no significant difference in misconduct by race. However, blacks reported fewer absences but more disciplinary action than whites; they were less likely to cut class, but more likely to have someone called from home and slightly more likely to be sent to the principal’s office (Miller et al, 2006).

This study shows that the relationship between athletic ability and good outcomes is not as robust as believed. Athletes and jocks are also different: athletes are held in high regard by the general public, while jocks are seen as dumb and slow and good only at a particular sport and nothing else. Miller et al (2006) also state that this so-called ‘toxic jock effect’ (Miller, 2009; Miller, 2011) is strongest for white boys. Some of these ‘effects’ are binge drinking and heavy drinking, bullying and violence, and sexual risk-taking. However, Miller et al (2006) note that, for this sample at least, “It may be that where academic performance is concerned, the jock label constitutes less of a departure from the norm for white boys than it does for female or black adolescents, thus weakening its negative impact on their educational outcomes.”

The correlation between athletic ability and jock identity was only .31, and it was significant for whites but not blacks (Miller et al, 2007b). Contrary to other studies, they also found that involvement in athletic programs did not deter minor or major adolescent crime. They also falsified the hypothesis that the ‘toxic jock effect’ (Miller, 2009; Miller, 2011) would be stronger for blacks than whites, since whites who self-identified as jocks were more likely to engage in delinquent behavior.
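To put “only .31” in perspective: squaring a correlation coefficient gives the share of variance the two measures have in common. A quick sketch (the helper name `shared_variance` is illustrative, not from the study):

```python
# Proportion of variance two measures share, given their correlation coefficient.
def shared_variance(r):
    """Return r squared: the share of variance in one variable linearly accounted for by the other."""
    return r ** 2

r = 0.31  # correlation between athletic ability and jock identity reported above
print(f"r = {r}, r^2 = {shared_variance(r):.3f} ({shared_variance(r):.1%} of the variance)")
```

A correlation of .31 means the two measures share just under 10 percent of their variance, which is consistent with jock identity and athletic ability being largely distinct.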

In sum, there are racial differences in ‘jock’ behavior, with black jocks more likely to be promiscuous and white jocks more likely to engage in deviant behavior. Black women are more likely to have higher rates of STIs, and part of the reason is sexual activity with black males who self-identify as jocks, as they are more promiscuous than non-jocks. This could explain part of the difference in STI acquisition between blacks and whites. Miller et al argue for discontinuing the use of the term ‘jock’, believing that this would curb deviant behavior in white male populations that refer to themselves as ‘jocks’. I don’t know if that will be the case, but I don’t think there should be ‘word policing’, since people will end up using the term more anyway. Nevertheless, there are racial differences among those who self-identify as jocks, which I will explore more in the future.

Nerds vs. Jocks: Different Life History Strategies?

1150 words

I was alerted to a NEEPS (Northeastern Evolutionary Psychology Society) conference paper, and one of the short abstracts of a talk had a bit about ‘nerds’, ‘jocks’, and differing life history strategies. Surprisingly, the results did not line up with current stereotypes about life outcomes for the two groups.

The Life History of the Nerd and Jock: Reproductive Implications of High School Labels

The present research sought to explore whether labels such as “nerd” and “jock” represent different life history strategies. We hypothesized that self-identified nerds would seek to maximize future reproductive success while the jock strategy would be aimed at maximizing current reproductive success. We also empirically tested Belsky’s (1997) theory of attachment style and life history. A mixed student/community sample was used (n=312, average age = 31) and completed multiple questionnaires on Survey Monkey. Dispelling stereotypes, nerds in high school had a lower income and did not demonstrate a future orientation in regards to reproductive success, although they did have less offspring. Being a jock in high school was related to a more secure attachment style, higher income, and higher perceived dominance. (NEEPS, 2017: 11)

This goes against all conventional wisdom: how could ‘jocks’ have better life outcomes than ‘nerds’ if the stereotype of the bumbling, idiot jock is true?

Future orientation is “the degree to which a collectivity encourages and rewards future-oriented behaviors such as planning and delaying gratification” (House et al, 2004, p. 282). So the fact that self-reported nerds did not show future orientation with regard to reproductive success is a blow to some hypotheses, yet they did have fewer children.

However, other possibilities could explain why so-called nerds have fewer children. For instance, they could be seen as less attractive and desirable; they could be seen as anti-social because they are, more often than not, introverted; or they could simply be focused on other things rather than on procreating or talking to women, and so end up having fewer children as a result. Nevertheless, the fact that nerds ended up with lower incomes than jocks is pretty telling (and obvious).

There are, of course, numerous reasons for a student to join a sport. One of the biggest is that the skills taught in team sports translate to the real world. Most notably, someone who plays sports in high school may become a better leader who can command attention in a room, and this would then carry over to success after high school or college. These results aren’t too shocking, to people who don’t have any biases, anyway.

Why might nerds in high school have had lower incomes in adulthood? One reason could be that their social awkwardness did not translate into dollar signs after graduating from high school or college; they may also have chosen a bad major, or simply not known how to translate their thoughts into real-world success. Athletes, on the other hand, have the confidence that comes from playing sports, and they know how to work with others as a cohesive unit, in contrast to nerds, who are more introverted and avoid being around a lot of people.

Nevertheless, this flies in the face of the stereotype that nerds have greater success after college, since here the jocks, who (supposedly) have nothing beyond their so-called ‘primitive’ athletic ability, had greater success and more money. It also contradicts what others have written in the past about nerds having greater success relative to the average population; this new presentation says otherwise. Thinking about the traits that jocks have in comparison to nerds, it doesn’t seem so strange that jocks would have better life outcomes.

Self-reported nerds, clearly, don’t have the confidence to make the stratospheric amounts of cash that people assume they should make simply because they are knowledgeable in a few areas. Those who could use their athletic ability had more children and greater life success than nerds, which, of course, runs against the stereotypes. Certain stereotypes need to go, because sometimes they do not tell the truth; they are just what people believe ‘sounds good’ in their heads.

If you think about what it would take, on average, to make more money and have great success in life after high school and college, you’ll see that you need to know how to talk to people and how to network, which jocks would know how to do. Nerds, on the other hand, who are more ‘socially isolated’ due to their introverted personalities, would not know much about how to network or how to work with a team as a cohesive unit. This, in my opinion, is one reason for the pattern seen in this sample. You need to know how to talk to people in social settings, and nerds wouldn’t have that ability, relative to jocks anyway.

Jocks, of course, would have higher perceived dominance, since athletes have higher levels of testosterone both at rest and at exhaustion (Cinar et al, 2009). Athletes would have higher levels of testosterone because 1) testosterone levels rise during conflict (and sports are, in essence, simulated conflict) and 2) dominant behavior increases testosterone levels (Booth et al, 2006). So it’s not out of the ordinary that jocks were seen as more dominant than their meek counterparts. In these types of situations, higher levels of testosterone are needed to help prime the body for what it believes is going to occur: competition. Coupled with the fact that jocks are constantly in situations that require dominance, engage in more physical activity than the average person, and need to keep their diet on point to maximize athletic performance, it’s no surprise that jocks showed higher dominance, as they do everything right to keep testosterone levels as high as possible for as long as possible.

I hope there are videos of these presentations, because they all seem pretty interesting, but I’m most interested in finding the video for this one. I will update this post if/when I find a video for it (and for the other presentations listed). It seems that these labels do reflect ‘differing life history strategies’, and, despite what others have argued in the past about nerds having greater success than jocks, the nerds get the short end of the stick.

Why Are People Afraid of Testosterone?

1100 words

The answer to the question of why people are afraid of testosterone is very simple: they do not understand the hormone. People complain about falling birth rates and declining sperm production, yet they believe that having high testosterone makes one a ‘savage’ who ‘cannot control their impulses’. However, anyone who understood the hormone and how vital it is to normal functioning would not say that.

I’ve covered why testosterone does not cause crime by looking at the diurnal variation in the hormone: testosterone levels are highest at 8 am and lowest at 8 pm, while children commit the most crimes at 3 pm and adults at 10 pm. The diurnal variation is key: if testosterone truly did cause crime, then crime rates in both children and adults would be highest in the morning. Instead, as can be seen with children, violence increases when they enter school, go to recess, and leave school, which suggests that these daily social situations, not testosterone levels, account for the timing of the spikes in children’s crime.

I have written a previous article citing a paper by Book et al (2001), who meta-analyzed testosterone studies and found that the correlation between testosterone and aggression was .14. However, that estimate is too high, since they included 15 studies that should not have been included in the analysis; the true correlation is .08 (Archer, Graham-Kevan, and Davies, 2004). Together with the fact that the diurnal variation in testosterone does not line up with crime spikes, this shows that testosterone is not a cause of crime; it is just always at the scene because it prepares the body to deal with a threat. Its presence there does not mean that testosterone itself causes crime.
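To put the two meta-analytic estimates in perspective, a standard rule of thumb is that squaring a correlation coefficient (r²) gives the proportion of variance in one variable linearly accounted for by the other. The sketch below uses only the two coefficients cited above; the function name `variance_explained` is a hypothetical helper for illustration, not something from either paper.

```python
def variance_explained(r: float) -> float:
    """Return r squared: the share of variance in one variable
    linearly accounted for by the other."""
    return r ** 2


# Testosterone-aggression correlations cited in the text
estimates = {
    "Book et al (2001)": 0.14,
    "Archer, Graham-Kevan, and Davies (2004)": 0.08,
}

for source, r in estimates.items():
    share = variance_explained(r)
    # Report r, r^2, and r^2 as a percentage of total variance
    print(f"{source}: r = {r:.2f}, r^2 = {share:.4f} ({share * 100:.2f}% of variance)")
```

By this arithmetic, even the higher estimate (.14) accounts for about 2 percent of the variance in aggression, and the corrected estimate (.08) for well under 1 percent, leaving the rest to other variables.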

One main reason people fear testosterone, and believe that it causes crime and, by extension, aggressive behavior, is racial crime disparities. According to the FBI, black Americans commit a disproportionate share of crime despite being 13 percent of the US population. And since it has been reported that blacks have higher levels of testosterone (Ross et al, 1986; Lynn, 1992; Rushton, 1997; Ellis, 2017), people believe that the supposedly higher levels of testosterone circulating in blacks’ blood, on average, are the ultimate cause of the racial crime disparities in America. See above, though, for why this is not the ultimate cause.

Blacks, contrary to popular belief, don’t have higher levels of testosterone (Gasper et al, 2006; Rohrmann et al, 2007; Lopez et al, 2013; Richard et al, 2014). Even if they did have higher levels, say, the 13 percent difference that is often cited, that would not be the cause of higher rates of crime, nor of higher rates of prostate cancer in blacks compared to whites. What does cause part of the crime differential, in my opinion, is honor culture (Mazur, 2016). The blacks-have-higher-testosterone canard was pushed by Rushton and Lynn to explain higher rates of both prostate cancer and crime in black Americans; however, I have shown that high levels of testosterone do not cause prostate cancer (Stattin et al, 2003; Michaud, Billups, and Partin, 2015). Treating testosterone as a ‘master switch’, as Rushton called it, is the wrong line of research because, clearly, the theories of Lynn, Rushton, and Ellis have been rebutted.

People are scared of testosterone because they do not understand the hormone. Indeed, people complain about lower birth rates and lower sperm counts, yet believe that having high testosterone will make one a high T savage. This is seen in the misconception that injecting anabolic steroids causes higher levels of aggression. Lundholm et al (2014) looked at the criminal histories of men who self-reported drug and steroid use, and conclude: “We found a strong association between self-reported lifetime AAS use and violent offending in a population-based sample of more than 10,000 men aged 20-47 years. However, the association decreased substantially and lost statistical significance after adjusting for other substance abuse. This supports the notion that AAS use in the general population occurs as a component of polysubstance abuse, but argues against its purported role as a primary risk factor for interpersonal violence. Further, adjusting for potential individual-level confounders initially attenuated the association, but did not contribute to any substantial change after controlling for polysubstance abuse.”

The National Institutes of Health (NIH) writes: “In summary, the extent to which steroid abuse contributes to violence and behavioral disorders is unknown. As with the health complications of steroid abuse, the prevalence of extreme cases of violence and behavioral disorders seems to be low, but it may be underreported or underrecognized.” We don’t know whether steroids cause aggression or whether more aggressive athletes are more likely to use them (Freberg, 2009: 424). Clearly, the claims that steroids cause aggressive behavior and crime are overblown, and there is not yet a scientific consensus on the matter. A great documentary on the subject is Bigger, Stronger, Faster, which goes through the myths of testosterone while chronicling the use of illicit drugs in bodybuilding and powerlifting.

People are scared of the hormone testosterone, and by extension anabolic steroids, because they believe the myth of the hulking, high T, aggressive man who will fight at the drop of a hat. However, reality is much more nuanced than this simple view, and psychosocial factors must also be taken into account. Testosterone is not the ‘master switch’ for crime, nor for prostate cancer. This is seen simply in the mismatch between the hormone’s diurnal variation and the peak hours for crime in adolescent and adult populations. The extremely low correlation between testosterone and aggression (.08) shows that aggression is mediated by numerous variables other than testosterone, and that testosterone alone does not cause aggression, nor, by extension, crime.

People fear things they don’t understand, and if people were to truly understand the hormone, I’m sure the myths pushed by those who are scared of it would no longer persist. Low levels of testosterone are part of the cause of our fertility problems in the West. So is it logical to claim that high testosterone is for ‘savages’ when, clearly, high levels of testosterone are needed for spermatogenesis, which, in turn, would mean a higher birth rate? Anyone who believes that testosterone causes aggression and crime, and that injecting anabolic steroids causes ‘roid rage’, should read up on how the body produces the hormone, as well as on the literature on anabolic steroids. If one wants birth rates to increase in the West, then one must also want testosterone levels to increase, since the two are intimately linked.

Testosterone does not cause crime and there is no reason to fear the hormone.

Diet and Exercise: Don’t Do It? Part II

2300 words

In part II, we will look at the mental gymnastics of someone who is clueless about the data and will do whatever it takes to deny it. Well, shit doesn’t work like that, JayMan. I will review yet more studies on the effects of sitting, walking and dieting on mortality, as well as on behavioral therapy (BT) for obesity. JayMan has removed two of my comments, so I assume the discussion is over. Good thing I have a blog so I can respond here; censorship is never cool. JayMan pushes very dangerous ideas, and they need to be nipped in the bud before someone who could really benefit from lifestyle alterations takes this ‘advice’. Stop giving nutrition advice without credentials! It’s that simple.

JayMan published a new article, ‘The Five Laws of Behavioral Genetics’, with this little blip:

Indeed, we see this with health and lifestyle: people who exercise more have fewer/later health problems and live longer, so naturally conventional wisdom interprets this to mean that exercise leads to health and longer life, when in reality healthy people are driven to exercise and have better health due to their genes.

So, in JayMan’s world, diet and exercise have no substantial impact on health, quality of life and longevity? Too bad the data says otherwise. Consider this example:

Take a pair of twins. Lock both of them in a metabolic chamber and monitor them over their lives; neither ever leaves the chamber. They are fed different diets (one a high-carb diet full of processed foods, the other a healthy diet suited to whatever activity he does). One exercises vigorously and strength trains (not on the same day, though!) while the other does nothing, and the twin who exercises and eats well sits less often than the twin who eats a garbage diet and doesn’t exercise. What will happen?