
Tag Archives: HBD

Race Differences in Penis Size Revisited: Is Rushton’s r/K Theory of Race Differences in Penis Length Confirmed?

2050 words

In 1985 JP Rushton, psychology professor at the University of Western Ontario, published a paper arguing that r/K selection theory (which he termed Differential K theory) explained and predicted outcomes for what he termed the three main races of humanity: Mongoloids, Negroids and Caucasoids (Rushton, 1985; 1997). Since Rushton’s three races differed on a whole suite of traits, he reasoned that the more K-selected races (Caucasoids and Mongoloids) had slower reproduction times, lower time preference, higher IQ etc in comparison to the more r-selected Negroids, who had faster reproduction times, higher time preference, lower IQ etc (see Rushton, 1997 for a review; also see Van Lange, Rinderu, and Bushman, 2017 for a replication of Rushton’s data, not his theory). Were Rushton’s assertions on race and penis size verified, and do they lend credence to his Differential-K claims regarding human races?

Rushton’s so-called r/K continuum has a whole suite of traits on it, ranging from brain size to speed of maturation to reaction time and IQ; these data points supposedly lend credence to Rushton’s Differential-K theory of human differences. Penis size is, of course, important for Rushton’s theory because of what he said about it in interviews.

Rushton’s main reasoning for penis size differences between races is “You can’t have both”: if you have a larger brain then you must have a smaller penis, and if you have a smaller penis you must have a larger brain. He believed there was a “tradeoff” between brain size and penis size. In the book Darwin’s Athletes: How Sport Has Damaged Black America and Preserved the Myth of Race, Hoberman (1997: 312) quotes Rushton: “Even if you take something like athletic ability or sexuality—not to reinforce stereotypes or some such thing—but, you know, it’s a trade-off: more brain or more penis. You can’t have both.” This, though, is false. There is no evidence to suggest that this so-called ‘trade-off’ exists. One thing I have always wondered in my readings of Rushton’s work over the years is this: was Rushton implying that large penises take more energy to have, and that the trade-off exists because of this supposed relationship?

Andrew Joyce of the Occidental Observer published an article the other day in defense of Richard Lynn. Near the end of his article he writes:

Another tactic is to belittle an entire area of research by picking out a particularly counter-intuitive example that the public can be depended on to regard as ridiculous. A good example is J. Philippe Rushton’s claim, based on data he compiled for his classic Race, Evolution and Behavior, that average penis size varied between races in accord with the predictions of r/K theory. This claim was held up to ridicule by the likes of Richard Lewontin and other crusaders against race realism, and it is regularly presented in articles hostile to the race realist perspective. Richard Lynn’s response, as always, was to gather more data—from 113 populations. And unsurprisingly for those who keep up with this area of research, he found that indeed the data confirmed Rushton’s original claim.

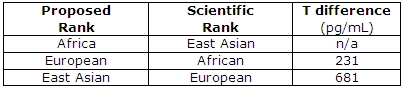

The claim was ridiculed because it was ridiculous. This paper by Lynn (2013), titled Rushton’s r-K life history theory of race differences in penis length and circumference examined in 113 populations, is the paper that supposedly verifies Rushton’s theory regarding race differences in penis size, along with one of its correlates in Rushton’s theory (testosterone). Lynn (2013) proclaims that East Asians are the most K-evolved, followed by Europeans, while Africans are the least K-evolved. This, according to Lynn, is the cause of the supposed racial differences in penis size.

Lynn (2013) begins by briefly discussing Rushton’s ‘findings’ on racial differences in penis size while also giving an overview of Rushton’s debunked r/K selection theory. He then discusses some of Rushton’s studies (which I will describe briefly below) along with stories from antiquity of the supposed larger penis size of African males.

Our old friend testosterone also makes an appearance in this paper. Lynn (2013: 262) writes:

Testosterone is a determinant of aggression (Book, Starzyk, & Quinsey, 2001; Brooks & Reddon, 1996; Dabbs, 2000). Hence, a reduction of aggression and sexual competitiveness between men in the colder climates would have been achieved by a reduction of testosterone, entailing the race differences in testosterone (Negroids > Caucasoids > Mongoloids) that are given in Lynn (1990). The reduction of testosterone had the effect of reducing penis length, for which evidence is given by Widodsky and Greene (1940).

Phew, there’s a lot to unpack here. (I discuss Lynn 1990 in this article.) Testosterone does not determine aggression; see my most recent article on testosterone (aggression increases testosterone; testosterone does not increase aggression). Book, Starzyk and Quinsey (2001) show a .14 correlation between testosterone and aggression, whereas Archer, Graham-Kevan, and Davies (2005) show the correlation is .08. These are small correlations, and a correlation by itself says nothing about the direction of causation. Sapolsky (1997: 113) writes:

Okay, suppose you note a correlation between levels of aggression and levels of testosterone among these normal males. This could be because (a) testosterone elevates aggression; (b) aggression elevates testosterone secretion; (c) neither causes the other. There’s a huge bias to assume option a while b is the answer. Study after study has shown that when you examine testosterone when males are first placed together in the social group, testosterone levels predict nothing about who is going to be aggressive. The subsequent behavioral differences drive the hormonal changes, not the other way around.

Brooks and Reddon (1996) also show only relationships between testosterone and aggressive acts; they show no causation. The same relationship was noted by Dabbs (2000; another Lynn 2013 citation) in prisoners. More violent prisoners were seen to have higher testosterone, but there is a caveat here too: being aggressive stimulates testosterone production, so of course they had higher levels of testosterone; this is not evidence that testosterone causes aggression.
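To put the two correlations cited above in perspective: squaring a correlation coefficient gives the proportion of variance in one variable that is statistically associated with the other. A quick worked calculation, assuming the reported figures can be read as ordinary Pearson-type correlations (an assumption on my part; the meta-analyses may report other effect-size metrics):

$$
\begin{aligned}
r = 0.14 &\;\Rightarrow\; r^2 = 0.0196 \approx 2\% \text{ of the variance in aggression} \quad \text{(Book, Starzyk and Quinsey, 2001)} \\
r = 0.08 &\;\Rightarrow\; r^2 = 0.0064 \approx 0.6\% \text{ of the variance in aggression} \quad \text{(Archer, Graham-Kevan, and Davies, 2005)}
\end{aligned}
$$

Even taking the larger figure at face value, roughly 98 percent of the variance in aggression is left unaccounted for, and neither figure says anything about which way causation runs.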

Another problem with that paragraph from Lynn (2013) is that it’s a just-so story: an ad-hoc explanation. You notice something in the data you have today and then imagine a nice-sounding story to explain it in an evolutionary context. Nice-sounding stories are cool and all, and I’m sure everyone loves a nicely told story, but when it comes to evolutionary theory I’d like theories that can be verified independently of the data they’re trying to explain.

My last problem with that paragraph from Lynn (2013) is his final citation: he cites it as evidence that a reduction of testosterone affects penis length, but his citation (Widodsky and Greene, 1940) is a study on rats. While animal studies can give us a wealth of information regarding our physiologic systems (at least showing us which avenues to pursue; see my previous article on myostatin), they don’t really mean anything for humans, especially this study on the application of testosterone to the penis of a rat. The fatal flaw in these assertions is this: would, say, a 5 percent difference in testosterone lead to a larger penis, as if there were a dose-response relationship between testosterone and penis length? It doesn’t make any sense.

Lynn (2013), though, says that Rushton’s theory doesn’t propose a direct causal relationship between “intelligence” and penis length, only that the two co-evolved: when Homo sapiens migrated north out of Africa, they needed to cooperate more, so selection for lower levels of testosterone occurred, which then shrank the penises of Rushton’s Caucasian and Mongoloid races.

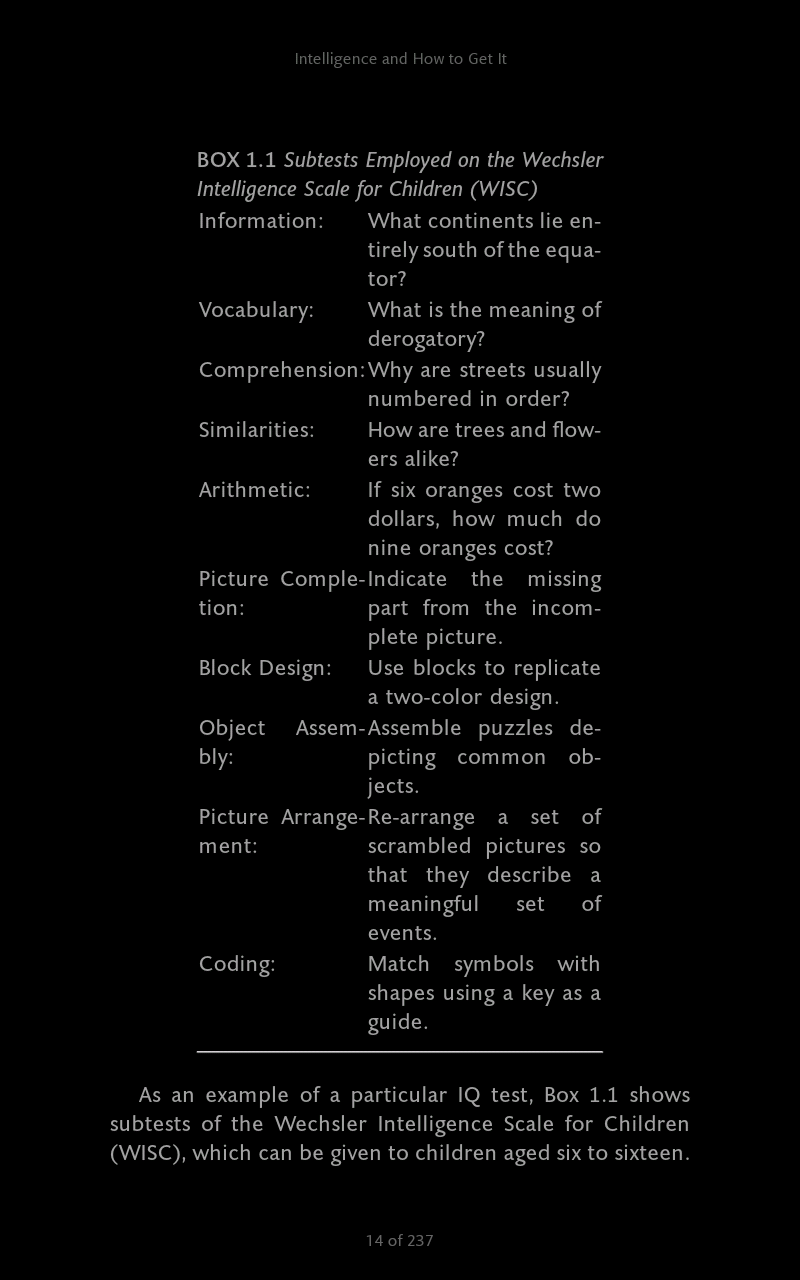

Lynn (2013) then discusses two “new datasets”, one of which is apparently in Donald Templer’s book Is Size Important? (which is on my to-read list; so many books, so little time). Table 1 is Lynn’s reproduction of Templer’s ‘work’ from that book.

The second “dataset” is extremely dubious. Lynn (2013) attempts to dress it up, writing that “The information in this website has been collated from data obtained by research centres and reports worldwide.” Ethnicmuse has a good article on the pitfalls of Lynn’s (2013) article. (Also read Scott McGreal’s rebuttal.)

Rushton attempted to link race and penis size for 30 years. In a paper with Bogaert (Rushton and Bogaert, 1987), they attempted to show that blacks had larger penises than whites, who had longer penises than Asians, which then supposedly verified one dimension of Rushton’s theory. Rushton (1988) also discusses race differences in penis size, citing that earlier paper with Bogaert, in which they use data from Alfred Kinsey; but these data are nonrepresentative and nonrandom (see Zuckerman and Brody, 1988 and Weizmann et al, 1990: 8).

Still others may attempt to use supposed differences in IGF-1 (insulin-like growth factor 1) as evidence that there is, at least, physiological evidence for the claim that black men have larger penises than white men, though I discussed that back in December of 2016 and found it strongly lacking.

Rushton (1997: 182) shows a table of racial differences in penis size which was supposedly collected by the WHO (World Health Organization). A closer look, though, shows this is not true. Ethnicmuse writes:

ANALYSIS: The WHO did not study penis sizes. It relied on three separate studies, two of which were not peer-reviewed and the data was included as “Appendix III” (which should have alerted Rushton that this was not an original study). The first study references Africans in the US (not Africa!) and Europeans in the US (not Europe!), the second Europeans in Australia (not Europe!) and the third, Thais.

So it seems to be bullshit all the way down.

Ajmani et al (1985) found that 385 healthy Nigerians had an average flaccid penile length of 3.21 inches. Orakwe and Ebuh (2007) show that while Nigerians had longer penises than the other ethnic groups tested, the only statistically significant difference was between them and Koreans. And Veale et al (2014: 983) write that “There are no indications of differences in racial variability in our present study, e.g. the study from Nigeria was not a positive outlier.”

Lynn and Dutton have attempted to use androgen differentials between the races as evidence for racial differences in penis size (another attempt at a physiological argument for the existence of racial differences in penis size). Edward Dutton attempted to revive the debate on racial differences in penis size in a 2015 presentation where he, again, claimed that Negroids have higher levels of testosterone than Caucasoids, who in turn have higher levels of androgens than Mongoloids. These claims, though, have been rebutted by Scott McGreal, who showed that population differences in androgen levels are meaningless and, in any case, fail to validate Rushton and Lynn’s claims on racial differences in penis size.

Finally, it was reported the other day that, according to Zimbabwe’s health minister, condoms from China were too small for Zimbabwean men. This led Kevin MacDonald to proclaim that this was “More corroboration of race differences in penis size which was part of the data Philippe Rushton used in his theory of r/K selection (along with brain size, maturation rates, IQ, etc.)” This isn’t “more corroboration” for Rushton’s long-dead theory, nor is it evidence that blacks have longer penises. I don’t understand why people make such broad and sweeping generalizations. One country in Africa complained that condoms from a country in East Asia were too small, and therefore this is more corroboration for Rushton’s r/K selection theory? The logic doesn’t follow.

China makes small condoms. Those condoms go to Africa. Africans complain that the condoms from China are too small. Therefore Rushton’s r/K selection theory is corroborated. Flawed logic.

In sum, Lynn (2013) didn’t verify Rushton’s theory regarding racial differences in penis size, and I find it even funnier that Lynn ends his article talking about “falsification,” stating that this aspect of Rushton’s theory has survived two attempts at falsification and can therefore be regarded as a “progressive research program”; obviously, though, with the highly flawed “data” that was used, one cannot rationally make that statement. Supposed hormonal differences between the races do not cause penis size differences; even if blacks had levels of testosterone significantly higher than whites (the 19 percent that Lynn and Rushton claim on the basis of one highly flawed study, Ross et al, 1986), they still would not have longer penises.

The study of physical differences between populations is important, but sometimes stereotypes tell you nothing, and this is one of those cases. The claim that blacks have the longest penises rests on shaky ground, and the evidence we do have does not license the inference (especially not Lynn’s (2013) flimsy data). Richard Lynn did not “confirm” anything with this paper; the only things he “confirmed” were his own preconceived notions. He did not ‘prove’ what he set out to.

Minimalist Races Exist and are Biologically Real

3050 words

People look different depending on where their ancestors came from; this is not a controversial statement, and any reasonable person would agree with it. What most don’t realize, though, is that even if you deny that biological races exist, but allow for patterns of distinct visible physical features between human populations that correspond with geographic ancestry, then race, as a biological reality, exists: the physical characters in question are biological in nature, and geographic ancestry corresponds to physical differences between continental groups. These populations can also be shown to be real in genetic analyses, and they correspond to traditional racial groups. So we can say that sub-Saharan Africans, Eurasians, East Asians, Oceanians, and Native Americans are continental-level minimalist races, since they meet all of the criteria needed to be called minimalist races: (1) distinct facial characters; (2) distinct morphologic differences; and (3) a unique geographic origin. Therefore minimalist races exist and are a biological reality. (Note: There is more variation within races than between them (Lewontin, 1972; Rosenberg et al, 2002; Witherspoon et al, 2007; Hunley, Cabana, and Long, 2016), but this does not mean that the minimalist biological concept of race has no grounding in biology.)

Minimalist race exists

The concept of minimalist race is simple: people share a distinctive geographic ancestry, distinctive physiognomy (facial features like lips, facial structure, eyes, nose etc), other physical traits (hair/hair color), and a distinctive morphology. Minimalist races exist and are biologically real, since the concept survives the findings of population genetics. Hardimon (2017: 62) asks, “Is the minimalist concept of race a social concept?” He writes that a concept is socially constructed in the pernicious sense if and only if it “(i) fails to represent any fact of the matter and (ii) supports and legitimizes domination.” Populations that derive from Africa, Europe, and East Asia do have facial features and morphology distinctive to each population, so the minimalist concept represents a fact of the matter and does not satisfy condition (i). Hardimon (2017: 63) then writes:

Because it lacks the nasty features that make the racialist concept of race well suited to support and legitimize domination, the minimalist race concept fails to satisfy condition (ii). The racialist concept, on the other hand, is socially constructed in the pernicious sense. Since there are no racialist races, there are no facts of the matter it represents. So it satisfies (i). To elaborate, the racialist race concept legitimizes racial domination by representing the social hierarchy of race as “natural” (in a value-conferring sense): as the “natural” (socially unmediated and inevitable) expression of the talent and efforts of the individuals who stand on its rungs. It supports racial domination by conveying the idea that no alternative arrangement of social institutions could possibly result in racial equality and hence that attempts to engage in collective action in the hopes of ending the social hierarchy of race are futile. For these reasons the racialist race concept is also ideological in the pejorative sense.

Knowing what we know about minimalist races (they have distinct physiognomy, distinct morphology, and a geographic ancestry unique to each population), we can say that this is a biological phenomenon, since what makes minimalist races distinct from one another (skin color, hair color etc) is based on biological factors. We can say that brown skin, kinky hair, and full lips, combined with sub-Saharan African ancestry, mark an individual as African, while pale/light skin, straight/wavy/curly hair, thin lips, a narrow nose, and European ancestry mark the individual as European.

These physical features correspond to differences in geographic ancestry, and since they differ between the races on average and are biological in nature, race can be said to be a biological phenomenon. Skin color, nose shape, hair type, morphology etc are all biological. Knowing that these physical differences between populations have a biological basis, we can say that minimalist races are biological. We can therefore speak of the minimalist biological phenomenon of race, which exists because there are differences in the patterns of visible physical features between human populations that correspond to geographic ancestry.

Hardimon then discusses how eliminativist philosophers and others do not deny the premises above about the minimalist biological phenomenon of race; they allow that it exists. Hardimon (2017: 68-69) then quotes a few prominent people who acknowledge that there are, of course, differences in physical features between human populations:

… Lewontin … who denies that biological races exist, freely grants that “peoples who have occupied major geographic areas for much of the recent past look different from one another. Sub-Saharan Africans have dark skin and people who have lived in East Asia tend to have a light tan skin and an eye color and eye shape that is different from Europeans.” Similarly, population geneticist Marcus W. Feldman (final author of Rosenberg et al., “Genetic Structure of Human Populations” [2002]), who also denies the existence of biological races, acknowledges that “it has been known for centuries that certain physical features of humans are concentrated within families: hair, eye, and skin color, height, inability to digest milk, curliness of hair, and so on. These phenotypes also show obvious variation among people from different continents. Indeed, skin color, facial shape, and hair are examples of phenotypes whose variation among populations from different regions is noticeable.” In the same vein, eliminative anthropologist C. Loring Brace concedes, “It is perfectly true that long term residents of various parts of the world have patterns of features that we can identify as characteristic of the area from which they come.”

So even those who claim not to believe in “biological races” do indeed believe in them, because what they describe is biological in nature and they do not deny that people whose ancestors came from different places look different. We can then use the minimalist biological phenomenon of race to get to the existence of minimalist races.

Hardimon (2017: 69) writes:

Step 1. Recognize that there are differences in patterns of visible physical features of human beings that correspond to their differences in geographic ancestry.

Step 2. Observe that these patterns are exhibited by groups (that is, real existing groups).

Step 3. Note that the groups that exhibit these patterns of visible physical features that correspond to differences in geographical ancestry satisfy the conditions of the minimalist concept of race.

Step 4. Infer that minimalist race exists.

Those mentioned previously who deny biological races but allow that people with ancestors from different geographic locales look different do not disagree with step 1, nor does anyone really disagree with step 2. Step 4’s inference flows immediately from the premise in step 3. “Groups that exhibit patterns of visible physical features that correspond to differences in geographical ancestry satisfy the conditions of the minimalist concept of race. Call (1)-(4) the argument from the minimalist biological phenomenon of race” (Hardimon, 2017: 70). The argument does not identify which populations may be called races (see further below); it just shows that race is a biological reality. If minimalist races exist, then races exist, because minimalist races are races. Minimalist races exist, therefore biological races exist. No one doubts that people come from Europe, sub-Saharan Africa, East Asia, the Americas, and the Pacific Islands, even though the boundaries between these regions are ‘blurry’. These people exhibit patterns of visible physical characters that correspond to their differing geographic ancestry; they are therefore minimalist races, and so minimalist races exist.

In short, the minimalist concept of race just lays out what everyone already knows and argues that what it describes exists. Minimalist races exist, but are they biologically real?

Minimalist races are biologically real

Some who assert that minimalist races do not exist would say that there are no ‘genes’ exclusive to one population (call them ‘race genes’). Such genes do not, of course, exist. But whether an individual belongs to a given race does not rest on his physical characters; it is determined by who his parents are, because one of the three conditions of the minimalist race concept is a ‘peculiar geographic ancestry’. So it is not that members of a race share sets of genes that other races lack; it is that they share a distinctive set of visible physical features that correspond with geographic ancestry. So if the minimalist concept of race is a biological concept, it is about more than ‘genes for’ races.

There is, of course, a biological significance to the existence of minimalist biological races. Consider that one of the physical characters that differ between populations is skin color. Skin color is controlled by genes (about half a dozen within and a dozen between populations). Insufficient UV exposure in individuals with dark skin is thought to raise the risk of diseases like prostate cancer, while darker skin protects against UV damage to human skin (Brenner and Hearing, 2008; Jablonski and Chaplin, 2010). Since skin color has both medical and ecological significance, and minimalist races are partly defined by differences in skin color between populations, minimalist race is biologically significant.

(1) Consider light skin. People with light skin are more susceptible to skin cancer, since their ancestors evolved in locations with low UV radiation (D’Orazio et al, 2013). The body needs vitamin D to absorb and use calcium and to maintain proper cell functioning. People who evolved near the equator do not have to worry about this, because the doses of UVB they absorb are sufficient for the production of enough previtamin D. East Asians and Europeans, on the other hand, became adapted to low-sunlight locations and over time evolved lighter skin. This loss of pigmentation allowed for better UVB absorption in these new environments. (Also read my article on the evolution of human skin variation and also how skin color is not a ‘tell’ of aggression in humans.)

(2) Darker-skinned people, meanwhile, have a lower rate of skin cancer, “primarily a result of photo-protection provided by increased epidermal melanin, which filters twice as much ultraviolet (UV) radiation as does that in the epidermis of Caucasians” (Bradford, 2009). Dark skin is thought to have evolved to protect against skin cancer (Greaves, 2014a), but this has been contested (Jablonski and Chaplin, 2014) and defended (Greaves, 2014b). Therefore, taking (1) and (2) together, skin color has evolutionary significance.

As humans physically adapted to the new niches they found themselves in, they developed features distinct from those of the populations they came from, to better cope with their new environments. For instance “Northern Europeans tend to have light skin because they belong to a morphologically marked ancestral group—a minimalist race—that was subject to one set of environmental conditions (low UVR) in Europe” (Hardimon, 2017: 81). Explaining how human beings survived in new locations falls within the realm of biology, and minimalist races can help explain why this happened.

Minimalist races clearly exist, since they constitute complex biological patterns among populations. Hardimon (2017: 83) writes:

It [minimalist race] also enjoys intrinsic scientific interest because it represents a distinctive salient systematic dimension of human biological diversity. To clarify: Minimalist race counts as (i) salient because human differences of color and shape are striking. Racial differences in color and shape are (ii) systematic in that they correspond to differences in geographic ancestry. They are not random. Racial differences are (iii) distinctive in that they are different from the sort of biological differences associated with the other two salient systematic dimensions of human diversity: sex and age.

[…]

An additional consideration: Like sex and age, minimalist race constitutes one member of what might be called “the triumvirate of human biodiversity.” An account of human biodiversity that failed to include any one of these three elements would be obviously incomplete. Minimalist race’s claim to be biologically real is as good as the claim of the other members of the triumvirate. Sex is biologically real. Age is biologically real. Minimalist race is biologically real.

Real does not mean deep. Compared to the biological differences associated with sex (sex as contrasted with gender), the biological differences associated with minimalist race are superficial.

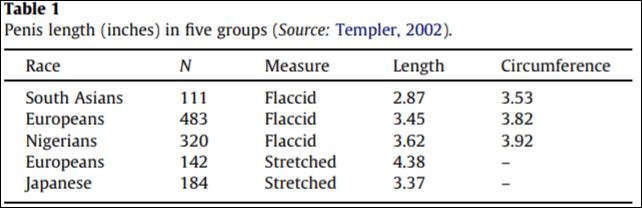

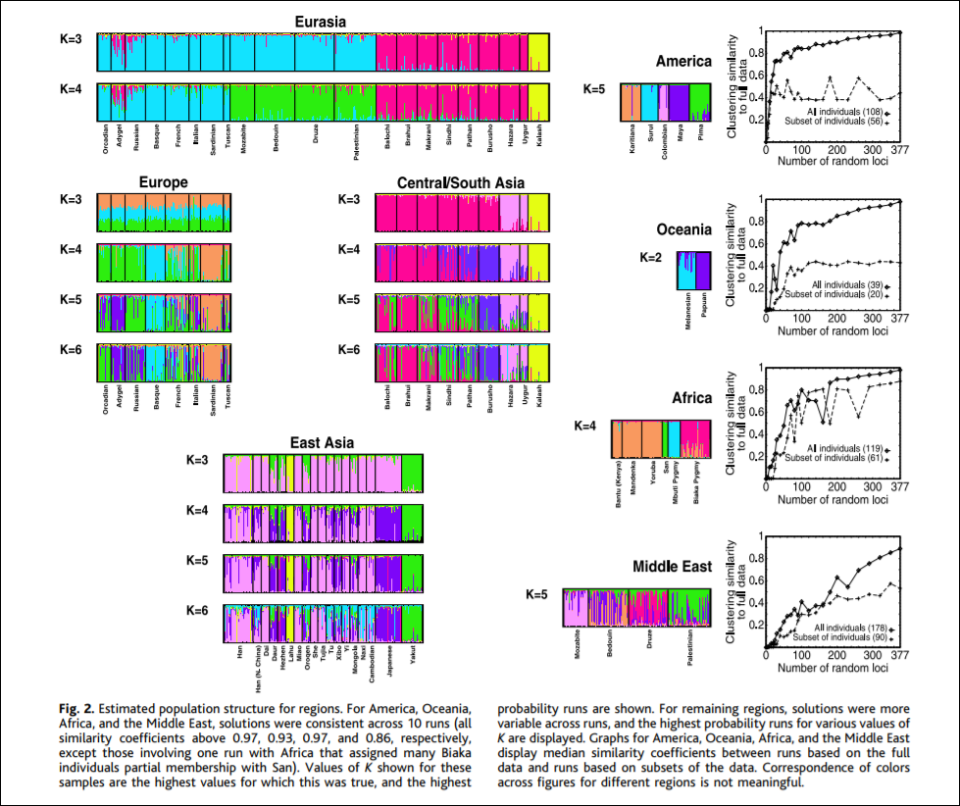

The five ‘clusters’ and ‘populations’ identified in Rosenberg et al’s (2002) K=5 graph (K=5 tells the program structure to produce 5 genetic clusters) correspond to Eurasia, Africa, East Asia, Oceania, and the Americas. They are great candidates for minimalist biological races, since they correspond to geographic locations, and they even corroborate what Johann Friedrich Blumenbach said about human races back in the 18th century. Hardimon (2017: 85-86) further writes:

If the five populations corresponding to the major areas are continental-level minimalist races, the clusters represent continental-level minimalist races: The cluster in the mostly orange segment represents the sub-Saharan African continental-level minimalist race. The cluster in the mostly blue segment represents the Eurasian continental-level minimalist race. The cluster in the mostly pink segment represents the East Asian continental-level minimalist race. The cluster in the mostly green segment represents the Pacific Islander continental-level minimalist race. And the cluster in the mostly purple segment represents the American continental-level minimalist race.

[…]

The assumption that the five populations are continental-level minimalist races entitles us to interpret structure as having the capacity to assign individuals to continental-level minimalist races on the basis of markers that track ancestry. In constructing clusters corresponding to the five continental-level minimalist races on the basis of objective, race-neutral genetic markers, structure essentially “reconstructs” those races on the basis of a race-blind procedure. Modulo our assumption, the article shows that it is possible to assign individuals to continental-level races without knowing anything about the race or ancestry of the individuals from whose genotypes the microsatellites are drawn. The populations studied were “defined by geography, language, and culture,” not skin color or “race.”

As critics note, the researchers predetermine how many clusters structure demarcates; K=5, for instance, indicates that the researchers told the program to delineate 5 clusters. These objections, though, do not matter, for the 5 populations that come out in K=5 “are genetically structured … which is to say, meaningfully demarcated solely on the basis of genetic markers” (Hardimon, 2017: 88). K=6 brings out one more population, the Kalash, a group from northern Pakistan who speak an Indo-European language. But, as Hardimon notes, “The fact that structure represents a population as genetically distinct does not entail that the population is a race. Nor is the idea that populations corresponding to the five major geographic areas are minimalist races undercut by the fact that structure picks out the Kalash as a genetically distinct group. Like the K=5 graph, the K=6 graph shows that modulo our assumption, continental-level races are genetically structured” (Hardimon, 2017: 88).

There are, of course, naysayers. Svante Pääbo and David Serre, Hardimon writes, state that when individuals are sampled homogeneously from around the world, the allele frequencies form gradients across the world rather than discrete clusters. Rosenberg et al responded by showing that the clusters they found are not artifacts of sampling, as Pääbo and Serre imply, but reflect features of underlying human variation, though Rosenberg et al agree with Pääbo and Serre that human genetic diversity consists of clines of variation in allele frequencies (Hardimon, 2017: 89). Other naysayers state that all Rosenberg et al show is what we can “see with our eyes”. But a computer does not partition individuals into populations on the basis of anything that can be seen with the eyes; it does so by applying an algorithm to genetic markers.

Hardimon also accepts that black Africans, Caucasians, East Asians, American Indians and Oceanians can be said to be races in the basic sense because “they constitute a partition of the human species”, and that they are distinguishable “at the level of the gene” (Hardimon, 2017: 93). And of course, K=5 shows that the 5 races are genetically distinguishable.

Hardimon finally discusses the medical significance of minimalist races. He states that if you are Caucasian, you are more likely than members of other races to carry a polymorphism that protects against HIV. Meanwhile, East Asians are more likely to carry alleles that make them more susceptible to Stevens-Johnson syndrome or a related condition in which the skin sloughs off. The instances where this would matter in a biomedical context are rare, but they should still be at the back of everyone’s mind (as I have argued): rare as they are, there are times when one’s race can be medically significant.

Hardimon concludes that this type of “metaphysics of biological race” can be called “deflationary realism.” It is deflationary because it “consists in the repudiation of the ideas that racialist races exist and that race enjoys the kind of biological reality that racialist race was supposed to have”, and realist because it “consists in its acknowledgement of the existence of minimalist races and the genetically grounded, relatively superficial, but still significant biological reality of minimalist race” (Hardimon, 2017: 95-96).

Conclusion

Minimalist races exist. They are a biological reality because distinct visible patterns distinguish geographically isolated populations. This is enough for the five classic races to be called races, to be biologically real, and to have medical significance, however small, because certain biological and physical traits are tied to different geographic populations: minimalist races.

Hardimon (2017: 97) shows an alternative to racialism:

Deflationary realism provides a worked-out alternative to racialism—it is a theory that represents race as a genetically grounded, relatively superficial biological reality that is not normatively important in itself. Deflationary realism makes it possible to rethink race. It offers the promise of freeing ourselves, if only imperfectly, from the racialist background conception of race.

It is clear that minimalist races exist and are biologically real. You do not need to speak about supposed mental differences between these minimalist races; they are irrelevant to the existence of minimalist biological races. As Hardimon (2017: 67) writes: “No reference is made to normatively important features such as intelligence, sexuality, or morality. No reference is made to essences. The idea of sharp boundaries between patterns of visible physical features or corresponding geographical regions is not invoked. Nor again is reference made to the idea of significant genetic differences. No reference is made to groups that exhibit patterns of visible physical features that correspond to geographic ancestry.”

The minimalist biological concept of race stands up to numerous lines of argumentation; therefore we can say without a shadow of a doubt that minimalist biological race exists and is real.

Do pigmentation and the melanocortin system modulate aggression and sexuality in humans as they do in other animals? A Response to Rushton and Templer (2012)

2100 words

Rushton et al have kept me pretty busy over the last year or so. I’ve debunked many of their claims that rest on biology, such as testosterone causing crime and aggression. The last paper Rushton published before he died in October 2012 was an article with Donald Templer, another psychologist, titled Do pigmentation and the melanocortin system modulate aggression and sexuality in humans as they do in other animals? (Rushton and Templer, 2012), and in it they make a surfeit of bold claims that do not follow. They review animal studies on skin and fur pigmentation showing that the darker an animal’s skin or fur, the more likely it is to be aggressive and violent. They then conclude, of course (it wouldn’t be a Rushton article without it), that the long-debunked r/K ‘continuum’ explains the co-variation between human populations in birth rate, longevity, violent crime, infant mortality, and rate of acquisition of AIDS/HIV.

In one of the very first articles I wrote on this site, I cited Rushton and Templer (2012) favorably (back when I knew far less about biology and hormones). I was swayed by my biases and by my ignorance of what was being discussed. As I learned more about biology and hormones over the years, I came to see that the claims in the paper are wrong and that the authors draw huge, sweeping conclusions from a few correlations. Either way, I have seen the error of my ways and the biases that led me to the beliefs I held, and once I learned more about hormones and biology I saw how ridiculous some of the papers I have cited in the past truly were.

Rushton and Templer (2012) start off the paper by discussing Ducrest et al (2008), who state that within each species studied, darker-pigmented individuals exhibited higher rates of aggression, sexuality and social dominance (which is caused by testosterone) than lighter-pigmented individuals of the same species. They state that this is due to pleiotropy, when a single gene has two or more phenotypic effects. They then cite Rushton and Jensen (2005) for the claim that low IQ is correlated with skin color (skin color doesn’t cause IQ, obviously).

They then state that in 40 vertebrate species, the darker-pigmented members within each species had higher levels of aggression and sexual activity, along with larger body size and better stress resistance, and were more physically active while grooming (Ducrest, Keller, and Roulin, 2008). Rushton and Templer (2012) then state that this relationship was ‘robust’ across numerous species, specifically 36 species of birds, 4 species of fish, 3 species of mammals, and 4 species of reptiles.

Rushton and Templer (2012) then state that “Validation of the pigmentation system as causal to the naturalistic observations was demonstrated by experimentally manipulating pharmacological dosages and by studies of cross-fostering”, citing Ducrest, Keller, and Roulin (2008). They even state that “Placing darker versus lighter pigmented individuals with adoptive parents of the opposite pigmentation did not modify offspring behavior.” Seems legit. Must mean that their pigmentation caused these differences. They then state something patently ridiculous: “The genes that control that balance occupy a high level in the hierarchical system of the genome.” Unfortunately for their hypothesis, there is no privileged level of causation (Noble, 2016; also see Noble, 2008), so this is a nonsense claim. Genes are not ‘blueprints’ or ‘recipes’ (Oyama, 1985; Schneider, 2007).

They then refer to Ducrest, Keller and Roulin (2008: 507) who write:

In this respect, it is important to note that variation in melanin-based coloration between human populations is primarily due to mutations at, for example, MC1R, TYR, MATP and SLC24A5 [29,30] and that human populations are therefore not expected to consistently exhibit the associations between melanin-based coloration and the physiological and behavioural traits reported in our study.

Rushton and Templer (2012), however, seem to ignore this quote throughout the rest of their article: even though they briefly mention the paper and note that one should be ‘cautious’ in interpreting its data, they seem to sweep the warning under the rug so they do not have to contend with it. Rushton and Templer (2012) then cite the famous silver fox study, in which foxes were bred for tameness. The tame foxes lost their dark fur, became lighter and, apparently, were less aggressive than their darker-pigmented kin. These animal studies are, in my view, useless for relating skin color and the melanocortin system to the modulation of aggressive behavior, so let’s see what they write about human studies.

It’s funny, because Rushton and Templer (2012) cite Ducrest, Keller, and Roulin (2008: 507) to show that caution should be exercised when assessing any so-called differences in the melanocortin system between human races. They then disregard that warning by writing “A first examination of whether melanin based pigmentation plays a role in human aggression and sexuality (as seen in non-human animals), is to compare people of African descent with those of European descent and observe whether darker skinned individuals average higher levels of aggression and sexuality (with violent crime the main indicator of aggression).” This is a dumb comparison. Yes, African nations have higher crime rates than European nations, but does this mean that skin color (or whatever modulates skin color and the melanocortin system) is the cause? No. Not at all.

There really isn’t much to discuss here, though, because they just run through how various African nations have higher levels of crime than European and East Asian nations and how blacks report having more sex and feeling less guilty about it. Rushton and Templer (2012) then state that one study “asked married couples how often they had sex each week. Pacific Islanders and Native Americans said from 1 to 4 times, US Whites answered 2–4 times, while Africans said 3 to over 10 times.” They then switch over to their ‘replication’ of this finding, using the data from Alfred Kinsey (Rushton and Bogaert, 1988). Unfortunately for Rushton and Bogaert, there are massive problems with this data.

The Kinsey data can hardly be seen as representative (Zuckerman and Brody, 1988), and they are based on outdated, non-representative, non-random samples (Lynn, 1989). Rushton and Templer (2012) also discuss so-called differences in penis size between races. But I have written two response articles on the matter and shown that Rushton used shoddy sources, such as a French Army surgeon who contradicts himself: “Similarly, while the French Army surgeon announces on p. 56 that he once discovered a 12-inch penis, an organ of that size becomes “far from rare” on p. 243. As one might presume from such a work, there is no indication of the statistical procedures used to compute averages, what terms such as “often” mean, how subjects were selected, how measurements were made, what the sample sizes were, etc” (Weizmann et al, 1990: 8).

Rushton and Templer (2012) invoke, of course, Rushton’s (1985; 1995) r/K selection theory as applied to human races. I have written numerous articles on r/K selection and attempts at reviving it, but it is long dead, especially as a way to describe human populations (Anderson, 1991; Graves, 2002). The theory was refuted in the late 70s (Graves, 2002) and replaced with age-specific mortality (Reznick et al, 2002). Some of his larger claims I will cover in the future (like how r/K relates to criminal activity), but here he just goes through the same old motions he has been going through for years, bringing nothing new to the table. In all honesty, testosterone is one of the pillars of Rushton’s r/K selection theory (e.g., Lynn, 1990; Rushton, 1997; Rushton, 1999; Hart, 2007; Ellis, 2017; I have made extensive arguments against Ellis, 2017 elsewhere). If testosterone doesn’t do what he believes it does, and racial differences in testosterone levels are smaller than believed or nonexistent (Gapstur et al, 2002, which I have discussed previously; Rohrmann et al, 2007; Richard et al, 2014; though see Mazur, 2016 and my interpretation of that paper), then we can safely disregard their claims.

Rushton and Templer (2012: 6) write:

Another is that Blacks have the most testosterone (Ellis & Nyborg, 1992), which helps to explain their higher levels of athletic ability (Entine, 2000).

As I have said many times in the past, Ellis and Nyborg (1992) found a 3 percent difference in testosterone levels between white and black ex-military men. This is irrelevant. Rushton then cites Jon Entine’s (2000) book Taboo: Why Black Athletes Dominate Sports and Why We’re Afraid to Talk About It, but this doesn’t make sense, because Entine himself cites Rushton, who cites Ellis and Nyborg (1992) and Ross et al (1986) (stating that blacks have 3-19 percent higher levels of testosterone than whites, citing Ross et al’s 1986 uncorrected numbers). I have specifically pointed out numerous flaws in their analysis, and so Ross et al (1986) cannot seriously be used as evidence for large testosterone differences between the races. I have also cited Fish (2013), who wrote about Ellis and Nyborg (1992):

“These uncorrected figures are, of course, not consistent with their racial r- and K-continuum.”

Rushton and Templer (2012) then state that testosterone acts like a ‘master switch’ (Rushton, 1999), implicating testosterone as a cause of aggression, though I’ve shown that this is not true: aggression drives testosterone production, and testosterone doesn’t cause aggression. Testosterone does control muscle mass, of course. Rushton also claims that blacks have deeper voices due to higher levels of testosterone, but this claim does not hold up in newer studies.

Rushton and Templer (2012) then shift gears to discuss Templer and Arikawa’s (2006) study on the correlation between skin color and ‘IQ’. However, there is something important to note here from Razib:

we know the genetic architecture of pigmentation. that is, we know all the genes (~10, usually less than 6 in pairwise between population comparisons). skin color varies via a small number of large effect trait loci. in contrast, I.Q. varies by a huge number of small effect loci. so logically the correlation is obviously just a correlation. to give you an example, SLC45A2 explains 25-40% of the variance between africans and europeans.

long story short: it’s stupid to keep repeating the correlation between skin color and I.Q. as if it’s a novel genetic story. it’s not. i hope don’t have to keep repeating this for too many years.

Rushton and Templer (2012: 7) conclude:

The melanocortin system is a physiological coordinator of pigmentation and life history traits. Skin color provides an important marker placing hormonal mediators such as testosterone in broader perspective.

I don’t have a problem with the claim that the melanocortin system is a physiological coordinator of pigmentation, because it’s true and we have a great understanding of the physiology behind the melanocortin system (see Cone, 2006 for a review). EvolutionistX also has a great article reviewing some studies (mouse studies and some others) showing that increasing melatonin appears to decrease melanin.

Rushton and Templer (2012) make huge assumptions not warranted by any data. For instance, Rushton states in his VDare article on the subject, J. Philippe Rushton Says Color May Be More Than Skin Deep, “But what about humans? Despite all the evidence on color, aggression, and sexuality in animals, there has been little or no discussion of the relationship in people. Ducrest & Co. even warned that genetic mutations may make human populations not exhibit coloration effects as consistently as other species. But they provided no evidence.” All Rushton and Templer (2012) do in their article is restate known relationships between crime and race and then attempt to implicate the melanocortin system as a factor driving those relationships, based solely on a slew of animal studies. Moreover, the claim that Ducrest, Keller, and Roulin (2008: 507) provide no evidence for their warning is incorrect, because the warning itself rests on this observation: “In this respect, it is important to note that variation in melanin-based coloration between human populations is primarily due to mutations at, for example, MC1R, TYR, MATP and SLC24A5 [29,30]. . .” Melanin does not cause aggression, and it does not cause crime. Rushton and Templer simply assume too many things with no evidence in humans, while their whole hypothesis is built around a bunch of animal studies.

In conclusion, it seems that Rushton and Templer don’t know anything about the physiology of the melanocortin system if they believe that pigmentation and the melanocortin system modulate aggression and sexual behavior in humans. I know of no evidence for these assertions by Rushton and Templer (2012): there are no human studies, only their restated relationships with crime and the assertion that, because similar relationships are seen in animals, the melanocortin system must modulate them in humans too. That they think restating relationships between crime and race, country of origin and race, supposed correlations between testosterone and crime, and blacks supposedly having higher testosterone than whites, among other things, shows that these relationships are caused by the melanocortin system and explained by Life History Theory reveals their ignorance of human physiology.

Height, Longevity, and Aging

1700 words

Humans reach their maximum height at around their mid-20s. It is commonly thought that taller people have better life outcomes and are in general healthier. This belief, though, stems from misconceptions about the human body. In reality, shorter people live longer than taller people. (Manlets of the world should be rejoicing; in case anyone is wondering, I am 5’10”.) This flies in the face of what people think and may be counter-intuitive to some, but the logic and the data are sound. I will touch on mortality differences between tall and short people and at the end talk a bit about shrinking with age (and why a study suggesting there is little or no decrease in height with age, based on self-reports, is flawed).

One reason for the misconception that taller people live longer, healthier lives than shorter people is the correlation between height and IQ. People assume the two traits are ‘similar’ in that both become ‘stable’ at adulthood, but one way to explain the relationship is that higher-SES people can afford better food and are thus better nourished, which raises both height and IQ. Either way, it is a myth that taller people have lower rates of all-cause mortality.

The truth of the matter is this: smaller bodies live longer lives, and this is seen both in the animal kingdom and in humans; larger body size independently increases mortality (Samaras and Elrick, 2002). They discuss numerous lines of evidence, from human to animal studies, and show that smaller bodies have a lower chance of all-cause mortality. One of the reasons is that larger bodies have more cells, which are in turn more exposed to carcinogens and so have higher rates of cancer, which then raises mortality rates. Samaras (2012) reviews the implications of this in another paper and proposes other causes for the observation: reduced cell damage, lower DNA damage, and lower cancer incidence in shorter people, along with hormonal differences between tall and short people that explain more of the variation between them.

One study found a positive linear correlation between height and cancer mortality. Lee et al (2009) write:

A positive linear association was observed between height and cancer mortality. For each standard deviation greater height, the risk of cancer was increased by 5% (2–8%) and 9% (5–14%) in men and women, respectively.

One study suggests that “variations in adult height (and, by implication, the genetic and other determinants of height) have pleiotropic effects on several major adult-onset diseases” (The Emerging Risk Factors Collaboration, 2012). Taller people are also at greater risk of heart attack (Samaras, 2013). Samaras lists several advantages of shorter bodies, “including reduced telomere shortening, lower atrial fibrillation, higher heart pumping efficiency, lower DNA damage, lower risk of blood clots, lower left ventricular hypertrophy and superior blood parameters.” Height, though, may be inversely associated with long-term incidence of fatal stroke (Goldbourt and Tanne, 2002). Schmidt et al (2014) conclude: “In conclusion, short stature was a risk factor for ischemic heart disease and premature death, but a protective factor for atrial fibrillation. Stature was not substantially associated with stroke or venous thromboembolism.” Cancer incidence also increases with height (Green et al, 2011). Samaras, Elrick, and Storms (2003) suggest that women live longer than men because of the height difference between them: men are about 8 percent taller than women but have a 7.9 percent lower life expectancy at birth.

Height at mid-life, too, is a predictor of mortality, with shorter people living longer lives (He et al, 2014). Numerous lines of evidence show that shorter people, and people of shorter ethnies, live longer lives. One study on patients undergoing maintenance hemodialysis stated that “height was directly associated with all-cause mortality and with mortality due to cardiovascular events, cancer, and infection” (Daugirdas, 2015; Shapiro et al, 2015). Even childhood height is associated with prostate cancer risk (Aarestrup et al, 2015). Men who are both tall and have more adipose tissue (body fat) are more likely to die younger, and greater height is associated with a higher risk of prostate cancer (Perez-Cornago et al, 2017). On the other hand, short height is a risk factor for death in hemodialysis patients (Takenaka et al, 2010). Though there are conflicting papers on short height and CHD, many reviews show that shorter people have better health outcomes than taller people.

Sohn (2016) writes:

An additional inch increase in height is related to a hazard ratio of death from all causes that is 2.2% higher for men and 2.5% higher for women. The findings are robust to changing survival distributions, and further analyses indicate that the figures are lower bounds. This relationship is mainly driven by the positive relationship between height and development of cancer. An additional inch increase in height is related to a hazard ratio of death from malignant neoplasms that is 7.1% higher for men and 5.7% higher for women.

[…]

It has been widely observed that tall individuals live longer or die later than short ones even when age and other socioeconomic conditions are controlled for. Some researchers challenged this position, but their evidence was largely based on selective samples.
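To make Sohn’s per-inch figures concrete, here is a minimal Python sketch. It assumes, as a per-unit hazard ratio from a proportional-hazards model implies, that log-hazard is linear in height, so the per-inch ratio compounds multiplicatively over several inches; the function name is hypothetical. Note that a hazard ratio describes the relative risk of dying at a given age, not years of life lost.

```python
# Per-inch increases in the hazard of death, as quoted from Sohn (2016).
ALL_CAUSE = {"men": 0.022, "women": 0.025}
CANCER = {"men": 0.071, "women": 0.057}


def hazard_ratio_for_height_difference(per_inch_increase: float, inches: float) -> float:
    """Scale a per-inch hazard-ratio increase to a multi-inch height difference.

    Assumes a proportional-hazards model with log-hazard linear in height,
    so the per-inch ratio (1 + increase) is raised to the number of inches.
    """
    return (1.0 + per_inch_increase) ** inches


if __name__ == "__main__":
    for cause, table in (("all causes", ALL_CAUSE), ("cancer", CANCER)):
        for sex, increase in table.items():
            hr = hazard_ratio_for_height_difference(increase, 4)
            print(f"{cause}, {sex}: 4 extra inches -> hazard ratio {hr:.3f}")
```

Under that assumption, four extra inches imply an all-cause hazard roughly 9 percent higher for men (1.022^4 is about 1.091), slightly more than the 8.8 percent you would get by simply adding 2.2 percent four times.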

Four additional inches of height in post-menopausal women coincided with a 13 percent increase in overall cancer risk (Kabat et al, 2013), while taller people also have less efficient lungs (Leon et al, 1995; Smith et al, 2000). Samaras and Storms (1992) write “Men of height 175.3 cm or less lived an average of 4.95 years longer than those of height over 175.3 cm, while men of height 170.2 cm or less lived 7.46 years longer than those of at least 182.9 cm.”

Lastly, regarding height and mortality, Turchin et al (2012) write “We show that frequencies of alleles associated with increased height, both at known loci and genome wide, are systematically elevated in Northern Europeans compared with Southern Europeans.” This makes sense, because Southern European populations live longer (and have fewer maladies) than Northern European populations:

Compared with northern Europeans, shorter southern Europeans had substantially lower death rates from CHD and all causes. Greeks and Italians in Australia live about 4 years longer than the taller host population … (Samaras and Elrick, 2002)

So some data don’t follow the trend of taller people living shorter lives due to maladies they acquire because of their height, but most of the data point in the direction that taller people live shorter lives and have higher rates of cancer, lower heart pumping efficiency (the heart needs to pump blood through a bigger body), etc. It makes logical sense that a shorter body would have fewer maladies and would have higher heart pumping efficiency, lower atrial fibrillation, lower DNA damage, and lower risk of blood clotting (duh) compared with taller people. So it seems that if you’re an average American man who wants to live a good, long life, you’d want to be shorter rather than taller.

Lastly, do we truly shrink as we age? Steve Hsu has an article on this matter citing Birrell et al (2005), a longitudinal study in Newcastle, England that began in 1947. The children were measured when full height was expected to be achieved, at about 22 years of age, and were then followed up at age 50. Birrell et al (2005) write:

Height loss was reported by 57 study members (15%, median height loss: 2.5 cm), with nine reporting height loss of >3.5 cm. However, of the 24 subjects reporting height loss for whom true height loss from age 22 could be calculated, assuming equivalence of heights within 0.5 cm, 7 had gained height, 9 were unchanged and only 8 had lost height. There was a poor correlation between self-reported and true height loss (r=0.28) (Fig. 1).

In this population, self-reported height loss was off the mark, and it seems that Hsu takes this conclusion further than he should, writing “Apparently people don’t shrink quite as much with age as they think they do.” No, no, no. This study is not good. We begin shrinking at around age 30:

Men gradually lose an inch between the ages of 30 to 70, and women can lose about two inches. After the age of 80, it’s possible for both men and women to lose another inch.

The conclusion from Hsu on that study is not warranted. To see this, we can look at Sorkin, Muller, and Andres (1999) who write:

For both sexes, height loss began at about age 30 years and accelerated with increasing age. Cumulative height loss from age 30 to 70 years averaged about 3 cm for men and 5 cm for women; by age 80 years, it increased to 5 cm for men and 8 cm for women. This degree of height loss would account for an “artifactual” increase in body mass index of approximately 0.7 kg/m2 for men and 1.6 kg/m2 for women by age 70 years that increases to 1.4 and 2.6 kg/m2, respectively, by age 80 years.
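The “artifactual” BMI increase follows directly from the definition BMI = weight / height², with height in metres: if weight stays the same while height falls, BMI rises. A minimal Python sketch, using a hypothetical 80 kg man who is 175 cm tall at age 30 and loses the 3 cm that Sorkin, Muller, and Andres report as the average for men by age 70 (the weight and starting height are made-up illustration values, so the result will not match their sample average exactly):

```python
def bmi(weight_kg: float, height_cm: float) -> float:
    """Body mass index: weight in kilograms divided by height in metres squared."""
    height_m = height_cm / 100.0
    return weight_kg / height_m ** 2


def artifactual_bmi_increase(weight_kg: float, height_at_30_cm: float, height_loss_cm: float) -> float:
    """BMI gain caused purely by height loss, holding weight constant."""
    return bmi(weight_kg, height_at_30_cm - height_loss_cm) - bmi(weight_kg, height_at_30_cm)


if __name__ == "__main__":
    # Hypothetical man: 80 kg, 175 cm at age 30, losing 3 cm by age 70.
    gain = artifactual_bmi_increase(80.0, 175.0, 3.0)
    print(f"BMI rises by {gain:.2f} kg/m^2 with no change in body fat")
```

For this hypothetical man the gain is a little under 1 kg/m², the same order as the 0.7 kg/m² average reported for men; because the effect grows with weight and shrinks with height, heavier or shorter people see a larger artifact.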

So it seems that Hsu’s conclusion is wrong. We do shrink with age, for myriad reasons: the discs between the vertebrae compress and dehydrate, the aging spine becomes more curved due to loss of bone density, and loss of torso muscle can change posture. Some of these effects can be mitigated, but most people will notice some decrease in height. Either way, Hsu doesn’t know what he’s talking about here.

In conclusion, while there is some conflicting data on whether tall or short people have lower all-cause mortality, the data seem to point to shorter people living longer because they have lower atrial fibrillation, higher heart pumping efficiency, lower DNA damage, lower risk of blood clots (since the blood doesn’t have to travel as far in shorter people), superior blood parameters, etc. With the exception of a few diseases, shorter people do have a higher quality of life and higher lung efficiency. We do get shorter as we age, though with the right diet we can ameliorate some of those effects (for instance, by keeping calcium intake high). There are many reasons why we shrink with age, and the study that Hsu cited isn’t good compared with the other data in the literature on this phenomenon. All in all, shorter people live longer for myriad reasons, and we do shrink as we age, contrary to Steve Hsu’s claims.

Is There Really More Variation Within Races Than Between Them?

1500 words

In 1972 Richard Lewontin, studying the blood groups of different races, came to the conclusion that “Human racial classification is of no social value and is positively destructive of social and human relations. Since such racial classification is now seen to be of virtually no genetic or taxonomic significance either, no justification can be offered for its continuance” (pg 397). He also found that “the difference between populations within a race account for an additional 8.3 percent, so that only 6.3 percent is accounted for by racial classification.” This has led numerous people to conclude, along with Lewontin, that race is ‘of virtually no genetic or taxonomic significance’ and, therefore, that race does not exist.

Lewontin’s main reasoning was this: 85 percent of differences were within populations while 15 percent were between them, so since the lion’s share of human diversity is distributed within races, not between them, race is of no genetic or taxonomic use. Lewontin is correct that there is more variation within races than between them, but he is incorrect that this means racial classification ‘is of no social value’, since knowing and understanding the reality of race (even our perceptions of it, whether accurate or not) influences things such as medical outcomes.
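Lewontin’s figures are a hierarchical apportionment of diversity: about 85.4 percent within populations, 8.3 percent between populations within a race, and 6.3 percent between races. Lewontin himself used the Shannon information measure; the minimal Python sketch below swaps in Nei’s heterozygosity-based G_ST, a closely related and more commonly used statistic, and splits the diversity at a single two-allele locus into within- and between-population shares. The allele frequencies in the example are made up for illustration, and populations are weighted equally.

```python
from typing import Sequence, Tuple


def expected_heterozygosity(p: float) -> float:
    """Expected heterozygosity at a two-allele locus with allele frequency p: 1 - (p^2 + q^2)."""
    q = 1.0 - p
    return 1.0 - (p * p + q * q)


def apportion_diversity(freqs: Sequence[float]) -> Tuple[float, float]:
    """Split one locus's diversity into (within-population, between-population) shares.

    freqs holds the frequency of the same allele in each population; populations
    are weighted equally. The between-population share is Nei's G_ST.
    """
    h_s = sum(expected_heterozygosity(p) for p in freqs) / len(freqs)  # mean within-population diversity
    p_bar = sum(freqs) / len(freqs)
    h_t = expected_heterozygosity(p_bar)  # diversity of all populations pooled together
    if h_t == 0.0:
        raise ValueError("locus is monomorphic; there is no diversity to apportion")
    g_st = (h_t - h_s) / h_t
    return 1.0 - g_st, g_st


if __name__ == "__main__":
    within, between = apportion_diversity([0.3, 0.5, 0.6])  # hypothetical frequencies
    print(f"within populations: {within:.1%}, between populations: {between:.1%}")
```

With these made-up frequencies, 93.75 percent of the diversity lies within populations and 6.25 percent between them: allele frequencies that differ noticeably from group to group still leave most variation inside each group, which is the pattern Lewontin and Rosenberg et al report.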

Following Lewontin, people have cited this paper as evidence against the existence of human races: if most human genetic variation is within races rather than between them, the argument goes, then race cannot be of any significance for things such as medical outcomes.

Rosenberg et al (2002) also confirmed and replicated Lewontin’s analysis, showing that within-population genetic variation accounts for 93-95 percent of human genetic variation, while 3 to 5 percent of human genetic variation lies between groups. Philosopher Michael Hardimon (2017) uses these arguments to buttress his point that ‘racialist races’ (as he calls them) do not exist. His criteria are:

(a) The fraction of human genetic diversity between populations must exceed the fraction of diversity within them.

(b) The fraction of human genetic diversity within populations must be small.

(c) The fraction of diversity between populations must be large.

(d) Most genes must be highly differentiated by race.

(e) The variation in genes that underlie obvious physical differences must be typical of the genome in general.

(f) There must be several important genetic differences between races apart from the genetic differences that underlie obvious physical differences.

Note: (b) says that racialist races are genetically racially homogeneous groups; (c)-(f) say that racialist races are distinguished by major biological differences.

Call (a)-(f) the racialist concept of race’s genetic profile. (Hardimon, 2017: 21)

He clearly strawmans the racialist position, but I’ll get into that another day. Hardimon writes about how both of these studies lend credence to his above argument on racialist races (pg 24):

Rosenberg and colleagues also confirm Lewontin’s findings that most genes are not highly differentiated by race and that the variation in genes that underlie obvious physical differences is not typical of the variation of the genome in general. They also suggest that it is not the case that there are many important genetic differences between races apart from the genetic differences that underlie the obvious physical differences. These considerations further buttress the case against the existence of racialist races.

[…]

The results of Lewontin’s 1972 study and Rosenberg and colleagues’ 2002 study strongly suggest that it is extremely unlikely that there are many important genetic differences between races apart from the genetic differences that underlie the obvious physical differences.

(Hardimon also writes on page 124 that Rosenberg et al’s 2002 study could also be used as evidence for his populationist concept of race, which I will return to in the future.)

My reason for writing this article, though, is to show that the findings by Lewontin and Rosenberg et al regarding more variation within races than between them are indeed true, despite claims to the contrary. There is one article that people cite as evidence against the conclusions of Lewontin and Rosenberg et al, but it’s clear that they only read the abstract and not the full paper.

Witherspoon et al (2007) write that “sufficient genetic data can permit accurate classification of individuals into populations”, which is the point made by those who cite this study for their contention, but the authors conclude (emphasis mine):

The fact that, given enough genetic data, individuals can be correctly assigned to their populations of origin is compatible with the observation that most human genetic variation is found within populations, not between them. It is also compatible with our finding that, even when the most distinct populations are considered and hundreds of loci are used, individuals are frequently more similar to members of other populations than to members of their own population. Thus, caution should be used when using geographic or genetic ancestry to make inferences about individual phenotypes.

Witherspoon et al (2007) analyzed the three classical races (Europeans, Africans and East Asians) and concluded that when genetic similarity is measured over thousands of loci, the answer to the question “How often is a pair of individuals from one population genetically more dissimilar than two individuals chosen from two different populations?” is “never”.
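Witherspoon et al’s question defines a statistic they call ω: the probability that a pair of individuals from the same population is more dissimilar than a pair drawn from two different populations. The minimal Python sketch below estimates it on simulated data only. It assumes two hypothetical populations with made-up allele frequencies, independent loci in Hardy-Weinberg proportions, and a simple allele-count distance (summed absolute differences in allele counts); Witherspoon et al used real genotypes and their own distance measure, so the numbers it prints illustrate the idea rather than reproduce their results.

```python
import bisect
import itertools
import random
from typing import List, Sequence

Genotype = List[int]  # allele counts (0, 1 or 2) at each locus


def simulate_population(freqs: Sequence[float], n: int, rng: random.Random) -> List[Genotype]:
    """Draw n diploid genotypes, assuming Hardy-Weinberg proportions and independent loci."""
    return [[int(rng.random() < p) + int(rng.random() < p) for p in freqs] for _ in range(n)]


def distance(a: Genotype, b: Genotype) -> int:
    """Simple allele-count distance: summed absolute differences across loci."""
    return sum(abs(x - y) for x, y in zip(a, b))


def omega(pop_a: List[Genotype], pop_b: List[Genotype]) -> float:
    """Share of (within-pair, between-pair) comparisons where the within pair is strictly more dissimilar."""
    within = [distance(x, y) for pop in (pop_a, pop_b) for x, y in itertools.combinations(pop, 2)]
    between = sorted(distance(x, y) for x in pop_a for y in pop_b)
    # bisect_left counts the between-population distances strictly smaller than w
    hits = sum(bisect.bisect_left(between, w) for w in within)
    return hits / (len(within) * len(between))


if __name__ == "__main__":
    rng = random.Random(42)
    for n_loci in (10, 100, 1000):
        base = [rng.uniform(0.1, 0.9) for _ in range(n_loci)]
        # Hypothetical second population: each frequency shifted by up to +/- 0.15
        shifted = [min(max(p + rng.uniform(-0.15, 0.15), 0.0), 1.0) for p in base]
        pop_a = simulate_population(base, 20, rng)
        pop_b = simulate_population(shifted, 20, rng)
        print(f"{n_loci:5d} loci: omega = {omega(pop_a, pop_b):.3f}")
```

Ties count as "not more dissimilar", a simplifying choice made here. When the populations differ in allele frequency, ω should tend to shrink as more loci are added, which is the pattern behind Witherspoon et al’s “never” for the most distinct populations; with few loci, within-population pairs are frequently more dissimilar than between-population pairs.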

Hunley, Cabana, and Long (2016: 7) also confirm Lewontin’s analysis, writing “In sum, we concur with Lewontin’s conclusion that Western-based racial classifications have no taxonomic significance, and we hope that this research, which takes into account our current understanding of the structure of human diversity, places his seminal finding on firmer evolutionary footing.” But the claim that “racial classifications have no taxonomic significance” is FALSE.

This is a point that Edwards (2003) rebutted in depth. While he agreed with Lewontin’s (1972) analysis that there was more variation within races than between them (which was confirmed through subsequent analysis), he strongly disagreed with Lewontin’s conclusion that race is of no taxonomic significance. Richard Dawkins, too, disagreed with Lewontin, writing in his book The Ancestor’s Tale: “Most of the variation among humans can be found within races as well as between them. Only a small admixture of extra variation distinguishes races from each other. That is all correct. What is not correct is the inference that race is therefore a meaningless concept.” The fact that there is more variation within races than between them is irrelevant to taxonomic classification, and classifying races by phenotypic differences (morphology and facial features) along with geographic ancestry shows, just by looking at the average phenotype, that race exists, though these concepts make no value-based judgements about anything you can’t ‘see’, such as mental and personality differences between populations.

While some agree with Edwards’ analysis of Lewontin’s argument about race’s taxonomic significance, they don’t believe that he successfully refuted Lewontin. For instance, Hardimon (2017: 22-23) writes that Lewontin’s argument against the existence of what Hardimon calls ‘racialist race’ (his strawman quoted above), an argument resting on the within-race component of genetic variation being greater than the variation between races, “is untouched by Edwards’ objections.”

Sesardic (2010: 152), however, argues that “Therefore, contra Lewontin, the racial classification that is based on a number of genetic differences between populations may well be extremely reliable and robust, despite the fact that any single of those genetic between-population differences remains, in itself, a very poor predictor of racial membership.” He also states that the 7 to 10 percent difference between populations “actually refers to the inter-racial portion of variation that is averaged over the separate contributions of a number of individual genetic indicators that were sampled in different studies” (pg 150).

I personally avoid all of this talk about genes and allele frequencies between populations and jump straight to Hardimon’s minimalist race concept, a concept that, according to Hardimon, is “stripped down to its barest bones” while still capturing enough of the racialist concept of race to count as a race concept.

In sum, variation within races is greater than variation between races, but this means nothing for the reality of race, since races can still be delineated based on physical features and geographic ancestry peculiar to each group. Using a few indicators (morphology; facial features such as the nose, lips, cheekbones and facial structure; and hair, along with geographic ancestry), we can group races based on these criteria and show that race does indeed exist in a physical, not social, sense and that these categories are meaningful in a medical context (Hardimon, 2013, 2017). So even though genetic variation is greater within races than between them, this does not mean that race has no taxonomic significance, as other authors have argued. Hardimon (2017: 23) agrees, writing (emphasis his) “… Lewontin’s data do not preclude the possibility that racial classification might have taxonomic significance, but they do preclude the possibility that racialist races exist.”

Hardimon’s strawman of the racialist concept notwithstanding (which I will cover in the future), his other three race concepts (the minimalist, populationist and socialrace concepts) are logically sound and stand up to a lot of criticism. Either way, race does exist, and it does not matter whether human genetic diversity is greater within races than between them.

Don’t Fall for Facial ‘Reconstructions’

1400 words

Back in April of last year, I wrote an article on the problems with facial ‘reconstructions’ and why, for instance, Mitochondrial Eve probably didn’t look like that. More recently, there have been ‘reconstructions’ of Nariokotome boy and of Neanderthals. The ‘reconstructors’, of course, have no idea what the soft tissue of the individual in question looked like, so they must infer it and use ‘guesswork’ to show parts of the phenotype when they do these ‘reconstructions’.

My reason for writing this is the ‘reconstruction’ of Nefertiti. I have seen alt-righters proclaim ‘The Ancient Egyptians were white!’ while I saw blacks stating ‘Why are they whitewashing our history!’ Both of these claims are dumb, and they’re also wrong. Then there are articles, driven purely by ideology, that proclaim ‘Facial Reconstruction Reveals Queen Nefertiti Was White!’

This article is garbage. It first claims that King Tut’s DNA came back as similar to that of 70 percent of Western European men. There are a lot of problems with this claim, though: 1) the company IGENEA inferred his Y chromosome from a TV special; the data was not available for analysis. 2) Haplogroup does not equal race. This is very simple.

Now that the White race has decisively reclaimed the Ancient Egyptians

The white race has never ‘claimed’ the Ancient Egyptians; this is just like the Arthur Kemp fantasy that the Ancient Egyptians were Nordic, that any and all civilizations throughout history were started and maintained by whites, and that these civilizations fell because of racial mixing, etc. These fantasies have no basis in reality, and now we will have to deal with people pushing these facial ‘reconstructions’ that are largely just ‘art’ and don’t actually show us what the individual in question looked like (more on this below).

Stephan (2003) goes through the four primary fallacies of facial reconstruction. Fallacy 1) That we can predict soft tissue from the skull and therefore create recognizable faces. This is highly flawed. Soft tissue fossilization is rare, rare enough to be irrelevant, especially when discussing what ancient humans looked like. So for these purposes (and this is perhaps the most important criticism of ‘reconstructions’), any and all soft tissue features you see on these ‘reconstructions’ are largely guesswork and artistic flair on the part of the ‘reconstructor’. Facial ‘reconstructions’ are mostly art: the ‘reconstructor’ has to make a ton of leaps and assumptions while creating his sculpture because he does not have the information needed to make it accurate, which is a large blow to facial ‘reconstructions’.

And, perhaps most importantly for people who push ‘reconstructions’ of ancient hominins: “The decomposition of the soft tissue parts of paleoanthropological beings makes it impossible for the detail of their actual soft tissue face morphology and variability to be known, as well as the variability of the relationship between the hard and the soft tissue.” and “Hence any facial “reconstructions” of earlier hominids are likely to be misleading [4].”

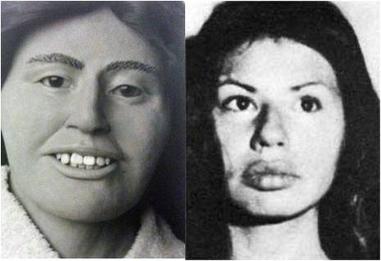

As an example for the inaccuracy of these ‘reconstructions’, see this image from Wikipedia:

The left is the ‘reconstruction’ while the right is how the woman looked. She had distinct lips which could not be recreated because, again, soft tissue is missing.

2) That faces are ‘reconstructed’ from skulls. This fallacy follows directly from fallacy 1, the belief that ‘reconstructors’ can accurately predict what the former soft tissue looked like. Faces are not ‘reconstructed’ from skulls; it’s largely guesswork. Stephan notes that people who see and hear about facial ‘reconstructions’ say things like “wow, you have to be pretty smart/knowledgeable to be able to do such a complex task”, and he suggests that facial ‘approximation’ may be a better term, since it doesn’t imply that the face was ‘reconstructed’ from the skull.

3) That this discipline is ‘credible’ because it is ‘partly science’. Stephan argues that calling it a science is ‘misleading’, writing (pg 196): “The fact that several of the commonly used subjective guidelines when scientifically evaluated have been found to be inaccurate, … strongly emphasizes the point that traditional facial approximation methods are not scientific, for if they were scientific and their error known previously surely these methods would have been abandoned or improved upon.”

And finally, 4) That we know ‘reconstructions’ work because they have been successful in forensic investigations. This is not a strong claim, because other factors could have led to the discovery, such as media coverage, chance, or ‘contextual information’. So these forensic cases cannot be pointed to when one attempts to argue for the utility of facial ‘reconstructions’. There also seems to be a lot of publication bias in this literature, with many scientists not publishing data that, for instance, did not show the ‘face’ of the individual in question. It is largely guesswork. “The inconsistency in reports combined with confounding factors influencing casework success suggest that much caution should be employed when gauging facial approximation success based on reported practitioner success and the success of individual forensic cases” (Stephan, 2003: 196).

So: 1) the main point here is that soft tissue work is ‘just a guess’, and the prediction methods employed to guess the soft tissue have not been tested. 2) Faces are not ‘reconstructed’ from skulls. 3) It’s hardly ‘science’, and more a form of art, due to the guesses and large assumptions poured into the ‘technique’. 4) Forensic ‘successes’ do not show that ‘reconstructions’ ‘work’, since much more goes on in those cases than a ‘reconstruction’ leading investigators to a person, and any such hit was probably due to chance. Hayes (2015) also writes: “Their actual ability to meaningfully represent either an individual or a museum collection is questionable, as facial reconstructions created for display and published within academic journals show an enduring preference for applying invalidated methods.”

Stephan and Henneberg (2001) write: “It is concluded that it is rare for facial approximations to be sufficiently accurate to allow identification of a target individual above chance. Since 403 incorrect identifications were made out of 592 identification scenarios, facial approximation should be considered to be a highly inaccurate and unreliable forensic technique. These results suggest that facial approximations are not very useful in excluding individuals to whom skeletal remains may not belong.”
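To put the Stephan and Henneberg (2001) figures in percentage terms, here is a quick check of the arithmetic. It assumes every one of the 592 scenarios not counted among the 403 incorrect identifications was a correct one; the quoted passage does not say whether some scenarios ended with no identification at all, so the “correct” figure is derived by subtraction and is an assumption, not a number they report.

```python
# Figures quoted from Stephan and Henneberg (2001).
incorrect = 403
total = 592

# ASSUMPTION: every scenario not scored as incorrect was a correct
# identification (the quote does not mention non-responses).
correct = total - incorrect

incorrect_rate = incorrect / total
correct_rate = correct / total

print(f"incorrect: {incorrect}/{total} = {incorrect_rate:.1%}")
print(f"correct (derived): {correct}/{total} = {correct_rate:.1%}")
```

Under that assumption, roughly two out of three identification attempts were wrong.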

Wilkinson (2010) largely agrees, but states that ‘artistic interpretation’ should be used only when necessary, “particularly for the morphology of the ears and mouth, and with the skin for an ageing adult”, and that “The greatest accuracy is possible when information is available from preserved soft tissue, from a portrait, or from a pathological condition or healed injury.” But she also writes: “… the laboratory studies of the Manchester method suggest that facial reconstruction can reproduce a sufficient likeness to allow recognition by a close friend or family member.”

So to sum up: 1) There is insufficient data on tissue thickness. This just becomes guesswork and, of course, is up to artistic ‘interpretation’, so it depends on whichever individual artist does the ‘reconstruction’. Cartilage, skin and fat do not fossilize (except in very rare cases, and I am not aware of any human cases). 2) There is a lack of methodological standardization. There is no single method to use to ‘guesstimate’ things like tissue thickness and other soft tissue that does not fossilize. 3) They are very subjective! For instance, if the artist has any idea in his head of what the individual ‘may have’ looked like, his presuppositions may pass from his head into his ‘reconstruction’, thus biasing it toward a look he or she already believes is true. I think this is the case for Mitochondrial Eve; just because she lived in Africa doesn’t mean that she looked similar to any modern Africans alive today.

I would make the claim that these ‘reconstructions’ are not science; they’re just the artwork of people who have assumptions about what people used to look like (for instance, with Nefertiti), and they take their assumptions and make them part of their artwork, their ‘reconstruction’. So if you are going to view the special that will be on tomorrow night, keep in the back of your mind that the ‘reconstruction’ has tons of unvalidated assumptions thrown into it. So, no, Nefertiti wasn’t ‘white’ and Nefertiti wasn’t ‘whitewashed’; since these ‘methods’ are highly flawed and highly subjective, we should not state that “This is what Nefertiti used to look like”, because it is probably very, very far from the truth. Do not fall for facial ‘reconstructions’.

I Am Not A Phrenologist

1500 words

People seem to be confused about the definition of the term ‘phrenology’. Many people think that merely measuring skulls can be called ‘phrenology’. This is a very confused view to hold.

Phrenology is the study of the shape and size of the skull, from which conclusions are then drawn about one’s character and psychology, whether from bumps on the skull (Simpson, 2005) or from the relative sizes of different areas of the brain. Franz Gall, the father of phrenology, believed that by measuring a person’s skull and the bumps on it, he could accurately predict that person’s character and psychology. Gall had also proposed a theory of mind and brain (Eling, Finger, and Whitaker, 2017). The usefulness of phrenology aside, Gall, its creator, contributed significantly to our understanding of the brain, as he was a neuroanatomist and physiologist.

Gall’s views on the brain can be seen here (read this letter in which he espouses them):

1. The brain is the organ of the mind.