DNA—Blueprint and Fortune Teller?

2500 words

What would you think if you heard about a new fortune-telling device that is touted to predict psychological traits like depression, schizophrenia and school achievement? What’s more, it can tell your fortune from the moment of your birth, it is completely reliable and unbiased — and it only costs £100.

This might sound like yet another pop-psychology claim about gimmicks that will change your life, but this one is in fact based on the best science of our times. The fortune teller is DNA. The ability of DNA to understand who we are, and predict who we will become has emerged in the last three years, thanks to the rise of personal genomics. We will see how the DNA revolution has made DNA personal by giving us the power to predict our psychological strengths and weaknesses from birth. This is a game-changer as it has far-reaching implications for psychology, for society and for each and every one of us.

This DNA fortune teller is the culmination of a century of genetic research investigating what makes us who we are. When psychology emerged as a science in the early twentieth century, it focused on environmental causes of behavior. Environmentalism — the view that we are what we learn — dominated psychology for decades. From Freud onwards, the family environment, or nurture, was assumed to be the key factor in determining who we are. (Plomin, 2018: 6, my emphasis)

The main premise of Plomin’s 2018 book Blueprint is that DNA is a fortune teller and personal genomics is a fortune-telling device. The fortune-telling device Plomin discusses most in the book is the polygenic score (PGS). PGSs are derived from GWA studies: for each SNP associated with trait T, an individual’s genotype is scored 0, 1, or 2 (the number of copies of the trait-associated allele they carry), and these scores are added up to give the individual’s PGS for T. But Plomin’s claim that DNA is a fortune teller is derived from the claim that DNA is a blueprint, and since DNA is not a blueprint, the fortune-teller claim falls with it.
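As a rough sketch of what “adding up” SNP genotypes amounts to, the snippet below computes a score as a weighted sum of allele counts. It assumes, as is common practice but not spelled out above, that each SNP’s count is weighted by an effect size estimated in a GWA study. The function name, SNP identifiers, and weights are all hypothetical, invented purely for illustration.

```python
from typing import Mapping


def polygenic_score(genotypes: Mapping[str, int], weights: Mapping[str, float]) -> float:
    """Sum allele counts (0, 1 or 2), each weighted by a per-SNP effect size."""
    score = 0.0
    for snp, weight in weights.items():
        count = genotypes.get(snp)
        if count is None:
            # SNP not genotyped for this person; this sketch simply skips it.
            continue
        if count not in (0, 1, 2):
            raise ValueError(f"{snp}: allele count must be 0, 1 or 2, got {count}")
        score += weight * count
    return score


# Hypothetical SNP identifiers and effect sizes (not taken from any real GWA study).
example_weights = {"snp_a": 0.5, "snp_b": -0.25, "snp_c": 0.125}
# Hypothetical individual: two copies at snp_a, one at snp_b, none at snp_c.
example_person = {"snp_a": 2, "snp_b": 1, "snp_c": 0}

example_score = polygenic_score(example_person, example_weights)
print(example_score)
```

Note that nothing in the calculation refers to mechanism or environment: the score is only as good as the correlational weights fed into it, which is where the problems discussed in this post come in.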

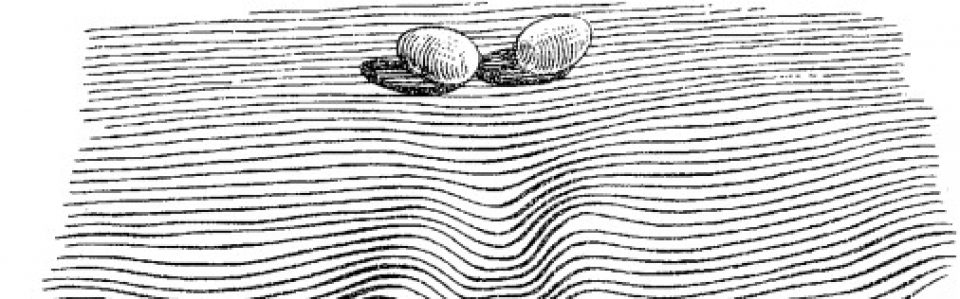

It’s funny that Plomin calls the measure “unbiased” (he is talking about DNA, which is in effect “unbiased”), because PGS are anything BUT unbiased. Most GWAS/PGS are derived from European populations, and there are “biases and inaccuracies of polygenic risk scores (PRS) when predicting disease risk in individuals from populations other than those used in their derivation” (De La Vega and Bustamante, 2018). (PRSs are derived from statistical gene associations using GWAS; Janssens and Joyner, 2019.) More than 80 percent of GWA studies have been done on European populations. Because of this European skew, “prediction accuracy [is] reduced by approximately 2- to 5-fold in East Asian and African American populations, respectively” (Martin et al, 2018). See for example Figure 1 from Martin et al (2018):

With the huge number of GWA studies done on European populations, these scores cannot be used on non-European populations for ‘prediction’, even disregarding the other problems with PGS/GWAS.

By studying genetically informative cases like twins and adoptees, behavioural geneticists discovered some of the biggest findings in psychology because, for the first time, nature and nurture could be disentangled.

[…]

… DNA differences inherited from our parents at the moment of conception are the consistent, lifelong source of psychological individuality, the blueprint that makes us who we are. A blueprint is a plan. … A blueprint isn’t all that matters but it matters more than everything else put together in terms of the stable psychological traits that make us who we are. (Plomin, 2018: 6-8, my emphasis)

Never mind the slew of problems with twin and adoption studies (Joseph, 2014; Joseph et al, 2015; Richardson, 2017a). I also refuted the notion that “A blueprint is a plan” last year, quoting numerous developmental systems theorists. The main thrust of Plomin’s book, that DNA is a blueprint and can therefore be seen as a fortune teller, with personal genomics as the fortune-telling device that tells the fortunes of the people whose DNA is analyzed, is false: DNA does not work the way it does in Plomin’s mind.

These big findings were based on twin and adoption studies that indirectly assessed genetic impact. Twenty years ago the DNA revolution began with the sequencing of the human genome, which identified each of the 3 billion steps in the double helix of DNA. We are the same as every other human being for more than 99 percent of these DNA steps, which is the blueprint for human nature. The less than 1 per cent of difference of these DNA steps that differ between us is what makes us who we are as individuals — our mental illnesses, our personalities and our mental abilities. These inherited DNA differences are the blueprint for our individuality …

[DNA predictors] are unique in psychology because they do not change during our lives. This means that they can foretell our futures from our birth.

[…]

The applications and implications of DNA predictors will be controversial. Although we will examine some of these concerns, I am unabashedly a cheerleader for these changes. (Plomin, 2018: 8-10, my emphasis)

This quote further shows Plomin’s “blueprint” for the rest of his book (that DNA can “foretell our futures from our birth”) and how it shapes the conclusions he draws from the work he discusses. Yes, all scientists are biased (as Stephen Jay Gould noted), but Plomin outright claims to be an unabashed cheerleader for his work. That self-admission does explain some of the conclusions he reaches in Blueprint.

However, the problem with the mantra ‘nature and nurture’ is that it runs the risk of sliding back into the mistaken view that the effects of genes and environment cannot be disentangled.

[…]

Our future is DNA. (Plomin, 2018: 11-12)

The problem with the mantra “nature and nurture” is not that it “runs the risk of sliding back into the mistaken view that the effects of genes and environment cannot be disentangled”. The problem is what Plomin assumes about how DNA works. The claim that DNA can be disentangled from the environment presumes that DNA is environment-independent. But as Moore shows in his book The Dependent Gene, and as Schneider (2007) shows, “the very concept of a gene requires the environment”. Moore notes that “The common belief that genes contain context-independent “information”—and so are analogous to “blueprints” or “recipes”—is simply false” (quoted in Schneider, 2007). Moore also showed in The Dependent Gene that twin studies are flawed, as have numerous other authors.

Lewkowicz (2012) argues that “genes are embedded within organisms which, in turn, are embedded in external environments. As a result, even though genes are a critical part of developmental systems, they are only one part of such systems where interactions occur at all levels of organization during both ontogeny and phylogeny.” Plomin—although he does not explicitly state it—is a genetic reductionist. This type of thinking can be traced back, most popularly, to Richard Dawkins’ 1976 book The Selfish Gene. The genetic reductionists can, and do, make the claim that organisms can be reduced to their genes, while developmental systems theorists claim that holism, and not reductionism, better explains organismal development.

The main thrust of Plomin’s Blueprint rests on (1) GWA studies and (2) PGSs/PRSs derived from the GWA studies. Ken Richardson (2017b) has argued that there is “some cryptic but functionally irrelevant genetic stratification in human populations, which, quite likely, will covary with social stratification or social class.” Richardson’s (2017b) argument is simple: societies are genetically stratified; social stratification maintains genetic stratification; social stratification creates, and maintains, cognitive differentiation; “cognitive” tests reflect prior social stratification. This “cryptic but functionally irrelevant genetic stratification in human populations” is what GWA studies pick up. Richardson and Jones (2019) extend the argument: spurious correlations can arise from genetic population structure that GWA studies cannot account for. GWA study authors claim that this population stratification is accounted for, but they define social class solely on the basis of SES (socioeconomic status), which does not capture all of what “social class” itself captures (Richardson, 2002: 298-299).

Plomin also heavily relies on the results of twin and adoption studies—a lot of it being his own work—to attempt to buttress his arguments. However, as Moore and Shenk (2016) show—and as I have summarized in Behavior Genetics and the Fallacy of Nature vs Nurture—heritability estimates for humans are highly flawed since there cannot be a fully controlled environment. Moore and Shenk (2016: 6) write:

Heritability statistics do remain useful in some limited circumstances, including selective breeding programs in which developmental environments can be strictly controlled. But in environments that are not controlled, these statistics do not tell us much. In light of this, numerous theorists have concluded that ‘the term “heritability,” which carries a strong conviction or connotation of something “[in]heritable” in the everyday sense, is no longer suitable for use in human genetics, and its use should be discontinued.’ Reviewing the evidence, we come to the same conclusion.

Heritability estimates assume that nature (genes) can be separated from nurture (environment), but “the very concept of a gene requires the environment” (Schneider, 2007) so it seems that attempting to partition genetic and environmental causation of any trait T is a fool’s—reductionist—errand. If the concept of gene depends on and requires the environment, then how does it make any sense to attempt to partition one from the other if they need each other?
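To make explicit what is being assumed, here is the standard textbook way of writing the partition. The symbols are the usual quantitative-genetics notation (phenotypic variance $V_P$, genetic variance $V_G$, environmental variance $V_E$, broad-sense heritability $H^2$); they are not taken from Plomin or from Moore and Shenk.

```latex
V_P = V_G + V_E + 2\,\mathrm{Cov}(G,E) + V_{G \times E},
\qquad
H^2 = \frac{V_G}{V_P}
```

Reading $H^2$ as “the genetic share” of a trait only works if the covariance and interaction terms are negligible, so that $V_P \approx V_G + V_E$. That is exactly the separability assumption being disputed here.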

Let’s face it: Plomin, in his book Blueprint, is speaking like a biological reductionist, though he may deny the claim. The claims from those who push PRS for use in precision medicine are unfounded, as there are numerous problems with the concept. Precision medicine and personalized medicine are similar concepts, and Joyner and Paneth (2015) are skeptical of their use and pose seven questions for personalized medicine. Furthermore, Joyner, Boros and Fink (2018) argue that “redundant and degenerate mechanisms operating at the physiological level limit both the general utility of this assumption and the specific utility of the precision medicine narrative.” Joyner (2015: 5) also argues that “Neo-Darwinism has failed clinical medicine. By adopting a broader perspective, systems biology does not have to.”

Janssens and Joyner (2019) write that “Most [SNP] hits have no demonstrated mechanistic linkage to the biological property of interest.” Researchers can show correlations between disease phenotypes and genes, but they cannot show causation, which would require mechanistic relations between the proposed genes and the disease phenotype. And, as Kampourakis (2017: 19) notes, genes do not cause diseases on their own; they only contribute to variation in them.

Edit: Take also this quote from Plomin and von Stumm (2018) (quoted by Turkheimer):

GPS are unique predictors in the behavioural sciences. They are an exception to the rule that correlations do not imply causation in the sense that there can be no backward causation when GPS are correlated with traits. That is, nothing in our brains, behaviour or environment changes inherited differences in DNA sequence. A related advantage of GPS as predictors is that they are exceptionally stable throughout the life span because they index inherited differences in DNA sequence. Although mutations can accrue in the cells used to obtain DNA, like any cells in the body these mutations would not be expected to change systematically the thousands of inherited SNPs that contribute to a GPS.

Turkheimer goes on to say that this (false) claim by Plomin and von Stumm (2018) assumes that there is no top-down causation, i.e., that phenotypes don’t cause genes, or that there is no causation from the top to the bottom. (See the special issue of Interface Focus for a slew of articles on top-down causation.) But downward (top-down) causation exists in biological systems (Noble, 2012, 2017). The very claim that “nothing in our brains, behaviour or environment changes inherited differences in DNA sequence” is ridiculous! This is something that, of course, Plomin did not discuss in Blueprint. But in a book that, supposedly, shows “how DNA makes us who we are”, why not discuss epigenetics? Plomin is confused, because DNA methylation impacts behavior and behavior impacts DNA methylation (Lerner and Overton, 2017: 114). Lerner and Overton (2017: 145) write that:

… it should no longer be possible for any scientist to undertake the procedure of splitting of nature and nurture and, through reductionist procedures, come to conclusions that the one or the other plays a more important role in behavior and development.

Plomin’s reductionist takes therefore fail again. The “tangential topics” to “inherited DNA differences” that Plomin expresses “reluctance” to discuss include epigenetics (Plomin, 2018: 12). But that reluctance was a downfall of his book, as epigenetic mechanisms can and do make a difference to “inherited DNA differences” (see, for example, Baedke, 2018, Above the Gene, Beyond Biology: Toward a Philosophy of Epigenetics, and Meloni, 2019, Impressionable Biologies: From the Archaeology of Plasticity to the Sociology of Epigenetics; see also Meloni, 2018). The genome can and does “react” to what occurs to the organism in the environment, so it is false that “nothing in our brains, behaviour or environment changes inherited differences in DNA sequence” (Plomin and von Stumm, 2018), since our behavior and actions can and do methylate our DNA (Meloni, 2014). This falsifies Plomin’s claim and is why he should have discussed epigenetics in Blueprint. End Edit

Conclusion

So the main premise of Plomin’s Blueprint consists of two claims: (1) that DNA is a fortune teller and (2) that personal genomics is a fortune-telling device. He draws these big claims from PGS/PRS studies. However, over 80 percent of GWA studies have been done on European populations. Knowing that we cannot apply scores derived from these datasets to non-European populations greatly hampers the use of PGS/PRS in other populations, although PGS/PRS are not that useful in and of themselves even for European populations. Plomin’s whole book is a reductionist screed: “Sure, other factors matter, but DNA matters more” is one of his main claims. But, a priori, since there is no privileged level of causation, one cannot privilege DNA over any other developmental variables (Noble, 2012). To understand disease, we must understand the whole system, and how one part of the system becoming dysfunctional affects the other parts and how the system runs. The PGS/PRS hunts are reductionist in nature, and the only answer to these reductionist paradigms is a new paradigm from systems biology: holism.

Plomin’s assertions in his book are gleaned from highly confounded GWA studies. Like all reductionists, Plomin also assumes that we can disentangle nature and nurture. But nature and nurture interact: without genes there would still be an environment, but without an environment there would be no genes, as gene expression is predicated on the environment and what occurs in it. So Plomin’s reductionist claim that “Our future is DNA” is false. Our future is studying the interactive developmental system, not reducing it to a sum of its parts. Holistic biology (systems biology) beats reductionist biology (the Neo-Darwinian Modern Synthesis).

DNA is not a blueprint, nor is it a fortune teller, and personal genomics is not a fortune-telling device. The claims that DNA is a blueprint/fortune teller and that personal genomics is a fortune-telling device come from Plomin and are derived from highly flawed GWA studies and, further, PGS/PRS. Therefore Plomin’s claims that DNA is a blueprint/fortune teller and that personal genomics is a fortune-telling device are false.

(Also read Eric Turkheimer’s 2019 review of Plomin’s book, The Social Science Blues, along with Steve Pittelli’s review, Biogenetic Overreach, for an overview and critiques of Plomin’s ideas. And read Ken Richardson’s article It’s the End of the Gene As We Know It for a critique of the concept of the gene.)

African Neolithic Part 1: Amending Common Misunderstandings

One of the weaknesses of HBD, in my opinion, is its focus on the Paleolithic and modern eras while glossing over the major developments in between. One instance is the links made between Paleolithic Western Europe’s Cro-Magnon art and modern Western Europe’s prowess (note the geographical/genetic discontinuity there, for those actually informed on such matters).

Africa, having a worse archaeological record due to ideological histories and modern problems, is rather vulnerable to reliance on outdated sources, as already discussed before on this blog. This lack of mention, however, isn’t strict.

Eventually, updated material will be presented in a future outline of Neolithic to Middle Ages development in West Africa.

A recent example of an erroneous comparison is in Heiner Rindermann’s Cognitive Capitalism, pages 129-130. He makes multiple claims about precolonial African development to explain prolonged investment in magical thinking.

- Metallurgy not developed independently.

- No wheel.

- Dinka did not properly use cattle, since large portions were left castrated and uneaten.

- No domesticated animals of indigenous origin despite European animals being just as dangerous, contra Diamond (lists African dogs, cats, antelope, gazelle, and zebras as potential specimens; mentions European foxes as an example of a “dangerous” animal recently domesticated, along with African antelopes in the Ukraine).

- A late, diffused, Neolithic Revolution 7000 years following that of the Middle East.

- Less complex Middle Age Structure.

- Less complex Cave structures.

Now, technically, much of this falls outside of what would be considered “neolithic”, even in the case of Africa. However, understanding the context of Neolithic development in Africa provides context to each of these points and periods of time by virtue of causality. Thus, they will be responded to in archaeological sequence.

Dog domestication, Foxes, and human interaction.

The domestication of dogs occurred around 23,000-25,000 years ago, when Eurasian hunter-gatherers intensified megafauna hunting, attracting less aggressive wild dogs that could be tamed. Rindermann’s mention of the fox experiment replicates this idea. Domestication isn’t a matter of breaking the most difficult of animals; it’s using the easiest ones to your advantage.

Africa’s case needs to be examined in this same scope. In regards to behavior, African wild dogs are rarely solitary, so attracting lone individuals is already impractical. The species likewise developed under a different level of competition.

They were probably under as much competition from these predators as the ancestral African wild dogs were under from the guild of super predators on their continent.

What was different, though, is the ancestral wolves never evolved in an enviroment which scavenging from various human species was a constant threat, so they could develop behaviors towards humans that were not always characterized by extreme caution and fear.

Europe in particular shows that carnivore density was lower, and thus advantageous to hominids.

Consequently, the first Homo populations that arrived in Europe at the end of the late Early Pleistocene found mammal communities consisting of a low number of prey species, which accounted for a moderate herbivore biomass, as well as a diverse but not very abundant carnivore guild. This relatively low carnivoran density implies that the hominin-carnivore encounter rate was lower in the European ecosystems than in the coeval East African environments, suggesting that an opportunistic omnivorous hominin would have benefited from a reduced interference from the carnivore guild.

This pattern is consistent with megafaunal extinction data.

The first hints of abnormal rates of megafaunal loss appear earlier, in the Early Pleistocene in Africa around 1 Mya, where there was a pronounced reduction in African proboscidean diversity (11) and the loss of several carnivore lineages, including sabertooth cats (34), which continued to flourish on other continents. Their extirpation in Africa is likely related to Homo erectus evolution into the carnivore niche space (34, 35), with increased use of fire and an increased component of meat in human diets, possibly associated with the metabolic demands of expanding brain size (36). Although remarkable, these early megafauna extinctions were moderate in strength and speed relative to later extinctions experienced on all other continents and islands, probably because of a longer history in Africa and southern Eurasia of gradual hominid coevolution with other animals.

This fundamental difference in adaptation to human presence and subsequent response is obviously a major detail in in-situ animal domestication.

Another example would be the failure of even colonialists to tame the Zebra.

Of course, this alone may not be good enough. One can nonetheless cite the tameable Belgian Congo forest elephant, or the eland. So we can set aside simply regurgitating Diamond.

This will just lead me to my next point. That is, what’s the pay-off?

Pastoralism and Utility

A decent test of which fauna in Africa can be utilized would be the “experiments” of the Ancient Egyptians, who are seen as the Eurasian “exception” to African civilization. Hyenas and antelope, from what I’ve read, were kept in captivity, but over time this did not result in selected traits. The only animal domesticated in this region was the donkey, a close relative of zebras.

This brings to light another perspective on the Russian fox experiments: why were pet foxes not a trend for Eurasians prior to the 20th century? It can be assumed that attempts at animal domestication simply were not worth the investment in the wake of already domesticated animals, even if one grew up in a society/genetic culture at this time that harnessed the skills.

For instance, a slow herd of eland can be herded and domesticated, but will it pay off compared to the gains from investing in adapting diffused animals to a new environment? (This will be expanded upon in the future as well.)

Elephants are nice for large colonial projects, but local diseases that discourage herding, and that also disrupt population density, again affect the utility of large-bodied animals. Investing in agriculture and iron proved more successful.

Cats actually domesticated themselves and lacked any real utility prior to feasting on urban pests. In Africa, with highly mobile groups as will be explained later, investment in cats wasn’t going to change much. Wild guineafowl, however, were useful to tame in West Africa and were used to eat insects.

As can be seen here, pastoralism, diffused from the Middle East, is roughly as old in Africa as in Europe. Both lacked independently raised species prior to it and made few innovations with in situ beasts beyond that foundation. (Advancement in plant management preceding developed agriculture, a sort of skill that would parallel dog domestication for husbandry, will be discussed in a future article.)

And given how advanced Mesoamericans became without draft animals, as mentioned before, their importance seems to be overplayed from a pure “indigenous” perspective. The role of invention itself ought to be questioned as well in what we can actually infer.

Borrowed, so what?

In a thought experiment, let’s consider some key details of diffusion. The independent invention of animal domestication or metallurgy is by no means something to be glossed over. Over-fixating on this, however, in turn glosses over some other details of successful diffusion.

Why would a presumably lower-aptitude population adopt a cognitively demanding skill and reorient its society around it, without this reflecting an internal change of character compared to before? Living in a new type of economic system is undoubtedly bound to result in a new population in regards to using cognition to exploit resources. This would require contributions of their own to the process.

This applies to African domesticated breeds,

Viewing domestication as an invention also produces a profound lack of curiosity about evolutionary changes in domestic species after their documented first appearances. [……] African domesticates, whether or not from foreign ancestors, have adapted to disease and forage challenges throughout their ranges, reflecting local selective pressures under human management. Adaptations include dwarfing and an associated increase in fecundity, tick resistance, and resistance to the most deleterious effects of several mortal infectious diseases. While the genetics of these traits are not yet fully explored, they reflect the animal side of the close co-evolution between humans and domestic animals in Africa. To fixate upon whether or not cattle were independently domesticated from wild African ancestors, or to dismiss chickens’ swift spread through diverse African environments because they were of Asian origin, ignores the more relevant question of how domestic species adapted to the demands of African environments, and how African people integrated them into their lives.

The same can be said for Metallurgy,

We do not yet know whether the seventh/sixth century Phoenician smelting furnace from Toscanos, Spain (illustrated by Niemeyer in MA, p.87, Figure 3) is typical, but it is clearly very different from the oldest known iron smelting technology in sub-Saharan Africa. Almost all published iron smelting furnaces of the first millennium cal BC from Rwanda/Burundi, Buhaya, Nigeria, Niger, Cameroon, Congo, Central African Republic and Gabon are slag-pit furnaces, which are so far unknown from this or earlier periods in the Middle East or North Africa. Early Phoenician tuyères, which have square profiles enclosing two parallel (early) or converging (later) narrow bores are also quite unlike those described for early sites in sub-Saharan Africa, which are cylindrical with a single and larger bore.

African ironworkers adapted bloomery furnaces to an extraordinary range of iron ores, some of which cannot be used by modern blast furnaces. In both northern South Africa (Killick & Miller 2014) and in the Pare mountains of northern Tanzania (Louise Iles pers. comm., 2013) magnetite-ilmenite ores containing up to 25 per cent TiO2 (by mass) were smelted. The upper limit for TiO2 in iron ore for modern blast furnaces is only 2 per cent by mass (McGannon 1971). High-titanium iron ores can be smelted in bloomery furnaces because these operate at lower temperatures and have less-reducing furnace atmospheres than blast furnaces. In the blast furnace titanium oxide is partially reduced and makes the slag viscous and hard to drain, but in bloomery furnaces it is not reduced and combines with iron and silicon oxide to make a fluid slag (Killick & Miller 2014). Blast furnace operators also avoid ores containing more than a few tenths of a percent of phosphorus or arsenic, because when these elements are dissolved in the molten iron, they segregate to grain boundaries on crystallization, making the solid iron brittle on impact.

Bulls (and rams) are often, but not necessarily, castrated at a fairly advanced age, probably in part to allow the conformation and characteristics of the animal to become evident before the decision is made. A castrated steer is called muor buoc, an entire bull thon (men in general are likened to muor which are usually handsome animals greatly admired on that account; an unusually brave, strong or successful man may be called thon, that is, “bull with testicles”). Dinka do not keep an excess of thon, usually one per 10 to 40 cows. Stated reasons for the castration of others are for important esthetic and cultural reasons, to reduce fighting, for easier control, and to prevent indiscriminant or repeat breeding of cows in heat (the latter regarded as detrimental to pregnancy and accurate genealogies).

Since then, pearl millet, rice, yams, and cowpeas have been confirmed to be crops indigenous to the area, contrary to the hypotheses of others. Multiple studies show a late expansion southwards, thus likely linking them to Niger-Congo speakers. Modern SSA genetics has likewise revealed farmer population expansion signals, similar to those of Neolithic ancestry in Europeans, that fit the late date of agriculture in the region.

Renfrew

Rindermann made multiple remarks on Africa’s “exemplars”, trying to construct a sort of perpetual gap since the Paleolithic by citing Renfrew’s Neuroscience, evolution and the sapient paradox: the factuality of value and of the sacred. However, Renfrew doesn’t quite support the comparisons he made, and argues a whole different point.

The discovery of clearly intentional patterning on fragments of red ochre from the Blombos Cave (at ca 70 000 BP) is interesting when discussing the origins of symbolic expression. But it is entirely different in character, and very much simpler than the cave paintings and the small carved sculptures which accompany the Upper Palaeolithic of France and Spain (and further east in Europe) after 40 000 BP.[….]

It is important to remember that what is often termed cave art—the painted caves, the beautifully carved ‘Venus’ figurines—was during the Palaeolithic (i.e. the Pleistocene climatic period) effectively restricted to one developmental trajectory, localized in western Europe. It is true that there are just a few depictions of animals in Africa from that time, and in Australia also. But Pleistocene art was effectively restricted to Franco-Cantabria and its outliers.

It was not until towards the end of the Pleistocene period that, in several parts of the world, major changes are seen (but see Gamble (2007) for a more nuanced view, placing more emphasis upon developments in the Late Palaeolithic). They are associated with the development of sedentism and then of agriculture and sometimes stock rearing. At the risk of falling into the familiar ‘revolutionary’ cliché, it may be appropriate to speak of the Sedentary Revolution (Wilson 1988; Renfrew 2007a, ch. 7).[….] Although the details are different in each area, we see a kind of sedentary revolution taking place in western Asia, in southern China, in the Yellow River area of northern China, in Mesoamerica, and coastal Peru, in New Guinea, and in a different way in Japan (Scarre 2005).

Weil (2014) paints a picture of African development in 1500, both relative to the rest of the world and heterogeneity within the continent itself, using as his indicators population density, urbanization, technological advancement, and political development. Ignoring North Africa, which was generally part of the Mediterranean world, the highest levels of development by many indicators are found in Ethiopia and in the broad swathe of West African countries running from Cameroon and Nigeria eastward along the coast and the Niger river. In this latter region, the available measures show a level of development just below or sometimes equal to that in the belt of Eurasia running from Japan and China, through South Asia and the Middle East, into Europe. Depending on the index used, West Africa was above or below the level of development in the Northern Andes and Mexico. Much of the rest of Africa was at a significantly lower level of development, although still more advanced than the bulk of the Americas or Australia.