Follow the Leader? Selfish Genes, Evolution, and Nationalism

1750 words

Yet we get tremendously increased phenotypic variation … because the form and variation of cells, what they produce, whether to grow, to move, or what kind of cell to become, is under control of a whole dynamic system, not the genes. (Richardson, 2017: 125)

In 1976 Richard Dawkins published his groundbreaking book The Selfish Gene (Dawkins, 1976). In the book, Dawkins argues that selection occurs at the level of the gene—“the main theme of his book is a metaphorical account of competition between genes …” (Midgley, 2010: 45). Others then took note of the new theory and attempted to integrate it into their thinking. But is it as simple as Dawkins makes it out to be? Are we selfish due to the genes we carry? Is the theory testable? Can it be distinguished from other competing theories? Can it be used to justify certain behaviors?

Rushton, selfish genes, nationalism and politics

JP Rushton is a serious scholar, perhaps best known for attempting to use r/K selection theory to explain human behavior (Anderson, 1991). He perhaps made the most controversial use of Dawkins’ theory. The main axiom of the theory is that an organism is just a gene’s way of ensuring the survival of other genes (Rushton, 1997). Rushton’s genetic similarity theory therefore posits that those who are more genetically similar (who share more genes) will be more altruistic toward those with more similar genes even if they are not related, and will therefore show negative attitudes toward less genetically similar individuals. This is the genes’ “way” of propagating themselves through evolutionary time. Richardson (2017: 9-11) tells us of all of the different ways in which genes are invoked to attempt to justify X.

At the beginning of his career, Rushton was a social learning theorist studying altruism, even publishing a book on the matter, Altruism, Socialization and Society (Rushton, 1980). In it, Rushton reviews the sociobiological literature and concludes that altruism is a learned behavior. Rushton, though, seems to have shifted from a social learning perspective to a genetic determinist perspective in the years between the publication of Altruism, Socialization and Society and 1984, when he published his genetic similarity theory. So attempting to explain altruism through genes, while not part of Rushton’s original research programme, seems to me to be a natural evolution in his thought (however flawed it may be).

In an interview with Frank Miele for Skeptic, Dawkins responded to attempts to use his theory to justify nationalism and patriotism through an evolutionary lens:

Skeptic: How do you evaluate the work of Irenäus Eibl-Eibesfeldt, J.P. Rushton, and Pierre van den Berghe, all of whom have argued that kin selection theory does help explain nationalism and patriotism?

Dawkins: One could invoke a kind of “misfiring” of kin selection if you wanted to in such cases. Misfirings are common enough in evolution. For example, when a cuckoo host feeds a baby cuckoo, that is a misfiring of behavior which is naturally selected to be towards the host’s own young. There are plenty of opportunities for misfirings. I could imagine that racist feeling could be a misfiring, not of kin selection but of reproductive isolation mechanisms. At some point in our history there may have been two species of humans who were capable of mating together but who might have produced sterile hybrids (such as mules). If that were true, then there could have been selection in favor of a “horror” of mating with the other species. Now that could misfire in the same sort of way that the cuckoo host’s parental impulse misfires. The rule of thumb for that hypothetical avoiding of miscegenation could be “Avoid mating with anybody of a different color (or appearance) from you.”

I’m happy for people to make speculations along those lines as long as they don’t again jump that is-ought divide and start saying, “therefore racism is a good thing.” I don’t think racism is a good thing. I think it’s a very bad thing. That is my moral position. I don’t see any justification in evolution either for or against racism. The study of evolution is not in the business of providing justifications for anything.

This is similar to his reaction when Bret Weinstein remarked that the Nazis’ “behaviors” during the Holocaust “were completely comprehensible at the level of fitness,” that is, at the level of the gene. Dawkins replied: “I think nationalism may be an even greater evil than religion. And I’m not sure that it’s actually helpful to speak of it in Darwinian terms.” Weinstein’s framing is an example of what I like to call “rampant adaptationism.”

This is important because Rushton (1998) invokes Dawkins’ theory as justification for his genetic similarity theory (GST; Rushton, 1997), attempting to justify ethno-nationalism from a gene’s-eye view. Rushton did what Dawkins warned against: he used the theory to justify nationalism and patriotism. Rushton (1998: 486) states that “Genetic Similarity Theory explains why” ethnic nationalism has come back into the picture. Kin selection theory (which Rushton invoked alongside selfish gene theory) has numerous misunderstandings attached to it, and Rushton, too, was an offender (Park, 2007).

Dawkins (1981), in “Selfish genes in race or politics,” stated that “It is annoying to find this elegant and important theory being dragged down to the ephemeral level of human politics, and parochial British politics at that.” Rushton (2005: 494) responded that “feeling a moral obligation to condemn racism, some evolutionists minimised the theoretical possibility of a biological underpinning to ethnic or national favouritism.”

Testability?

The main premise of Dawkins’ theory is that evolution is gene-centered and that selection occurs at the level of the gene: genes that increase fitness will be selected for, while genes that are less fit are selected against. This “gene’s-eye view” of evolution states “that adaptive evolution occurs through differential survival of competing genes, increasing the allele frequency of those alleles whose phenotypic trait effects successfully promote their own propagation, with gene defined as ‘not just one single physical bit of DNA [but] all replicas of a particular bit of DNA distributed throughout the world.’”

Noble (2018) discusses “two fatal difficulties in the selfish gene version of neo-Darwinism”:

The first is that, from a physiological viewpoint, it doesn’t lead to a testable prediction. The only problem is that the central definition of selfish gene theory is not independent of the only experimental test of the theory, which is whether genes, defined as DNA sequences, are in fact selfish, i.e., whether their frequency in the gene pool increases (18). The second difficulty is that DNA can’t be regarded as a replicator separate from the cell (11, 17). The cell, and specifically its living physiological functionality, is what makes DNA be replicated faithfully, as I will explain later.

Noble (2017: 156) further elaborates in Dance to the Tune of Life: Biological Relativity:

Could this problem be avoided by attaching a meaning to ‘selfish’ as applied to DNA sequences that is independent of meanings in terms of phenotype? For example, we could say that a DNA sequence is ‘selfish’ to the extent to which its frequency in subsequent generations is increased. This at least would be an objective definition that could be measured in terms of population genetics. But wait a minute! The whole point of the characterisation of a gene as selfish is precisely that this property leads to its success in reproducing itself. We cannot make the prediction of a theory be the basis of the definition of the central element of the theory. If we do that, the theory is empty from the viewpoint of empirical science.

Dawkins’ theory is, therefore, “not a physiologically testable hypothesis” (Noble, 2011). Dawkins’ theory posits that the gene is the unit of selection, whereas the organism is only used to propagate the selfish genes. But “Just as Special Relativity and General Relativity can be succinctly phrased by saying that there is no global (privileged) frame of reference, Biological Relativity can be phrased as saying that there is no global frame of causality in organisms” (Noble, 2017: 172). Dawkins’ theory privileges the gene as the unit of selection, when there is no privileged unit of selection in multi-level biological systems (Noble, 2012).

In The Solitary Self: Darwin and the Selfish Gene, Midgley (2010) states: “The choice of the word ‘selfish’ is actually quite a strange one. This word is not really a suitable one for what Dawkins wanted to say about genetics because genes do not act alone.” As Dawkins later noted, “the cooperative gene” would have been a better description, while The Immortal Gene would have been a better title for the book. Midgley (2010: 16) states that Dawkins and Wilson (in The Selfish Gene and Sociobiology, respectively) “use a very simple concept of selfishness derived not from Darwin but from a wider background of Hobbesian social atomism, and give it a general explanation of all behaviour, including that of humans.” Dawkins and others claim that “the thing actually being selected was the genes” (Midgley, 2010: 47).

Conclusion

Developmental systems theory (DST) explains and predicts more than the neo-Darwinian Modern Synthesis (Laland et al, 2015). Dawkins’ theory is not testable. Indeed, the neo-Darwinian Modern Synthesis (and along with it Dawkins’ selfish gene theory) is dead; an extended synthesis explains evolution. As Fodor and Piattelli-Palmarini (2010a, b) and Fodor (2008) state, notably in What Darwin Got Wrong, natural selection is not mechanistic and therefore cannot select for genes or traits (see also Midgley’s 2010: chapter 6 discussion of Fodor and Piattelli-Palmarini). (Okasha, 2018, also discusses ‘selection-for’ genes and, specifically, Dawkins’ selfish gene theory.)

Dawkins’ theory was repurposed in attempts to argue for ethno-nationalism and patriotism, even though Dawkins himself opposes such uses. Of course, theories can be repurposed from their original uses, but here the repurposing is itself erroneous, as in Rushton, Russell and Wells (1984) and Rushton (1997, 1998). Since the theory is itself not testable (Noble, 2011, 2017), it should be dropped, along with all other theories that use it as their basis. While Rushton’s change from social learning to genetic causation regarding altruism is not out of character for his former research (he began his career as a social learning theorist studying altruism; Rushton, 1980), his use of the theory to explain why individuals and groups prefer those more similar to themselves ultimately fails, since it is “logically flawed” (Mealey, 1984: 571).

Genes ‘do’ what the physiological system ‘tells’ them to do; they are just inert, passive templates. What is active is the cell. The genome is an organ of the cell and is what is ‘immortal.’ Genes don’t “control” anything; they are used by and for the physiological system to carry out certain processes (Noble, 2017; Richardson, 2017: chapters 4, 5). There are new views of what ‘genes’ really are (Portin and Wilkins, 2017) and of what they are, and were, used for.

Development is dynamic and not determined by genes. Genes (DNA sequences) are followers, not leaders. The leader is the physiological system.

Mary Midgley on ‘Intelligence’ and its ‘Measurement’

1050 words

Mary Midgley (1919-2018) was a philosopher perhaps best known for her writing on moral philosophy and her rejoinders to Richard Dawkins after the publication of The Selfish Gene. Shortly before her death in October 2018, she published What Is Philosophy For? (on September 21st). In the book, she discusses ‘intelligence’ and its ‘measurement’ and comes to familiar conclusions.

‘Intelligence’ is not a ‘thing’ like, say, temperature and weight (though it is reified as one). Thermometers measure temperature, and this was verified without relying on the thermometer itself (see Hasok Chang, Inventing Temperature). Temperature can be measured in units like kelvin, Celsius, and Fahrenheit. Temperature reflects the average kinetic energy of the particles in a substance; ‘thermo’ means heat while ‘meter’ means to measure, so what a thermometer measures is how hot something is.

Scales measure weight. If energy balance is stable, weight will be stable too; eat too much or too little, and weight gain or loss will occur. But animals seem to have a body weight set point, which has been experimentally demonstrated (Leibel, 2008). In any case, what a scale measures is the overall weight of an object, which it does by measuring the force between the weighed object and the earth.

The whole concept of ‘intelligence’ is hopelessly unreal.

Prophecies [like those of people who work on AI] treat intelligence as a quantifiable stuff, a standard, unvarying, substance like granulated sugar, a substance found in every kind of cake — a substance which, when poured on in larger quantities, always produces a standard improvement in performance. This mythical way of talking has nothing to do with the way in which cleverness — and thought generally — actually develops among human beings. This imagery is, in fact, about as reasonable as expecting children to grow up into steamrollers on the ground that they are already getting larger and can easily be trained to stamp down gravel on roads. In both cases, there simply is not the kind of continuity that would make any such progress conceivable. (Midgley, 2018: 98)

We recognize the divergence of interests all the time when we are trying to find suitable people for different situations. Thus Bob may be an excellent mathematician but is still a hopeless sailor, while Tim, that impressive navigator, cannot deal with advanced mathematics at all. Which of them then should be considered the more intelligent? In real life, we don’t make the mistake of trying to add these people’s gifts up quantitatively to make a single composite genius and then hope to find him. We know that planners wanting to find a leader for their exploring expedition must either choose between these candidates or send both of them. Their peculiar capacities grow out of their special interests in topics, which is not a measurable talent but an integral part of their own character.

In fact, the word ‘intelligence’ does not name a single measurable property, like ‘temperature’ or ‘weight’. It is a general term like ‘usefulness’ or ‘rarity’. And general terms always need a context to give them any detailed application. It makes no more sense to ask whether Newton was more intelligent than Shakespeare than it does to ask if a hammer is more useful than a knife. There can’t be such a thing as an all-purpose intelligence, any more than an all-purpose tool. … Thus the idea of a single scale of cleverness, rising from the normal to beyond the highest known IQ, is simply a misleading myth.

It is unfortunate that we have got so used today to talk of IQs, which suggests that this sort of abstract cleverness does exist. This has happened because we have got used to ‘intelligence tests’ themselves, devices which sort people out into convenient categories for simple purposes, such as admission to schools and hospitals, in a way that seems to quantify their ability. This leads people to think that there is indeed a single quantifiable stuff called intelligence. But, for as long as these tests have been used, it has been clear that this language is too crude even for those simple cases. No sensible person would normally think of relying on it beyond those contexts. Far less can it be extended as a kind of brain-thermometer to use for measuring more complex kinds of ability. The idea of simply increasing intelligence in the abstract — rather than beginning to understand some particular kind of thing better — simply does not make sense. (Midgley, 2018: 100-101)

IQ researchers, though, take IQ to be a measure of a quantitative trait that can be measured in increments, like height, weight, and temperature. “So, in deciding that IQ is a quantitative trait, investigators are making big assumptions about its genetic and environmental background” (Richardson, 2000: 61). But the measure lacks validity, and hence there is no backing for the claim that it captures a quantitative trait and measures what they suppose it does.

Just because we refer to something abstract does not mean that it has a referent in the real world; just because we call something ‘intelligence’ and say that it is tested (however crudely) by IQ tests does not mean that it exists and that the test is measuring it. Thermometers measure temperature; scales measure weight; IQ tests… don’t measure ‘intelligence’ (whatever that is), they measure acculturated knowledge and skills. Howe (1997: 6) writes that psychological test scores are “an indication of how well someone has performed at a number of questions that have been chosen for largely practical reasons” while Richardson (1998: 127) writes that “The most reasonable answer to the question ‘What is being measured?’, then, is ‘degree of cultural affiliation’: to the culture of test constructors, school teachers and school curricula.”

But what does the word ‘intelligence’ refer to? The attempt to measure ‘intelligence’ fails, since such tests cannot be divorced from their cultural contexts. This won’t stop IQ-ists, though, from claiming that we can rank one’s mind as ‘better’ than another on the basis of IQ test scores, even if they can’t define ‘intelligence’. Midgley’s chapter, while short, gets straight to the point. ‘Intelligence’ is not a ‘thing’ like height, weight, or temperature. Height can be measured by a ruler; weight can be measured by a scale; temperature can be measured by a thermometer. Intelligence? Can’t be measured by an IQ test.

McNamara’s Morons

2650 words

The Vietnam War can be said to be the only war that America has lost. Because too few men volunteered for combat (and many young men obtained exemptions from service through their doctors and in many other ways), standards were lowered in order to meet quotas. The military recruited men with low test scores, some 357,000 of them, who came to be known as ‘McNamara’s Morons.’ With ‘mental standards’ lowered, the US had the men it needed to fight the war.

This decision was made by Secretary of Defense Robert McNamara and President Lyndon B. Johnson. It came to be known as ‘McNamara’s Folly,’ the title of a book on the subject (Hamilton, 2015). Hamilton (2015: 10) writes: “A total of 5,478 low-IQ men died while in the service, most of them in combat. Their fatality rate was three times as high as that of other GIs. An estimated 20,270 were wounded, and some were permanently disabled (including an estimated 500 amputees).”

Hamilton spends the first part of the book describing his friendship with a man named Johnny Gupton, who could neither read nor write. He spoke like a hillbilly and used hillbilly phrasing. According to Hamilton (2015: 14):

I was surprised that he knew nothing about the situation he was in. He didn’t understand what basic training was all about, and he didn’t know that America was in a war. I tried to explain what was happening, but at the end, I could tell that he was still in a fog.

Hamilton describes an instance in which the trainees were told not to write anything “raunchy” on the postcards they were to send home. As the sergeant put it, “Don’t be like that trainee who went through here and wrote ‘Dear Darlene. This is to inform you that Sugar Dick has arrived safely…’” (Hamilton, 2015: 16). Hamilton writes that while “There was a roar of laughter” from everyone else, Gupton did not ‘get’ the joke. Since Gupton could not read or write, Hamilton wrote his postcard for him, but Gupton did not know his own address; nor could he name a family member, saying only “Granny” and unable to give her full name. He could not tie his boots correctly, so Hamilton did it for him every morning. But he was a great boot-shiner, having the shiniest boots in the barracks.

Writing home to his fiancée, Hamilton (2015: 18) told her that Gupton’s dogtags “provide him with endless fascination.”

Gupton had trouble distinguishing between left and right, which prevented him from marching in step (“left, right, left, right”) and knowing which way to turn for commands like “left face!” and “right flank march!” So Sergeant Boone tied an old shoelace around Gupton’s right wrist to help him remember which side of his body was the right side, and he placed a rubber band on the left wrist to denote the left side of the body. The shoelace and the rubber band helped, but Gupton was a bit slow in responding. For example, he learned how to execute “left face” and “right face,” but he was a fraction of a second behind everyone else.

Gupton was also not able to make his bunk to Army standards, so Hamilton and another soldier did it for him. According to Hamilton, Gupton also could not distinguish between sergeants and officers. “Someone in the barracks discovered that Gupton thought a nickel was more valuable than a dime because it was bigger in size” (Hamilton, 2015: 26). After that, Hamilton took charge of Gupton’s money and rationed it out to him.

Hamilton then describes a time when a captain asked him what they were doing and what situation they were in, to which he gave the correct responses. A captain then asked Gupton “Which rank is higher, a captain or a general?” to which Gupton responded, “I don’t know, Drill Sergeant.” (He was supposed to say ‘Sir.’) The captain talking to Hamilton then said:

Can you believe this idiot we drafted? I tell you who else is an idiot. Fuckin’ Robert McNamara. How can he expect us to win a war if we draft these morons? (Hamilton, 2015: 27)

Captain Bosch’s contemptuous remark about Defense Secretary McNamara was typical of the comments I often heard from career Army men, who detested McNamara’s lowering of enlistment standards in order to bring low-IQ men into the ranks. (Hamilton, 2015: 28)

Hamilton heard one sergeant tell others that “Gupton should absolutely never be allowed to handle loaded weapons on his own” (Hamilton, 2015: 41). Gupton was then sent to kitchen duty, where for 16 hours (5 am to 9 pm) the men would peel potatoes, clean the floors, do the dishes, and so on.

Hamilton (2015: 45) then describes Murdoch, another member of “The Muck Squad” but from a different platoon, who “was unfazed by the dictatorial authority of his superiors.” When an officer screamed at him for not speaking or acting correctly, he would give an answer that was only slightly related. When asked one morning whether he had shaved, he “replied with a rambling of pronouncements about body odor and his belief that the sergeants were stealing his soap and shaving cream” (Hamilton, 2015: 45). He was thought to be faking insanity, but his behavior kept getting stranger; Hamilton was told that he would talk to an imaginary person in his bunk at night.

Murdoch was then told to find an electric floor buffer, and he “wandered around in battalion headquarters until he found the biggest office, which belonged to the battalion commander. He walked in without knocking or saluting or seeking permission to speak, and asked the commander—a lieutenant colonel—for a buffer.” In the office, he “proceeded to play with a miniature cannon and other memorabilia on the commander’s desk…” (Hamilton, 2015: 45). Murdoch was then found to have schizophrenia and was sent home on a medical discharge.

Right before the physical fitness tests that determined whether they qualified, young-looking sergeants shaved their heads and took the tests in the trainees’ place. Gupton got a 95 while Hamilton got an 80, which upset Hamilton because he knew he could have scored 100.

Hamilton nearly suffered heatstroke (with a 105-degree fever) and was separated from Gupton as a result. He eventually contacted someone who had spent time with Gupton. This person did not “remember much about Gupton except that he was protected by a friendly sergeant, who had grown up with a ‘mentally handicapped’ sister and was sensitive to his plight” (Hamilton, 2015: 51). This sergeant gave Gupton only menial jobs. Hamilton later discovered that Gupton had died in 2002, at age 57.

Because he had missed important days of training while sick with his fever, Hamilton was sent by his captain to the Special Training Company to get “rehabilitation” before returning to another training company. There the men had to do log drills and a Physical Combat Proficiency Test, which most of them failed. You needed 60 points per event to pass. The first event was crawling on dirt as fast as possible for 40 yards on your hands and knees. “Most of the men failed to get any points at all because they were disqualified for getting up on their knees. They had trouble grasping the concept of keeping their trunks against the ground and moving forward like supple lizards” (Hamilton, 2015: 59).

The second event was the horizontal ladder; imagine a jungle gym, or swinging like an ape through the trees. Hamilton, though by his own admission not strong, traversed 36 rungs in under a minute for the full 60 points. When he tried to show the others how to do it and watched them try, “none of the men were able to translate the idea into action” * (Hamilton, 2015: 60).

The third event was called run, dodge, and jump. They had to zig-zag, dodge obstacles, and side-step people and finally jump over a shallow ditch. To get the 60 points they had to make 2 trips in 25 seconds.

Some of the Special Training men were befuddled by one aspect of the course: the wooden obstacles had directional arrows, and if you failed to go in the right direction, you were disqualified. A person of normal intelligence would observe the arrows ahead of time and run in the right direction without pausing or breaking stride. But these men would hesitate in order to study the arrows and think about which way to go. For each second they paused, they lost 10 points. A few more men were unable to jump across the ditch, so they were disqualified. (Hamilton, 2015: 60-61)

Fourth was the grenade throw. They had to throw 5 training grenades 90 feet, with scoring similar to a dartboard: the closer to the bull’s-eye, the higher the score. They had to throw from one knee in order to simulate battle conditions, but “Most of the Special Training men were too weak or uncoordinated to come close to the target, so they got a zero” * (Hamilton, 2015: 61). Most of them tried to throw the grenade in a straight line, the way a baseball catcher throws, rather than in an arc, the way a center fielder throws to the catcher to get a runner out at home plate. “…the men couldn’t understand what he was driving at, or else they couldn’t translate it into action. Their throws were pathetic little trajectories” (Hamilton, 2015: 62).

Fifth was the mile run, which had to be completed in eight minutes and 33 seconds while wearing combat boots. The other men in his group would sprint at the start, tiring themselves out; according to Hamilton, they could not “grasp or apply what the sergeants told them about the need to maintain a steady pace (not too slow, not too fast) throughout the entire mile.”

Hamilton then discusses another instance in which sergeants told a soldier to pick up a cat that was behind the garbage can. But the cat turned out to be a skunk, and he spent the next two weeks in the hospital being treated for possible rabies. “He had no idea that the sergeants had played a trick on him.”

It was true that most of us were unimpressive physical specimens—overweight or scrawny or just plain unhealthy-looking, with unappealing faces and awkward ways of walking and running.

[…]

Sometimes trainees from other companies, riding by in trucks, would hoot at us and shout “morons!” and “dummies!” Once, when a platoon marched by, the sergeant led the men in singing,

If I had a low IQ,

I’d be Special Training, too!

(It was sung to the tune of the famous Jody songs, as in “Ain’t no use goin’ home/Jody’s got your girls and gone.”)

According to Hamilton, “One exception to the general unattractiveness” was Freddie Hensley. Freddie was consumed with “dread and anxiety” and was always sighing. He ended up being too slow to pass the rifle test with moving targets. Hamilton had wondered “why Freddie had been chosen to take the rifle test, but it soon dawned on me that he was selected because he was a handsome young man. Many people equate good looks with competence, and ugliness with incompetence. Freddie didn’t look like a dim bulb” (Hamilton, 2015: 72).

Freddy also didn’t know some ‘basic facts,’ such as that lightning causes thunder. “As Freddy and I sat together on foot lockers and looked out the window, I passed the time by trying to figure out how close the lightning was. … I tried to explain what I was doing, and I was not surprised that Freddy could not comprehend. What was surprising was my discovery that Freddy did not know that lightning caused thunder. He knew what lightning was, he knew what thunder was, but he did not know that one caused the other” (Hamilton, 2015: 72).

The test used while the US was in Vietnam was the AFQT (Armed Forces Qualification Test) (Maier, 1993: 1). As Maier (1993: 3) notes, as does Hamilton, men who chose to enlist could choose their occupation from a list, whereas those who were drafted had their occupation chosen for them.

For example, during the Vietnam period, the minimum selection standards were so low that many recruits were not qualified for any specialty, or the specialties for which they were qualified had already been filled by people with higher aptitude scores. These people, called no-equals, were rejected by the algorithm and had to be assigned by hand. Typically they were assigned as infantrymen, cooks, or stevedores. (Maier, 1993: 4)

Most of McNamara’s Morons

came from economically unstable homes with non-traditional family structures. 70% came from low-income backgrounds, and 60% came from single-parent families. Over 80% were high school dropouts, 40% read below a sixth grade level, and 15% read below a fourth grade level. 50% had IQs of less than 85. (Hsiao, 1989: 16-17)

Such tests were constructed from their very beginnings, though, to get this result.

… the tests’ very lack of effect on the placement of [army] personnel provides the clue to their use. The tests were used to justify, not alter, the army’s traditional personnel policy, which called for the selection of officers from among relatively affluent whites and the assignment of whites of lower socioeconomic status to lower-status roles and African-Americans at the bottom rung. (Mensh and Mensh 1991: 31)

Reading through this book, it is clear that the individuals Hamilton describes had learning disabilities. We do not need IQ tests to identify individuals who clearly suffer from learning disabilities and other abnormalities (Sigel, 1989). Jordan Peterson claims that the military won’t accept people with IQs below 83, while Gottfredson states that

IQ 85 is a second important minimum threshold because the U.S. military sets its minimum enlistment standards at about this level. (2004, 28)

The laws in some countries, such as the United States, do not allow individuals with IQs below 80 to serve in the military because they lack adequate trainability. (2004, 18)

What “laws” do we have here in America ***specifically*** to disallow “individuals with IQs below 80 to serve in the military”? ** Where are the references? Why do Peterson and Gottfredson both make unevidenced claims when the claim in question most definitely needs a reference?

McNamara’s Folly is a good book; it shows why we should not send people with learning, physical, or mental disabilities to war. However, from the descriptions Hamilton gives, we did not need to know their IQs to know that they could not be soldiers. It was clear as day that they weren’t all there, and their IQ scores are irrelevant to that. The people described in the book clearly have developmental disabilities; how is IQ causal in this regard? IQ is an outcome, not a cause (Howe, 1997).

Both Jordan Peterson and Linda Gottfredson claim that the military will not accept a recruit with an IQ score of 80 or below, but they both simply assert the claim, and searching through military papers did not turn up anything to validate it. In any case, IQ scores are not needed to learn that an individual has a learning disability (as those described in the book clearly had). The unevidenced claims from Gottfredson and Peterson should not be accepted. Moreover, one’s IQ is not causal in regard to one’s inability to, say, become a soldier; other factors are important, not a reified number we call ‘IQ.’ Their IQ scores were not their downfall.

* Note that if one does not have a good mind-muscle connection, then one won’t be able to carry out novel tasks such as what they went through on the monkey bars.

1/20/2020 Edit ** I did not look hard enough for a reference for the claims. It appears that there is indeed a law (10 USC Sec. 520) stating that those who score between the 1st and 9th percentiles on the AFQT (category V) are not trainable recruits. The ASVAB is not a measure of ‘general intelligence’, but is a measure of “acculturated learning” (Roberts et al, 2000). The ‘IQ test’ used in Murray and Herrnstein’s The Bell Curve was the AFQT, and it “best indicates poverty” (Palmer, 2018). This letter relates AFQT scores to the Wechsler and Stanford-Binet, where the cut-off is 71 for the S-B and 80 for the Wechsler (both are category V). Returning to Mensh and Mensh (1991), such tests were, from their very beginnings, used to justify the current military order, with lower-class recruits placed in more menial jobs.
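The Wechsler cut-off in the letter can be roughly sanity-checked with a percentile-to-score conversion. This is a sketch under stated assumptions: it assumes that AFQT Category V covers the 1st through 9th percentiles and that the comparison scale is normally distributed with mean 100 and SD 15. It only checks the Wechsler figure and does not attempt to reproduce the Stanford-Binet figure the letter gives.

```python
from statistics import NormalDist

# Assumption: a Wechsler-style scale normed to mean 100, SD 15.
WECHSLER = NormalDist(mu=100, sigma=15)


def iq_at_percentile(pct: float, scale: NormalDist = WECHSLER) -> float:
    """Return the score at a given percentile (0 < pct < 100) on a normally normed scale."""
    return scale.inv_cdf(pct / 100)


if __name__ == "__main__":
    # Assumption: Category V spans percentiles 1-9, so percentile 10 is the first score above it.
    for pct in (1, 9, 10):
        print(f"{pct:>2}th percentile -> IQ {iq_at_percentile(pct):.1f}")
```

Under these assumptions the 9th percentile lands at about 80, in line with the letter's Wechsler figure.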

The Oppression of the High IQs

1250 words

I’m sure most people remember their days in high school. Popular kids, goths, preppies, the losers, jocks, and the geeks are some of the groups you may find in the typical American high school. Each group most likely had a rival group that it didn’t like. For the geeks, the rivals are most likely the jocks. They get beat on, made fun of, and most likely sit alone at lunch.

Should there be legal protection for such individuals? One psychologist argues there should be. Sonja Falck from the University of East London specializes in high “ability” individuals and states that terms like “geek” and “nerd” should be treated as hate crimes and categorized under the same laws as homophobic, religious, and racial slurs. She even published a book on the subject, Extreme Intelligence: Development, Predicaments, Implications (Falck, 2019). (Also see The Curse of the High IQ; see here for a review.)

She wants anti-IQ slurs to be classified as hate crimes. Sure, being two percent of the population (on a constructed normal curve) does mean they are a “minority group”, just like those at the bottom two percent of the distribution. Some IQ-ists may say “If the bottom two percent are afforded special protections then so should the top two percent.”

While hostile or inciteful language about race, religion, sexuality, disability or gender identity is classed as a hate crime, “divisive and humiliating” jibes such as ‘smart-arse’, ‘smart alec’, and ‘know-it-all’ are dismissed as “banter” and used with impunity against the country’s high-IQ community, she said.

According to Dr Falck, being labelled a ‘nerd’ in the course of being bullied, especially as a child, can cause psychological damage that may last a lifetime.

Extending legislation to include so-called ‘anti-IQ’ slurs would, she claims, help stamp out the “archaic” victimisation of more than one million Britons with a ‘gifted’ IQ score of 132 or over.

Her views are based on eight years of research and after speaking to dozens of high-ability children, parents and adults about their own experiences.

Non-discrimination against those with very high IQ is also supported by Mensa, the international high IQ society and by Potential Plus UK, the national association for young people with high-learning potential. (UEL ACADEMIC: ANTI-IQ TERMS ARE HATE CRIME’S ‘LAST TABOO’)

I’m not going to lie—if I ever came across a job application and the individual had on their resume that they were a “Mensa member” or a member of some other high IQ club, it would go into the “No” pile. I would assume that is discrimination against high IQ individuals, no?

Is Dr. Falck implying that terms such as “smart arse”, “geek”, and “nerd” are similar to “moron” (a term with historical significance coined by Henry Goddard; see Dolmage, 2018), “idiot”, “dumbass”, and “stupid”, and that they should be afforded the same types of hate crime legislation? The latter terms carry a real history: people deemed to be “morons” or “idiots” were sterilized in America as the eugenics movement came to a head in the 1900s.

Low IQ individuals were sterilized in America in the 1900s, and the translated Binet-Simon test (and other, newer tests) were used for those ends. The Eugenics Board of North Carolina sterilized thousands of low IQ individuals in the 1900s—around 60,000 people were sterilized in total in America before the 1960s, and IQ was one way to determine who to sterilize. Sterilization in America (which is not common knowledge) continued up until the 80s in some U.S. states (e.g., California).

There was true, real discrimination against low IQ people during the 20th century, and so laws were enacted to protect them. Low IQ individuals, like the ‘gifted’, comprise 2 percent of the population (on a curve constructed by the tests’ makers), and they are afforded protection by the law. Therefore, states the IQ-ist, high IQ individuals should be afforded protection by the law too.
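The “2 percent” at each end is a property of how the scale is built: scores are normed to a bell curve, so the share above mean + 2 SD and the share below mean − 2 SD are equal by construction. A minimal sketch using Python's standard `statistics.NormalDist`, assuming a mean of 100 and an SD of 15, and assuming that the ‘gifted’ cut-off of 132 quoted earlier sits on a scale with SD 16:

```python
from statistics import NormalDist


def tail_shares(k_sd: float = 2.0, mean: float = 100.0, sd: float = 15.0) -> tuple[float, float]:
    """Return the shares of a normed score distribution above mean + k*SD and below mean - k*SD."""
    dist = NormalDist(mu=mean, sigma=sd)
    upper = 1.0 - dist.cdf(mean + k_sd * sd)
    lower = dist.cdf(mean - k_sd * sd)
    return upper, lower


if __name__ == "__main__":
    # SD 15 scale: the 2-SD cut-offs are 130 and 70.
    top, bottom = tail_shares()
    print(f"above 130: {top:.2%}, below 70: {bottom:.2%}")

    # Assumed SD 16 scale: 132 sits at the same z-score (+2), hence the same tail share.
    print(f"above 132 (SD 16): {1.0 - NormalDist(100, 16).cdf(132):.2%}")
```

Both tails come out at roughly 2.3 percent; the symmetry is built into the norming rather than discovered in the population.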

But being called a ‘nerd’, ‘geek’, ‘smarty pants’, ‘dweeb’, ‘smart arse’, etc. (Falck calls these ‘anti-IQ words’) is not the same as being called terms that originated during the eugenic era of the U.S. Falck wants the term ‘nerd’ to be a ‘hate-term’, and she says the British Government should ‘force societal change’ and give special protections to those with high IQs. Yet people freely use terms like ‘moron’ and ‘idiot’ in everyday speech, along with the aforementioned terms cited by Falck.

Falck wants ‘intelligence’ to be afforded the same protections under the Equality Act of 2010 (even though ‘intelligence’ here just means scoring high on an IQ test and qualifying for Mensa; note that Mensans have a higher chance of psychological and physiological overexcitability; Karpinski et al, 2018). Now, Britain isn’t America (where we, thankfully, have free speech laws), but Falck wants there to be penalties for me if I call someone a ‘geek.’ How, exactly, is this supposed to work? What about my example above of putting a resume with ‘Mensa member’ in the “No” pile? Would that be discrimination? Or is it my choice as an employer who I want to work for me? Where do we draw the line?

By way of contrast, intelligence does not neatly fit within the definition of any of the existing protected characteristics. However, if a person is treated differently because of a protected characteristic, such as a disability, it is possible that derogatory comments regarding their intelligence might form part of the factual matrix in respect of proving less favourable treatment.

[…]

If the individual is suffering from work-related stress as a result of facing repeated “anti-IQ slurs” and related behaviour, they might also fall into the definition of disabled under the Equality Act and be able to bring claims for disability discrimination. (‘Anti-IQ’ slurs: Why HR should be mindful of intelligence-related bullying)

How would one know if the individual in question is ‘gifted’? Acting weird? They tell you? (How do you know if someone is a Mensan? Don’t worry, they’ll tell you.) Calling people names because they do X? That is ALL a part of workplace banter. Better call up OSHA! What does it even mean for one to be mistreated in the workplace due to their ‘high intelligence’? If there is someone that I work with and they seem to be doing things right, not messing up, and are good to work with, there will be no problem. On the other hand, if they start messing up and are bad to work with (say, they make things harder for the team by not being a team player), there will be a problem. And if their little quirks mean they have a ‘high IQ’ and I’m being an IQ bigot, then Falck would want there to be penalties for me.

I have yet to read the book (I will get to it after I read and review Murray’s Human Diversity and Warne’s Debunking 35 Myths About Human Intelligence; it is going to be a busy winter for me!), but the premise of the book seems strange: where do we draw the line on which ‘minority groups’ get afforded special protections? The proposal is insane; name-calling (such as the examples cited in the articles) is normal workplace banter (you, of course, need thick enough skin not to run to HR and rat your co-workers out). It seems like Mensa has one of their own out there, attempting to afford them protections that they do not need. High IQ people are clearly oppressed and discriminated against in society and so need to be afforded special protection by the law. (sarcasm)

This, though, just speaks to the insanity of special group protection and the law. I thought that this was a joke when I read these articles, and then I came across the book.

Genes and Disease: Reductionism and Knockouts

1600 words

Genetic reductionism refers to the belief that understanding our genes will have us understand everything from human behavior to disease. The behavioral genetic approach claims to be the best way to parse through social and biological causes of health, disease, and behavior. The aim of genetic reductionism is to reduce a complex biological system to the sum of its parts. While there was some value in doing so when our technology was in its infancy and we did learn a lot about what makes us “us”, the reductionist paradigm has outlived its usefulness.

If we want to understand a complex biological system then we shouldn’t use gene scores, heritability estimates, or gene sequencing. We should be attempting to understand how the whole biological system interacts with its surroundings—its environment.

Reductionists may claim that “gene knockout” studies can point us in the direction of genetic causation—“knockout” a gene and, if there are any changes, then we can say that that gene caused that trait. But is it so simple? Richardson (2000) puts it well:

All we know for sure is that rare changes, or mutations, in certain single genes can drastically disrupt intelligence, by virtue of the fact that they disrupt the whole system.

Noble (2011) writes:

Differences in DNA do not necessarily, or even usually, result in differences in phenotype. The great majority, 80%, of knockouts in yeast, for example, are normally ‘silent’ (Hillenmeyer et al. 2008). While there must be underlying effects in the protein networks, these are clearly buffered at the higher levels. The phenotypic effects therefore appear only when the organism is metabolically stressed, and even then they do not reveal the precise quantitative contributions for reasons I have explained elsewhere (Noble, 2011). The failure of knockouts to systematically and reliably reveal gene functions is one of the great (and expensive) disappointments of recent biology. Note, however, that the disappointment exists only in the gene-centred view. By contrast it is an exciting challenge from the systems perspective. This very effective ‘buffering’ of genetic change is itself an important systems property of cells and organisms.

Moreover, even when a difference in the phenotype does become manifest, it may not reveal the function(s) of the gene. In fact, it cannot do so, since all the functions shared between the original and the mutated gene are necessarily hidden from view. … Only a full physiological analysis of the roles of the protein it codes for in higher-level functions can reveal that. That will include identifying the real biological regulators as systems properties. Knockout experiments by themselves do not identify regulators (Davies, 2009).

All knocking out or changing genes/alleles will do is show us that a trait T is correlated with a gene G, not that T is caused by G. Merely observing a correlation between a change in genes (or a knockout) and a change in phenotype will tell us nothing about biological causation. Reductionism will not have us understand the etiology of disease, as the discipline of physiology is not reductionist at all; it is a holistic discipline.

Lewontin (2000: 12) writes in the introduction to The Ontogeny of Information: “But if I successfully perform knockout experiments on every gene that can be seen in such experiments to have an effect on, say, wing shape, have I even learned what causes the wing shape of one species or individual to differ from that of another? After all, two species of Drosophila presumably have the same relevant set of loci.”

But the loss of a gene can be compensated by another gene—a phenomenon known as genetic compensation. In a complex bio-system, when one gene is knocked out, another similar gene may take the ‘role’ of the knocked-out gene. Noble (2006: 106-107) explains:

Suppose there are three biochemical pathways A, B, and C, by which a particular necessary molecule, such as a hormone, can be made in the body. And suppose the genes for A fail. What happens? The failure of the A genes will stimulate feedback. This feedback will affect what happens with the sets of genes for B and C. These alternate genes will be more extensively used. In the jargon, we have here a case of feedback regulation; the feedback up-regulates the expression levels of the two unaffected genes to compensate for the genes that got knocked out.

Clearly, in this case, we can compensate for two such failures and still be functional. Only if all three mechanisms fail does the system as a whole fail. The more parallel compensatory mechanisms an organism has, the more robust (fail-safe) will be its functionality.
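Noble's three-pathway example can be made concrete with a toy model. This is illustrative only: the pathway labels come from the quoted passage, but the all-or-nothing rule (any one surviving pathway, once up-regulated by feedback, is assumed to be enough to keep the molecule in production) is a simplifying assumption, not a model of any real hormone.

```python
from itertools import combinations

# Pathway labels follow the A/B/C example in the quoted passage.
PATHWAYS = ("A", "B", "C")


def molecule_made(knocked_out: frozenset) -> bool:
    """Toy assumption: any one surviving pathway, once up-regulated,
    is enough to keep the molecule in production (all-or-nothing output)."""
    return any(p not in knocked_out for p in PATHWAYS)


def knockout_table() -> dict:
    """Map every possible set of knocked-out pathways to whether the system still works."""
    return {
        frozenset(combo): molecule_made(frozenset(combo))
        for k in range(len(PATHWAYS) + 1)
        for combo in combinations(PATHWAYS, k)
    }


if __name__ == "__main__":
    for knocked, works in knockout_table().items():
        name = "+".join(sorted(knocked)) or "none"
        print(f"knocked out: {name:<6} functional: {works}")
```

Every single and double knockout leaves the system functional, so a screen of single knockouts would record no phenotype for A, B, or C even though each pathway contributes to making the molecule. Only the triple knockout fails.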

The Neo-Darwinian Synthesis has trouble explaining such compensatory genetic mechanisms, but the systems view (Developmental Systems Theory, DST) does not. Even if a knockout affects the phenotype, we cannot say that the gene outright caused the phenotype; the system was screwed up, and so it responded in that way.

The role of genetic networks in development became clear when geneticists used knockout techniques to disable genes known to be implicated in the development of characters, only to find that the phenotype remained unchanged. This, again, is an example of genetic compensation. Jablonka and Lamb (2005: 67) describe three reasons why the genome can compensate for the absence of a particular gene:

first, many genes have duplicate copies, so when both alleles of one copy are knocked out, the reserve copy compensates; second, genes that normally have other functions can take the place of a gene that has been knocked out; and third, the dynamic regulatory structure of the network is such that knocking out single components is not felt.

Using Waddington’s epigenetic landscape example, Jablonka and Lamb (2005: 68) go on to say that if you knocked a peg out, “processes that adjust the tension on the guy ropes from other pegs could leave the landscape essentially unchanged, and the character quite normal. … If knocking out a gene completely has no detectable effect, there is no reason why changing a nucleotide here and there should necessarily make a difference. The evolved network of interactions that underlies the development and maintenance of every character is able to accommodate or compensate for many genetic variations.”

Wagner and Wright (2007: 163) write:

“multiple alternative pathways . . . are the rule rather than the exception . . . such pathways can continue to function despite amino acid changes that may impair one intermediate regulator. Our results underscore the importance of systems biology approaches to understand functional and evolutionary constraints on genes and proteins.” (Quoted in Richardson, 2017: 132)

When it comes to disease, genes are said to be difference-makers; that is, the one gene difference or mutation is what causes the disease phenotype. Genes, of course, interact with our lifestyles, and they are implicated in the development of disease as necessary, not sufficient, causes. GWA studies (genome-wide association studies) have been all the rage for the past ten or so years. To find alleles ‘associated’ with a disease, GWA practitioners take healthy people and diseased people, genotype them, and then look for alleles that are more common in one group than in the other. Alleles more common in the disease group are said to be ‘associated’ with the disease, while alleles more common in the control group can be said to be protective against the disease (Kampourakis, 2017: 102). (This same process is how ‘intelligence’ is GWASed.)
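The case-control logic described above can be sketched as a simple allelic test at a single SNP. This is a minimal illustration, not how any particular GWAS pipeline works: real studies usually fit regression models with covariates across hundreds of thousands of SNPs. The allele counts below are hypothetical, and the 5 × 10⁻⁸ cut-off is the conventional genome-wide significance threshold. Nothing in the computation goes beyond comparing allele frequencies between two groups.

```python
import math
from dataclasses import dataclass


@dataclass
class AlleleCounts:
    """Allele counts at one SNP in one group (hypothetical data)."""
    variant: int  # copies of the allele under test
    other: int    # copies of the alternative allele

    @property
    def freq(self) -> float:
        return self.variant / (self.variant + self.other)


def allelic_test(cases: AlleleCounts, controls: AlleleCounts) -> tuple[float, float, float]:
    """Allelic odds ratio and 1-df chi-square test on the 2x2 table (assumes no zero counts)."""
    a, b = cases.variant, cases.other
    c, d = controls.variant, controls.other
    odds_ratio = (a * d) / (b * c)
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p_value = math.erfc(math.sqrt(chi2 / 2))  # upper tail of chi-square with 1 df
    return odds_ratio, chi2, p_value


def label(odds_ratio: float, p_value: float, alpha: float = 5e-8) -> str:
    """GWAS-style labelling: 'associated' if more common in cases, 'protective' if in controls."""
    if p_value >= alpha:
        return "no association"
    return "'associated' (risk)" if odds_ratio > 1 else "'protective'"


if __name__ == "__main__":
    # Hypothetical: 2,000 cases and 2,000 controls (4,000 alleles each), frequencies 0.35 vs 0.30.
    small = allelic_test(AlleleCounts(1400, 2600), AlleleCounts(1200, 2800))
    # Same frequencies, samples five times larger.
    large = allelic_test(AlleleCounts(7000, 13000), AlleleCounts(6000, 14000))
    for name, (odds, chi2, p) in (("small", small), ("large", large)):
        print(f"{name}: OR={odds:.2f}, chi2={chi2:.1f}, p={p:.1e} -> {label(odds, p)}")
```

With these made-up counts, a 35 versus 30 percent allele frequency difference in 2,000 cases and 2,000 controls falls short of the genome-wide threshold, while the same frequencies in samples five times larger get labelled ‘associated’.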

“Disease is a character difference” (Kampourakis, 2017: 132). So if disease is a character difference, and if differences in genes cannot explain the existence of different characters but can explain variation in characters, then the same must hold for disease.

“Gene for” talk is about the attribution of characters and diseases to DNA, even though it is not DNA that is directly responsible for them. … Therefore, if many genes produce or affect the production of the protein that in turn affects a character or disease, it makes no sense to identify one gene as the gene responsible “for” this character or disease. Single genes do not produce characters or disease … (Kampourakis, 2017: 134-135)

This all stems from the “blueprint metaphor”, the belief that the genome contains a blueprint for form and development. There are, however, no ‘genes for’ characters or diseases; therefore, genetic determinism is false.

Genes, in fact, are only weakly associated with most diseases. A new study (Patron et al, 2019) analyzed 569 GWA studies, looking at 219 different diseases. David Wishart (one of the co-authors) was interviewed by Reuters, where he said:

“Despite these rare exceptions [genes accounting for half of the risk of acquiring Crohn’s, celiac and macular degeneration], it is becoming increasingly clear that the risks for getting most diseases arise from your metabolism, your environment, your lifestyle, or your exposure to various kinds of nutrients, chemicals, bacteria, or viruses,” Wishart said.

…

“Based on our results, more than 95% of diseases or disease risks (including Alzheimer’s disease, autism, asthma, juvenile diabetes, psoriasis, etc.) could NOT be predicted accurately from SNPs.”

It seems like this is, yet again, another failure of the reductionist paradigm. We need to understand how genes interact in the whole human biological system, rather than reducing our system to the sum of its parts (‘genes’). Programs like this are premised on reductionist assumptions; it seems intuitive to think that many diseases are ‘caused by’ genes, as if genes are ‘in control’ of development. However, what is truly ‘in control’ of development is the physiological system, in which genes are used only as resources, not causes. The reductionist (neo-Darwinist) paradigm cannot really explain genetic compensation after knocking out genes, but the systems view can. The complexity of bio-systems allows them to buffer against developmental miscues and missing genes in order to complete the development of the organism.

Genes are not active causes; they are passive causes, resources, and therefore they cannot cause disease and characters.

Behavioral Geneticists are Silenced!: Public Perceptions on Genetics

2000 words

HBDers like to talk about this perception that their ideas are not really discussed in the public discourse; that the truth is somehow withheld from the public due to a nefarious plot to shield people from the truth that they so heroically attempt to get out to the dumb masses. They like to claim that the field and its practitioners are ‘silenced’, that they are rejected outright for ‘wrongthink’ ideas they hold. But if we look at what kinds of studies get out to the public, a different picture emerges.

The title of Cofnas’ (2019) paper is Research on group differences in intelligence: A defense of free inquiry; the title of Carl’s (2018) paper is How Stifling Debate Around Race, Genes and IQ Can Do Harm; and the title of Meisenberg’s (2019) paper is Should Cognitive Differences Research Be Forbidden? Meisenberg’s paper is the most direct response to my most recent article, which argues for banning IQ tests due to the class and racial bias they hold, bias that may then be used to enact undesirable consequences on a group that scores low. But, like all IQ-ists, these authors assume that IQ tests are tests of intelligence, which is a dubious assumption. In any case, these three authors seem to think there is a silencing of their work.

For Darwin200 (Darwin’s 200th birthday) back in 2009, the journal Nature asked the question “Should scientists study race and IQ?” Neuroscientist Steven Rose (2009: 788) said “No”, writing:

The problem is not that knowledge of such group intelligence differences is too dangerous, but rather that there is no valid knowledge to be found in this area at all. It’s just ideology masquerading as science.

Ceci and Williams (2009: 789) answered “Yes” to the question, writing:

When scientists are silenced by colleagues, administrators, editors and funders who think that simply asking certain questions is inappropriate, the process begins to resemble religion rather than science. Under such a regime, we risk losing a generation of desperately needed research.

John Horgan wrote in Scientific American:

But another part of me wonders whether research on race and intelligence—given the persistence of racism in the U.S. and elsewhere–should simply be banned. I don’t say this lightly. For the most part, I am a hard-core defender of freedom of speech and science. But research on race and intelligence—no matter what its conclusions are—seems to me to have no redeeming value.

And when he says that “research on race and intelligence … should simply be banned”, he means:

Institutional review boards (IRBs), which must approve research involving human subjects carried out by universities and other organizations, should reject proposed research that will promote racial theories of intelligence, because the harm of such research–which fosters racism even if not motivated by racism–far outweighs any alleged benefits. Employing IRBs would be fitting, since they were formed in part as a response to one of the most notorious examples of racist research in history, the Tuskegee Syphilis Study, which was carried out by the U.S. Public Health Service from 1932 to 1972.

At the end of the 2000s, journalist William Saletan was big in the ‘HBD-sphere’ due to his writings on race and sport and on race and IQ. But in 2018, after the Harris/Murray fiasco on Harris’ podcast, Saletan wrote:

Many progressives, on the other hand, regard the whole topic of IQ and genetics as sinister. That too is a mistake. There’s a lot of hard science here. It can’t be wished away, and it can be put to good use. The challenge is to excavate that science from the muck of speculation about racial hierarchies.

What’s the path forward? It starts with letting go of race talk. No more podcasts hyping gratuitous racial comparisons as “forbidden knowledge.” No more essays speaking of grim ethnic truths for which, supposedly, we must prepare. Don’t imagine that if you posit an association between race and some trait, you can add enough caveats to erase the impression that people can be judged by their color. The association, not the caveats, is what people will remember.

If you’re interested in race and IQ, you might bristle at these admonitions. Perhaps you think you’re just telling the truth about test scores, IQ heritability, and the biological reality of race. It’s not your fault, you might argue, that you’re smeared and misunderstood. Harris says all of these things in his debate with Klein. And I cringe as I hear them, because I know these lines. I’ve played this role. Harris warns Klein that even if we “make certain facts taboo” and refuse “to ever look at population differences, we will be continually ambushed by these data.” He concludes: “Scientific data can’t be racist.”

Of course “scientific data can’t be racist”, but the data can be used by racists for racist ends, and the tool used to collect the data could be inherently biased against certain groups, which means it favors certain other groups too.

Saletan claims that IQ tests can be ‘put to good use’, but it is “illogical” to think that the use of IQ tests was negative and then positive in other instances; it’s either one or the other, you cannot hold that IQ testing is good here and bad there.

Callier and Bonham (2015) write:

These types of assessments cannot be performed in a vacuum. There is a broader social context with which all investigators must engage to create meaningful and translatable research findings, including intelligence researchers. An important first step would be for the members of the genetics and behavioral genetics communities to formally and directly confront these challenges through their professional societies and the editorial boards of journals.

[…]

If traditional biases triumph over scientific rigor, the research will only exacerbate existing educational and social disparities.

Tabery (2015) states that:

it is important to remember that even if the community could keep race research at bay and out of the newspaper headlines, research on the genetics of intelligence would still not be expunged of all controversy.

IQ “science” is a subfield of behavioral genetics, so the overarching controversy is about ‘behavioral genetics’ (see Panofsky, 2014). Given how Cofnas (2019), Carl (2018), and Meisenberg (2019) talk about race and IQ, you would expect hardly any IQ research to be reported in mainstream outlets. But that’s not what we find. When we look at what is published about behavioral genetic studies compared to regular genetic studies, we find a stark contrast.

Society at large already harbors genetic determinist attitudes and beliefs, and what the mainstream newspapers put out then solidifies the false beliefs of the populace. Moreover, a populace better educated about genes and trait ontogeny will not necessarily be more supportive of new genetics research and discoveries; such people can even be critical of such studies (Etchegary et al, 2012). Schweitzer and Saks (2007) showed that the popular show CSI pushes false conceptions of genetic testing on the public, portraying DNA testing as quick and reliable and as the key to prosecuting many cases; about 40 percent of the ‘science’ used on CSI does not exist, and this, too, promulgates false beliefs about genetics in society. Lewis et al (2000) asked schoolchildren “Why are genes important?”: 73 percent responded that they are important because they determine characters, while 14 percent responded that they are important because they transfer information, but none spoke of gene products.

In the book Genes, Determinism and God, Denis Alexander (2017: 18) states that:

Much data suggest that the stories promulgated by the kind of ‘elite media’ stories cited previously do not act as ‘magic bullets’ to be instantly absorbed by the reader, but rather are resisted, critiqued or accepted depending on the reader’s economic interests, health and social status and access to competing discourses. A recurring theme is that people display a ‘two-track model’ in which they can readily switch between more genetic deterministic explanations for disease or different behaviors and those which favour environmental factors or human choice (Condit et al., 2009).

The so-called two-track model is simple: one holds genetic determinist beliefs about a certain trait, like heart disease or diabetes, but then contradicts oneself by stating that diet and exercise can ameliorate any future complications (Condit, 2010). Holding “behavioral causal beliefs” (that one’s behavior is causal in regard to disease acquisition), though, is associated with behavioral change (Nguyen et al, 2015). This seems to be an example of what Bo Winegard means when he uses the term “selective blank slatism” or “ideologically motivated blank slatism.” But if one’s ideology motivates one to accept or reject the claim that genes are causal in regard to health, intelligence, and disease, then by the same logic that motivation must be genetically mediated too. So how can we ever have objective science if people are biased by their genetics?

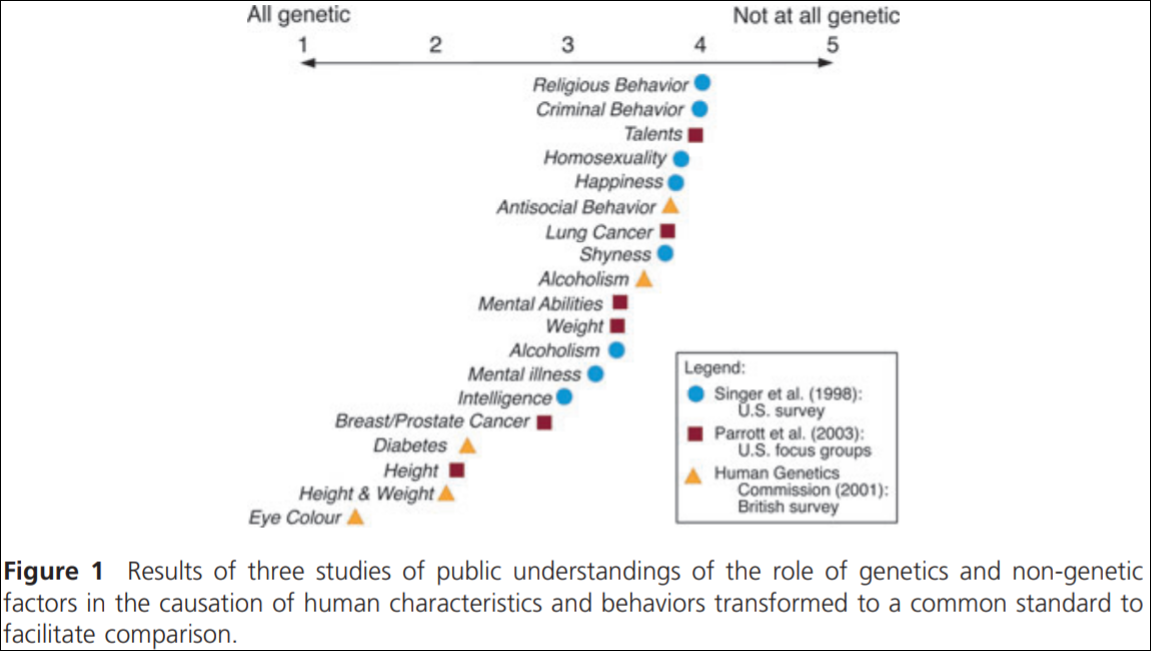

Condit (2011: 625) compiled a chart showing people’s attitudes to how ‘genetic’ a trait is or not:

Clearly, the public understands genes as playing more of a role when it comes to bodily traits and environment plays more of a role when it comes to things that humans have agency over—for things relating to the mind (Condit and Shen, 2011). “… people seem to deploy elements of fatalism or determinism into their worldviews or life goals when they suit particular ends, either in ways that are thought to ‘explain’ why other groups are the way they are or in ways that lessen their own sense of personal responsibility (Condit, 2011)” (Alexander, 2017: 19).

So, behavioral geneticists must be silenced, right? Bubela and Caulfield (2004: 1402) write:

Our data may also indicate a more subtle form of media hype, in terms of what research newspapers choose to cover. Behavioural genetics and neurogenetics were the subject of 16% of the newspaper articles. A search of PubMed on May 30, 2003, with the term “genetics” yielded 1 175 855 hits, and searches with the terms “behavioural genetics” and “neurogenetics” yielded a total of 3587 hits (less than 1% of the hits for “genetics”).
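The disproportion in this passage can be made explicit with a little arithmetic on the figures Bubela and Caulfield report. PubMed hit counts are only a rough proxy for the volume of research (search terms can overlap and miss synonyms), so the ratio below is an order-of-magnitude illustration under that assumption, nothing more.

```python
def overrepresentation(newspaper_share: float, topic_hits: int, all_hits: int) -> tuple[float, float]:
    """Return (topic share of the literature, newspaper share divided by literature share)."""
    literature_share = topic_hits / all_hits
    return literature_share, newspaper_share / literature_share


if __name__ == "__main__":
    # Figures from the quoted passage (PubMed searched May 30, 2003).
    lit_share, ratio = overrepresentation(
        newspaper_share=0.16,  # behavioural genetics + neurogenetics share of newspaper articles
        topic_hits=3_587,      # PubMed hits for "behavioural genetics" plus "neurogenetics"
        all_hits=1_175_855,    # PubMed hits for "genetics"
    )
    print(f"share of PubMed hits: {lit_share:.2%}")
    print(f"newspaper share is about {ratio:.0f} times the literature share")
```

The literature share works out to roughly 0.3 percent, so on these figures behavioural genetics and neurogenetics appear in newspaper coverage at roughly fifty times their share of the indexed literature.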

So Bubela and Caulfield (2004) found that 11 percent of the articles they looked at had moderately to highly exaggerated claims, while 26 percent were slightly exaggerated. Behavioral genetic and neurogenetic stories comprised 16 percent of the newspaper articles they examined, while behavioral genetics and neurogenetics accounted for less than one percent of the PubMed hits for “genetics”, which “might help explain why the reader gains the impression that much of genetics research is directed towards explaining human behavior; such copy makes newsworthy stories for obvious reasons” (Alexander, 2017: 17-18). Behavioral genetics research is indeed silenced!

(By @barrydeutsch)

Conclusion

The public perception of genetics seems to line up with that of genetics researchers in some ways but not in others. The public at large is bombarded with numerous messages per day, especially in the TV programs they watch (inundated with ad after ad). Certain researchers claim that ‘free inquiry’ into race and IQ is being hushed. To Cofnas (2019) I would say, “In virtue of what is it ‘free inquiry’ that we should study how a group handles an inherently biased test?” To Carl (2018) I would say, “What about the harm done by assuming that the hereditarian hypothesis is true and that IQ tests test intelligence, and by the funneling of minority children into EMR classes?” And to Meisenberg (2019) I would say, “The answer to the question ‘Should research into cognitive differences be forbidden?’ should be ‘Yes, it should be forbidden and banned, since no good can come from a test that was biased from its very beginnings.’” There is no ‘good’ that can come from using inherently biased tests, which is why the hereditarian-environmentalist debate on IQ is useless.

It is due to newspapers and other media outlets that people hold the beliefs about genetics that they do. Behavioral genetics studies are overrepresented in newspapers, and IQ is a subfield of behavioral genetics. Is contemporary research ignored in the mainstream press? Not at all. Recent articles on the social stratification of Britain have been in the mainstream press, so what are Cofnas, Carl, and Meisenberg complaining about? It seems to stem from a persecution complex: the desire to be seen as the new ‘Galileo’ who, in the face of oppression, told the truth that others did not want to hear, and whom they therefore attempted to silence.

Well, that's not what is going on here: behavioral genetics studies are constantly pushed in the mainstream press, so the complaints from the aforementioned authors mean nothing. Look to the newspapers and to the public's perception of genes to see that these authors' claims are false.

An Argument for Banning IQ Tests

1650 words

In 1979, a California judge ruled that the proliferation of IQ testing in the state was unconstitutional. Some claimed that the ruling discriminated against minority students, while others claimed that the ban would protect them from testing that is racially and culturally biased. The judge in Larry P. v. Riles (see Wade, 1980 for an exposition) sided with the parents, stating that IQ tests were both racially and culturally biased and that it was therefore unconstitutional to use them to place minority children into EMR (educable mentally retarded) classes.

While his decision applied to only one test used in one state (California), its implications are universal: if IQ tests are biased against a particular group, they are not only invalid for one use but for all uses on that group. Nor is bias a one-dimensional phenomenon. If the tests are biased against one or more groups, they are necessarily biased in favor of one or more groups — and so invalid. (Mensh and Mensh, 1991: 2)

In 1987 in The Washington Times, Jay Matthews reported:

Unbeknownst to her and most other Californians, a lengthy national debate over intelligence tests in public schools had just ended in the nation’s most populous state, and anti-test forces had won.

Henceforth, no black child in California could be given a state-administered intelligence test, no matter how severe the student’s academic problems. Such tests were racially and culturally biased, U.S. District Court Judge Robert F. Peckham had ruled in 1979. After losing in the 9th U.S. Circuit Court of Appeals last year, the state agreed not to give any of the 17 banned IQ (intelligence quotient) tests to blacks.

But one year later, in 1980, in another court case, Parents in Action on Special Ed, the court found that IQ tests were not discriminatory. However, that finding misses the point, because all of the items on IQ and similar tests are carefully chosen out of numerous trial items to get the types of score distributions that the test constructors want.

Although the ban on standardized testing for blacks in California was apparently lifted in the early 1990s, Fox News reported in 2004 that "Pamela Lewis wanted to have her 6-year-old son Nicholas take a standardized IQ test to determine if he qualifies for special education speech therapy. Officials at his school routinely provide the test to kids but as Lewis soon found out, not to children who are black, due to a statewide policy that goes back to 1979." The California Association of School Psychologists wants the ban on IQ tests for black children lifted, but the state is not budging.

There is an argument here, and I will formalize it.

Judge Peckham sided with the parents in Larry P. v. Riles, ruling that since IQ tests were racially and culturally biased against blacks, they should not be given to black children. He also stated that we can neither truly measure nor define intelligence. Because of this bias, the application of IQ testing was funneling more black children into EMR classrooms. All kinds of standardized tests have their origins in the IQ testing movement of the 1900s. There, it was decided which groups would or would not be deemed intelligent, and the tests were then constructed on this a priori assumption.

Let's assume that hereditarianism is true, as Gottfredson (2005) does. Gottfredson (2005: 318) writes that "We might especially target individuals below IQ 80 for special support, intellectual as well as material. This is the cognitive ability ("trainability") level below which federal law prohibits induction into the American military and below which no civilian jobs in the United States routinely recruit their workers." This seems reasonable enough on its face; some people are 'dumber' than others and so they deserve special treatment and education in order to maximize their abilities (or lack thereof). But hereditarianism is false and rests on false pretenses.

But if it were false and we believed it to be true, as the trend today seems to suggest, then we could enact undesirable social policies based on that false belief. Believing such falsehoods, while using IQ tests to prop up our a priori biases, can lead to social policies that may be destructive for a group that the IQ test 'deems' to be 'unintelligent.'

So if we believe something that's not true (say, that the Hereditarian Hypothesis is true and that IQ tests test one's capacity for intellectual ability), then destructive social policy may be enacted that further harms the low-scoring group in question. The debate between hereditarians and environmentalists has been ongoing for the past one hundred years, but both sides are arguing about tests with the conclusion already in mind. Environmentalists give weight and lend credence to the claim that IQ tests are measures of intelligence, holding that environmental factors predispose one to a low score, whereas hereditarians also claim that the tests are measures of intelligence but hold that genes significantly influence one's ability to be intelligent.

The belief that IQ tests test intelligence goes hand-in-hand with hereditarianism: since environmentalists lend credence to the Hereditarian Hypothesis by stating that environmental factors decrease intellectual ability, they are in effect co-signing the use of IQ tests as tests of ability. Whether we believe the Hereditarian or the Environmentalist Hypothesis to be true, we are still presuming that these tests measure intellectual ability, and that this ability is constrained by genes, environment, or a combination of the two.

So, if a certain social policy could be enacted that has devastating consequences for a social group's educational attainment, say, then why shouldn't we ban the tests that put a label on individuals that follows them for many years? Such labels can set off what is known as the Pygmalion effect. Rosenthal and Jacobson (1965) told teachers at the beginning of the academic year that a new test would predict which students would 'bloom' intellectually during the year. They told the teachers that their most gifted students had been chosen on the basis of this test, but those students were in fact randomly selected from 18 classrooms, and their true scores did not show that they were 'intellectual.' Those designated as 'bloomers' showed a 2-point increase in VIQ, 7 points in reasoning, and 4 points in FSIQ. The experiment shows that a teacher's beliefs about the abilities of their students affect the students' academic output; that is, the prophecy becomes self-fulfilling. (Also see Boser, Wilhelm, and Hanna, 2014.)

So if a teacher believes a student to be less 'intelligent', then, most likely, the prophecy will be fulfilled by virtue of the teacher's expectations of the student (the same can be said about maternal expectations; see Jensen and McHale, 2015). This could then lead to the student being placed into EMR classes and labeled for life, which would damage their life prospects. For instance, Ercole (2009: 5) writes that:

According to Schultz (1983), the expectations teachers have of their students inevitably effects the way that teachers interact with them, which ultimately leads to changes in the student’s behavior and attitude. In a classic study performed by Robert Rosenthal, elementary school teachers were given IQ scores for all of their students, scores that, unbeknownst to the teachers, did not reflect IQ and, in fact, measured nothing. Yet just as researchers predicted, teachers formed a positive expectation for those students who scored high on the exam vs. those who scored low (Harris, 1991). In response to these expectations, the teachers inevitably altered their environment in four ways (Harris, 1991): First, the teaching climate was drastically different depending on if a “smart” child asked questions, or offered answers, vs. if a “dumb” child performed the same behaviors. The former was met with warm and supportive feedback while the latter was not. Second, the amount of input a teacher gave to a “smart” student was much higher, and entailed more material being taught, vs. if the student was “dumb”. Third, the opportunity to respond to a question was only lengthened for students identified as smart. Lastly, teachers made much more of an effort to provide positive and encouraging feedback to the “smart” children while little attention/feedback was given to the “dumb” students, even if they provided the correct answer.

Conclusion

This is one of many reasons why such labeling does more harm than good—and always keep in mind that such labeling begins and ends with the advent of IQ testing in the 1900s. In any case, teachers—and parents—can influence the trajectory of students/children just by certain beliefs they hold about them. And believing that IQ=intelligence and that low scorers are somehow “dumber” than high scorers is how one gets ‘labeled’ which then follows them for years after the labeling.

Even though it is not explicitly stated, it is implicitly believed that the hereditarian hypothesis is true; believing this while also believing that IQ tests test intelligence is a recipe for disaster in the not-so-distant future. I only need to point to the uses of IQ testing at Ellis Island in the 1900s. I only need to point to the fact that American IQ tests have their origins in eugenic policies, and that such policies, which many American states and countries throughout the world took up, were premised on the IQ test assumption (Wahlsten, 1997; Kevles, 1999; Farber, 2008; Reddy, 2008; Grennon and Merrick, 2014). Many people supported sterilizing those with low IQ scores (Wilson, 2017: 46-47).

The formalized argument is here:

(P1) The Hereditarian Hypothesis is false

(P2) If the Hereditarian Hypothesis is false and we believed it to be true, then policy A could be enacted.

(P3) If Policy A is enacted, then it will do harm to group G.

(C1) If the Hereditarian Hypothesis is false and we believed it to be true and policy A is enacted, then it will do harm to group G (Hypothetical Syllogism, P2, P3).

(P4) If the Hereditarian Hypothesis is false and we believed it to be true and it would harm group G, then we should ban whatever led to policy A.

(P5) If Policy A is derived from IQ tests, then IQ tests must be banned.

(C2) Therefore, we should ban IQ tests (Modus Ponens, P4, P5).

The Frivolousness of the Hereditarian-Environmentalist IQ Debate: Gould, Binet, and the Utility of IQ Testing

1850 words

Hereditarians have argued that IQ gaps are mostly caused by genetic factors, with environment accounting for a small part of the gap, whereas environmentalists argue that the gaps can be fully accounted for by environmental factors such as access to resources, the educational attainment of parents, and so on. However, the debate is useless. It is useless not only because it props up a false dichotomy, but also because the tests get the results their constructors want.

Why the hereditarian-environmentalist debate is frivolous

This is because, when high-stakes tests were first created (e.g., the SAT in the mid-1920s), they were based on the first IQ tests brought to America. All standardized tests are based on the concept of IQ. Since the concept of IQ rests on presuppositions about the distribution of 'intelligence' in society, and high-stakes standardized tests are in turn based on that concept, those tests will, as a rule, be inherently biased. The SAT is even the "first offshoot of the IQ test" (Mensh and Mensh, 1991: 3). Nor are such tests objective, as is frequently claimed: "high-stakes, standardised testing has functions to mask the reality of structural race and class inequalities in the United States" (Au, 2013: 17; see also Knoester and Au, 2015).

The reason the debate between hereditarians and environmentalists is useless is simple: the first tests were constructed to produce the results the test constructors wanted. They assumed the distribution of test scores would be normal and created the test around that assumption, adding and removing items until they got the outcome they presupposed.

Sure, someone may say that "It's all genes and environment, so the debate is useless," but that's not what the debate is actually about. The debate isn't one of nature and nurture; it is a debate about tests created with prior biases in mind to attempt to justify certain social inequalities between groups. What these tests do is "sort human populations along socially, culturally, and economically determined lines" (Au, 2008: 151; cf. Mensh and Mensh, 1991). And it's these socially, culturally, and economically determined lines that the tests are based on. The constructors assume that people at the bottom must be less intelligent, and so they build the test around that assumption.

If the test constructors had different presuppositions about the nature and distribution of "intelligence," they would get different results. Hilliard (2012: 115-116) argues this in Straightening the Bell Curve, where she shows that South African IQ test constructors removed a 15-20 point difference between two white South African groups.

A consistent 15-20 point IQ differential existed between the more economically privileged, better educated, urban-based, English-speaking whites and the lower-scoring, rural-based, poor, white Afrikaners. To avoid comparisons that would have led to political tensions between the two white groups, South African IQ testers squelched discussion about genetic differences between the two European ethnicities. They solved the problem by composing a modified version of the IQ test in Afrikaans. In this way, they were able to normalize scores between the two white cultural groups.

This is, quite obviously, an admission from the test constructors themselves that score differences can be, and have been, built into and out of the tests based on prior assumptions.